Protect Your Social Media Data from AI Training: Opt-Out Options & Privacy Tips

In today’s digital-first world, social media is central to our daily lives. But did you know AI systems can train on the data you post? From photos to text posts, AI models are trained on vast amounts of user-generated content.

This guide breaks down how different social networks handle AI training and what you can do to opt out or limit AI access to your data.

Managing AI Training Data Usage: Opt-Out vs. No Toggle Platforms

TL;DR: Quick Summary

- Platforms That Let You Opt Out: Facebook, Instagram, X (Twitter), LinkedIn, GitHub (with settings to limit AI training).

- Platforms With No User Toggle: Medium, YouTube, Reddit, TikTok (default policies restrict or allow AI training).

- Key Steps: Adjust privacy settings, delete/edit old content, use private accounts where possible.

- Legal Context: GDPR & CCPA provide some protection, but opt-outs depend on platform policies.

Why Does AI Training Matter?

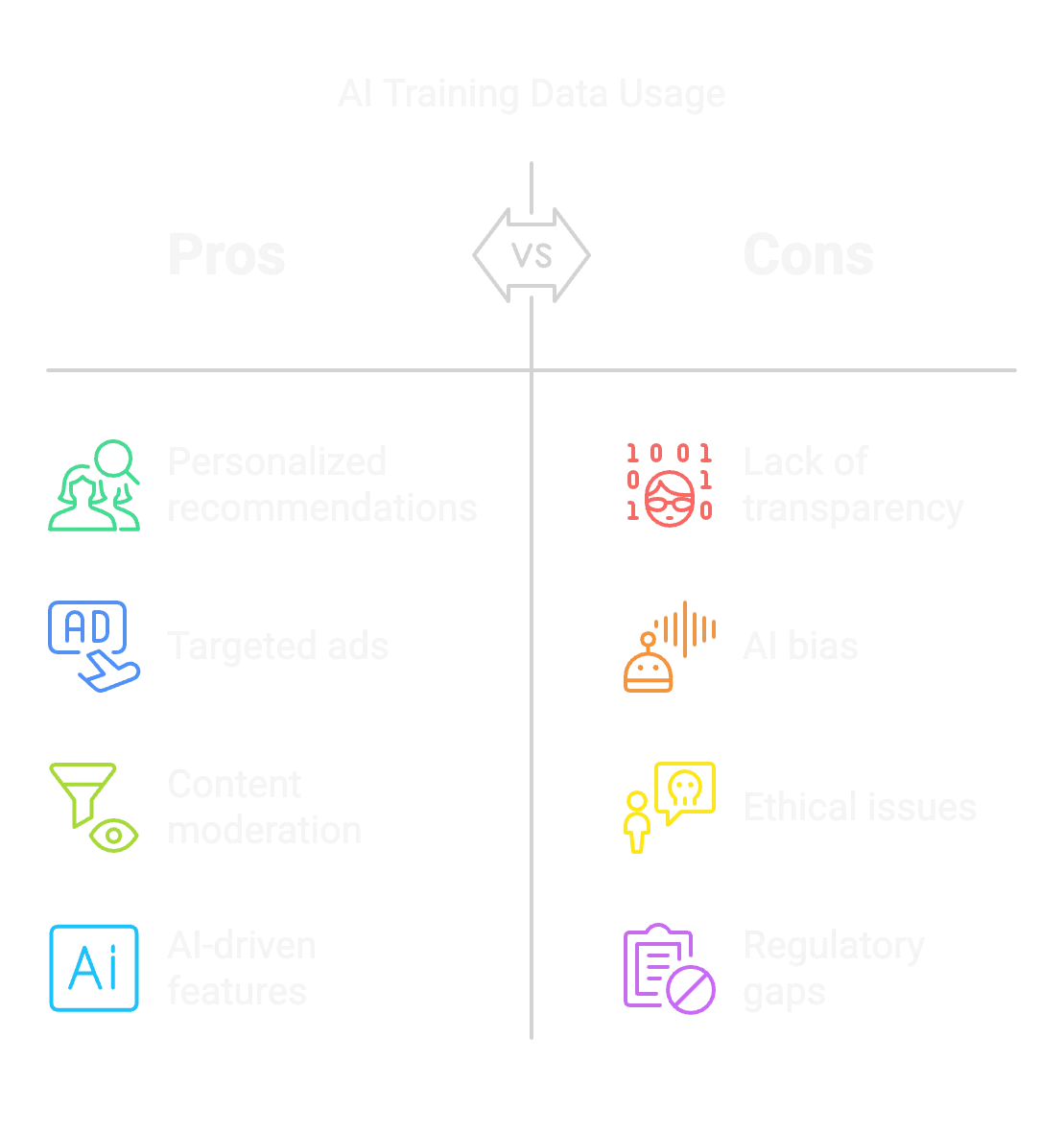

AI training enhances personalized recommendations, targeted ads, content moderation, and AI-driven features. While these benefits improve user experience, concerns arise around privacy, security, and consent.

Key Risks & Considerations

- Lack of Transparency: Some platforms use data without explicit user consent.

- AI Bias & Ethical Issues: Data misuse can lead to biased AI models.

- Regulatory Gaps: While GDPR and CCPA provide protections, enforcement varies.

Pros & Cons of Data Use in AI Training

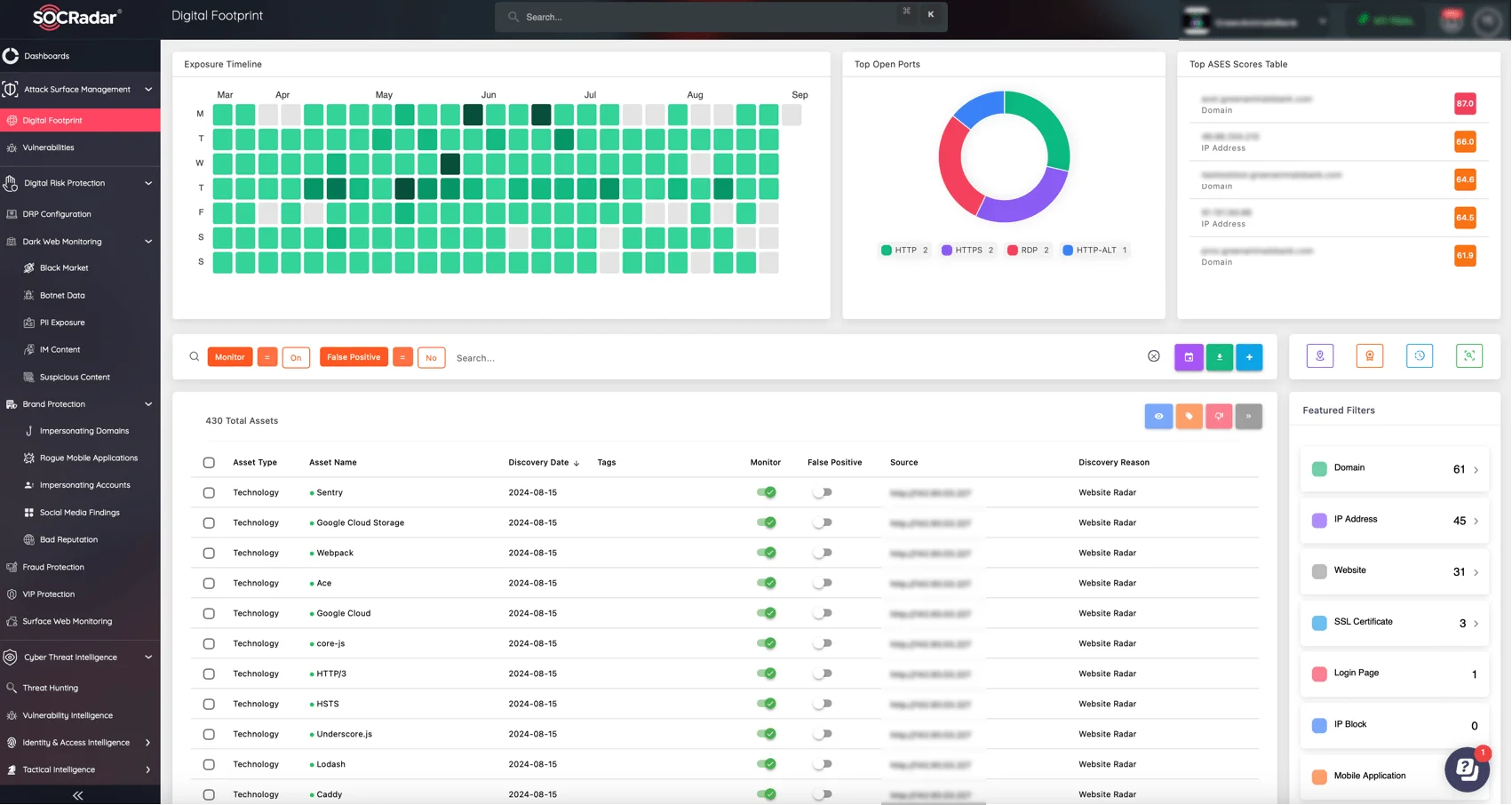

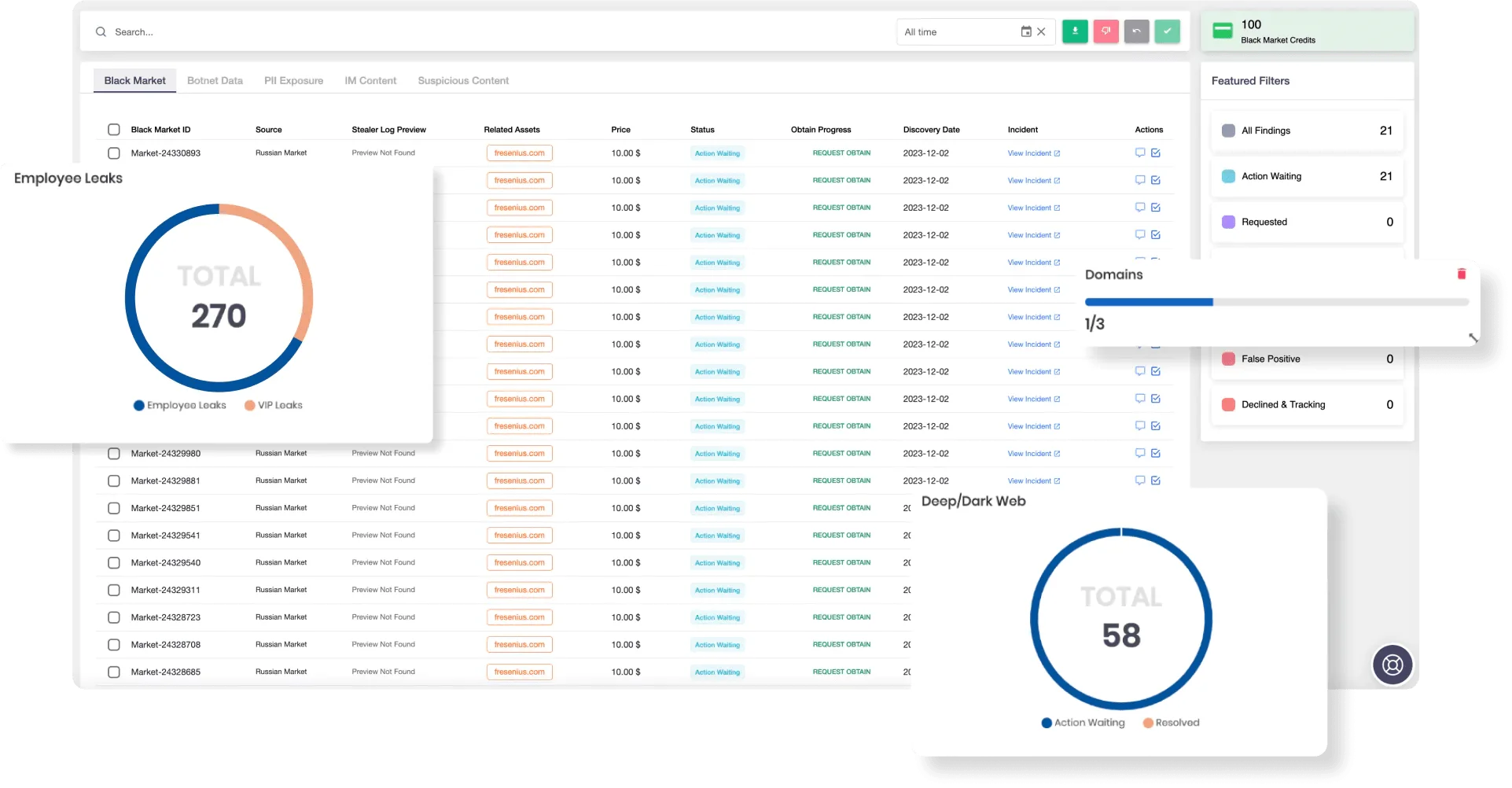

For users concerned about the broader risks of AI-powered data collection, gaining visibility into their digital footprint and monitoring online assets can provide essential layers of security. Solutions like SOCRadar’s Attack Surface Management help organizations stay ahead by offering Digital Asset Monitoring for effortless tracking of exposed assets and Digital Footprint insights to see their attack surface through the eyes of a hacker.

SOCRadar Attack Surface Management’s Digital Footprint insights

Group A: Platforms That Allow You to Opt Out of AI Training

Below are the social networks and tools that let you disable (or limit) AI training on your content. We’ve included step-by-step instructions for each.

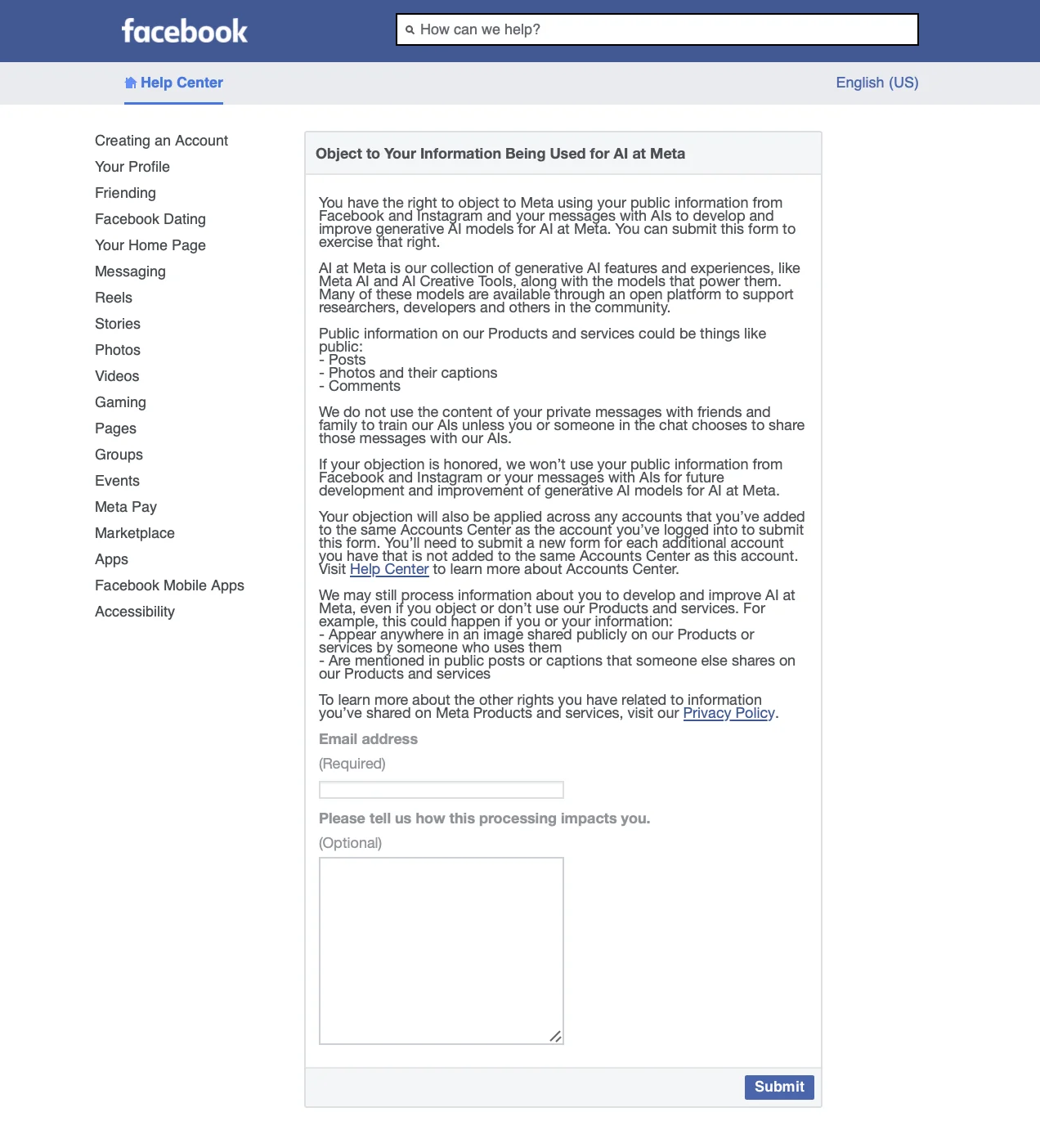

1. Meta (Facebook and Instagram)

- Default: Yes, your content may be used for AI training.

- Opt-Out Steps:

  - Go to Meta Privacy Center (Facebook/Instagram settings).

  - Navigate to AI Data Usage Settings.

  - Submit an opt-out request and confirm via email.

Facebook – Privacy Center

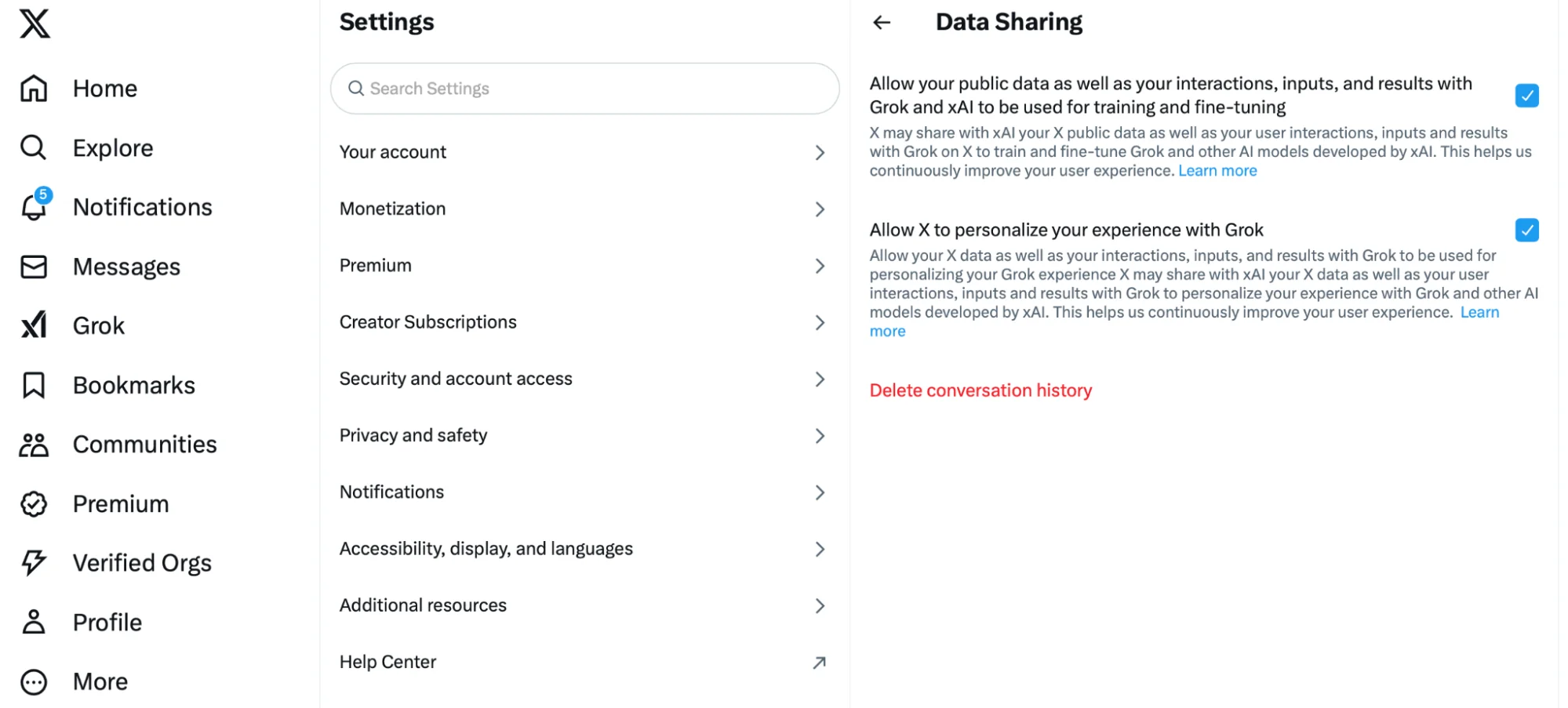

2. X (formerly Twitter)

- Default: Yes, public tweets may be used for AI training.

- Opt-Out Steps:

  - Go to Settings & Privacy > Privacy and Safety.

  - Select Data Sharing and AI Training.

  - Toggle off Allow AI Training on My Content.

X – Settings: Data Sharing

Note: Past tweets may still be part of AI datasets.

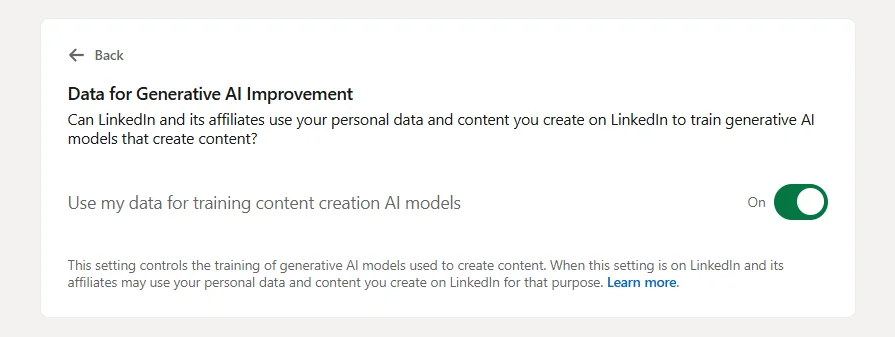

3. LinkedIn

- Default: Yes, LinkedIn may use your posts for AI training.

- Opt-Out Steps:

  - Access Settings & Privacy > Data Privacy.

  - Find AI Data Training.

  - Toggle off Allow AI Training on My Content.

LinkedIn – Settings & Privacy -> Data Privacy

Note: Opting out only prevents new data from being added to AI training sets; content already collected may remain in them.

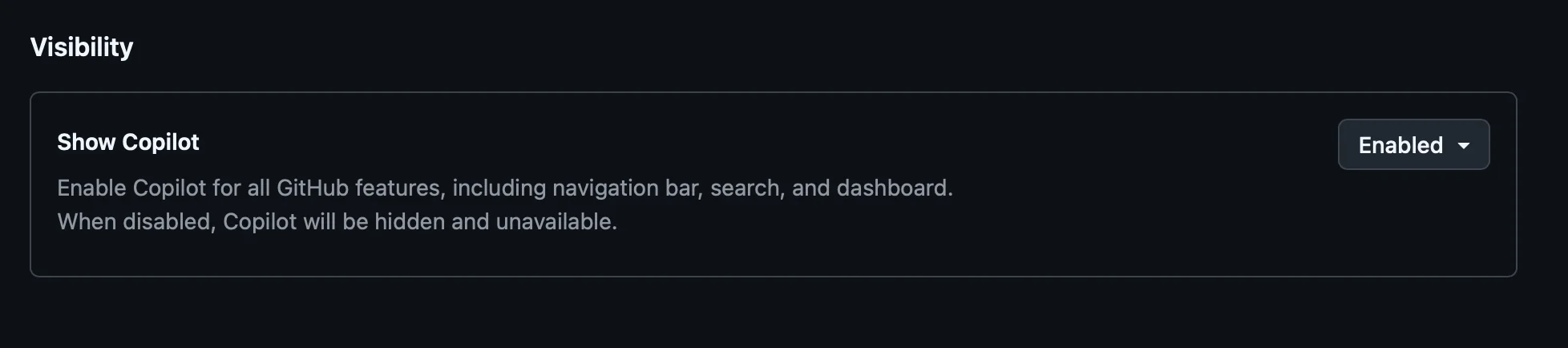

4. GitHub

- Default: Your public repositories may be used for AI model training.

- Opt-Out Steps:

  - Go to GitHub Settings > Privacy.

  - Find GitHub Copilot Data Usage.

  - Disable Allow AI Training on My Public Repositories.

Note: Previously trained models may still contain patterns learned from your code.

GitHub – Settings -> Privacy

Even after opting out, previously collected data may still exist in various datasets, including underground forums. SOCRadar’s Dark Web Monitoring can help detect if your data has surfaced in cybercriminal marketplaces.

SOCRadar’s Dark Web Monitoring Module

Group B: Platforms With Preset Policies (No Direct Toggle or Default Block)

1. Medium

- Default: No, Medium blocks AI training by default.

- What You Can Do: Edit or delete past content via Manage Stories.

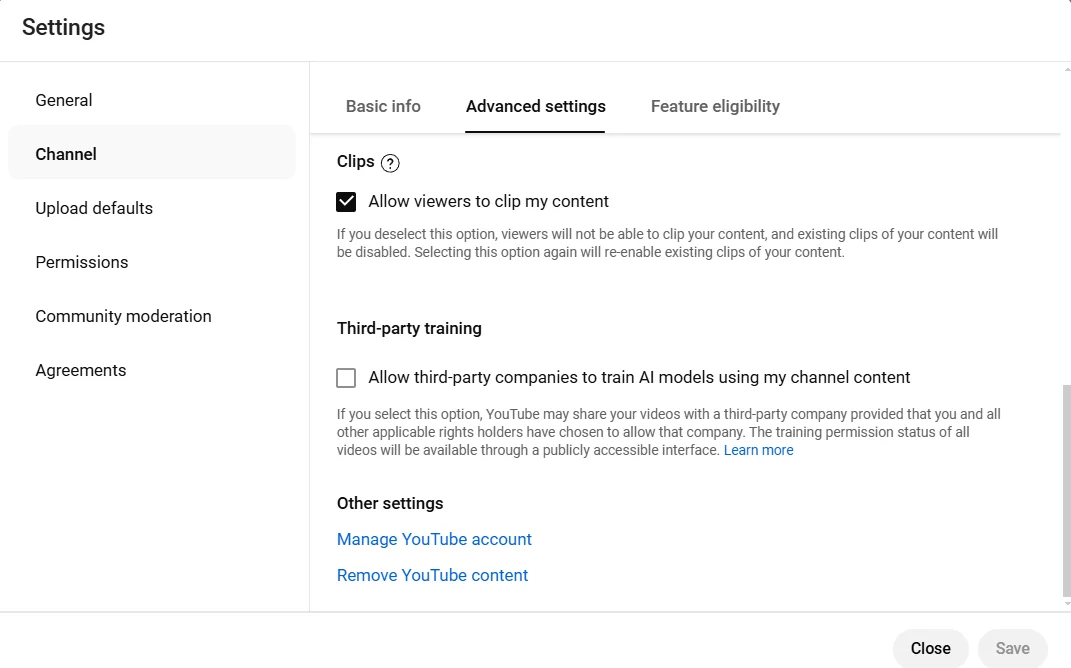

2. YouTube

- Default: No, third-party AI cannot train on YouTube content unless you opt in.

- What You Can Do: Adjust preferences in YouTube Studio under Settings > Channel > Advanced Settings > Third-party training.

YouTube – Settings -> Channel -> Advanced Settings

3. Reddit

- Default: No opt-out toggle; public posts are licensed to AI companies.

- What You Can Do:

  - Delete/edit old posts to limit what is available for future data collection; copies already shared may persist.

  - Use private subreddits to limit exposure.

4. TikTok

- Default: No official AI-specific opt-out setting.

- What You Can Do:

  - Set your account to Private.

  - Delete/edit past videos.

  - Disable personalized ads under Settings > Ads.
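For readers who track several accounts, the groupings in this guide can be captured as a small lookup table and turned into a personal checklist. The sketch below is only an illustration: the `PlatformPolicy` class, the `POLICIES` table, and both helper functions are hypothetical names introduced here, and the data is transcribed from this guide rather than fetched from any platform. Menu names change over time, so treat the entries as a starting point.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlatformPolicy:
    name: str
    has_opt_out_toggle: bool  # True = Group A, False = Group B in this guide
    actions: tuple            # steps suggested in this guide, in order


# Data transcribed from this guide (hypothetical structure, not an official API).
POLICIES = {
    p.name: p
    for p in (
        PlatformPolicy("Meta", True, ("Open Meta Privacy Center",
                                      "Submit an opt-out request")),
        PlatformPolicy("X", True, ("Toggle off AI training under Privacy and Safety",)),
        PlatformPolicy("LinkedIn", True, ("Toggle off AI training under Data Privacy",)),
        PlatformPolicy("GitHub", True, ("Review Copilot data usage under Privacy",)),
        PlatformPolicy("Medium", False, ("Edit or delete past content via Manage Stories",)),
        PlatformPolicy("YouTube", False, ("Review the third-party AI training preference",)),
        PlatformPolicy("Reddit", False, ("Delete/edit old posts",
                                         "Use private subreddits")),
        PlatformPolicy("TikTok", False, ("Set account to Private",
                                         "Delete/edit past videos",
                                         "Disable personalized ads")),
    )
}


def build_checklist(platforms):
    """Return (platform, action) pairs for the platforms a user is on."""
    checklist = []
    for name in platforms:
        policy = POLICIES.get(name)
        if policy is None:
            # The guide has no advice for this platform, so flag it for review.
            checklist.append((name, "Review the platform's privacy policy manually"))
            continue
        checklist.extend((name, action) for action in policy.actions)
    return checklist


def without_toggle(platforms):
    """Return the known platforms that offer no direct opt-out toggle."""
    return [n for n in platforms
            if n in POLICIES and not POLICIES[n].has_opt_out_toggle]


if __name__ == "__main__":
    for platform, action in build_checklist(["LinkedIn", "Reddit"]):
        print(f"[ ] {platform}: {action}")
```

Keeping the table as plain data makes it easy to update a single entry when a platform renames a setting, without touching the checklist logic.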

Legal Context: How GDPR & CCPA Protect You

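- GDPR (Europe): Requires platforms to have a lawful basis, such as consent or legitimate interest, for processing personal data, and gives users the right to object to certain processing.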

- CCPA (California): Allows users to request data deletion but doesn’t always block AI training.

While these regulations help, platform-specific policies ultimately dictate AI data use.

Conclusion

AI continues to reshape the digital landscape, making it crucial to understand how your data is used and what steps you can take to protect it. Adjusting platform settings, deleting old content, or choosing services that refuse AI training by default can help you regain control over your online identity.

Beyond adjusting platform settings, individuals and businesses can take proactive steps to monitor data exposure using advanced Threat Intelligence solutions. SOCRadar’s Extended Threat Intelligence (XTI) provides deep insights into digital risks, leaked credentials, and AI-driven data exposure. Staying informed, advocating for transparent policies, and using the right tools can help balance technological progress with personal data protection.

Sources

- Reddit

- Reddit User Agreement (Effective September 24, 2024. Last Revised September 24, 2024)

- Reddit Privacy Policy (Effective: August 16, 2024. Last Revised: August 16, 2024.)

- Reddit Public Content Policy

- Medium

- Medium Terms of Service

- Medium Rules

- Stubblebine, T. (2023, September 28). Default no to AI training on your stories: Fair use in the age of AI: Credit, compensation, and consent are required. Medium. Retrieved from https://blog.medium.com/default-no-to-ai-training-on-your-stories-abb5b4589c8

- YouTube

- YouTube Terms of Service

- Perez, S. (2024, December 16). YouTube will now let creators opt in to third-party AI training. TechCrunch. https://techcrunch.com/2024/12/16/youtube-will-let-creators-opt-out-into-third-party-ai-training/

- TikTok

- Privacy Policy (Last updated: Aug 19, 2024)

- Terms of Service (Last updated: November 2023; applies to users whose usual residence is in the US)

- Meta (Facebook/Instagram)

- Meta Terms of Service

- Clark, M. (2023, September 27). Privacy matters: Meta’s generative AI features. Meta Newsroom. Retrieved from https://about.fb.com/news/2023/09/privacy-matters-metas-generative-ai-features/

- Ward, A. (2025, January 31). How to turn off Meta AI: Facebook, Instagram, and WhatsApp. Metricool. Retrieved from https://metricool.com/opt-out-meta-ai-training/

- Sprinterra. (2024, June 17). Want to stop Facebook and Instagram from using your data for AI? Read this!. Retrieved from https://www.sprinterra.com/want-to-stop-facebook-and-instagram-from-using-your-data-for-ai-read-this/

- X (Twitter)

- Koetsier, J. (2024, July 26). Here’s how to stop X from using your data to train its AI. Forbes. Retrieved from https://www.forbes.com/sites/johnkoetsier/2024/07/26/x-just-gave-itself-permission-to-use-all-your-data-to-train-grok/

- LinkedIn

- LinkedIn User Agreement

- AleaIT Solutions Pvt. Ltd. (2024). How LinkedIn quietly uses your data to train AI and steps to opt out. LinkedIn. Retrieved from https://www.linkedin.com/pulse/how-linkedin-quietly-uses-your-data-train-ai-steps-opt-mqf0c/

- GitHub

- GitHub Terms of Service

- GitHub Docs on Excluding Repositories From Copilot Training