Is DeepSeek Safe? A Q&A on the Cybersecurity Risks of the AI Platform

[Update] March 3, 2025: “DeepSeek’s Training Data Exposed Nearly 12,000 Live API Keys”

[Update] February 4, 2025: “Malicious DeepSeek Packages on PyPI Exposed”

DeepSeek, a rising AI platform, has recently made headlines for both its technological advancements and its cybersecurity challenges. The platform, which quickly surpassed ChatGPT as the most downloaded AI app on Apple’s App Store, has also been targeted by a large-scale cyberattack. This incident, along with various security concerns raised by researchers, brings up critical questions about the risks associated with using DeepSeek.

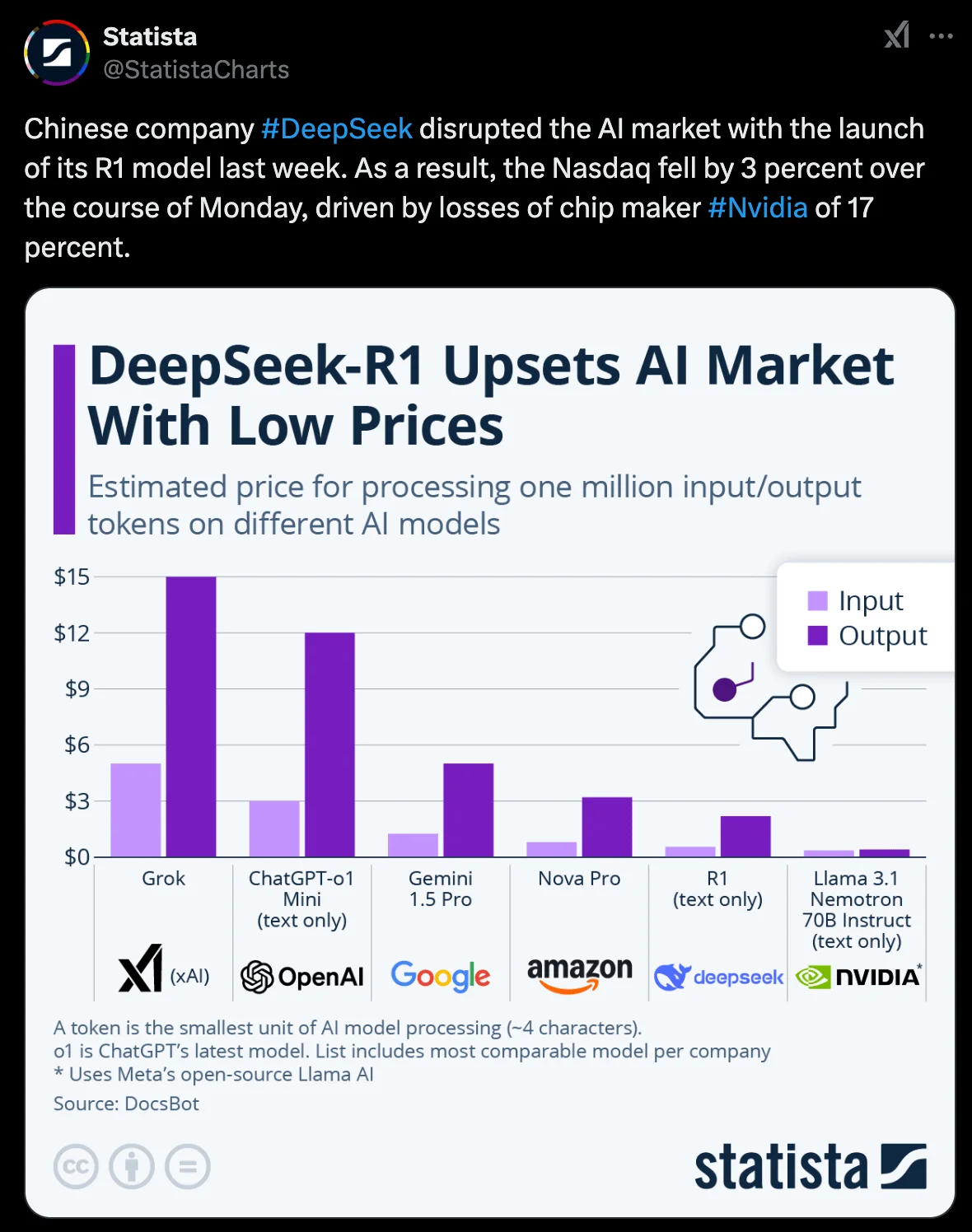

The release of DeepSeek’s free, open-source AI model also sent shockwaves through the stock market. NVIDIA shares plummeted 17%, marking the largest one-day loss of market value ever recorded for a single company. This dramatic financial impact underscores the growing influence of AI competition and the potential for geopolitical and economic shifts. Additionally, security researchers and government authorities have begun scrutinizing DeepSeek’s privacy practices, raising concerns about the extent of data collection and potential misuse.

In this article, we explore the key cybersecurity issues around DeepSeek, including malicious activity targeting its domain, its platform updates, and how it has changed the AI ecosystem.

What happened to DeepSeek?

DeepSeek recently suffered a large-scale cyberattack, believed to be a Distributed Denial-of-Service (DDoS) attack. This forced the company to disable new user registrations while maintaining access for existing users. The attack targeted DeepSeek’s API and web chat platform, causing service disruptions.

Updates on DeepSeek’s status page provided real-time details of the incident. A message read, “Due to large-scale malicious attacks on DeepSeek’s services, we are temporarily limiting registrations to ensure continued service.” Multiple monitoring efforts have been implemented to mitigate the impact, ensuring existing users remain unaffected.

A screenshot from DeepSeek’s status page, reflecting their ongoing monitoring of issues.

How does DeepSeek compare to other AI models in security?

While DeepSeek has achieved impressive performance, placing in the top ten on the Chatbot Arena benchmark rankings, the security concerns surrounding it are notable. It has surpassed leading models such as OpenAI’s GPT-4 and Anthropic’s Claude, but its open-source nature and weaker safeguards make it more susceptible to misuse. In particular, researchers have found that DeepSeek can generate dangerous and illegal content when manipulated with jailbreak techniques.

Additionally, DeepSeek’s open-source nature makes it more accessible to cybercriminals who may modify the model for malicious purposes. By contrast, proprietary AI models from OpenAI and Anthropic impose stricter safety mechanisms. These security gaps make DeepSeek particularly risky for enterprise applications where data confidentiality and compliance with regulations are critical.

How has DeepSeek’s rise impacted the AI industry?

DeepSeek’s rapid ascent has triggered a significant reaction in the tech sector. The launch of its open-source model led to a stock market shake-up, with AI-related stocks, including NVIDIA, experiencing record losses. The company’s ability to develop a powerful AI model with a budget of less than $6 million raises concerns about whether traditional AI leaders, who invest billions into development, can remain competitive.

Moreover, DeepSeek’s use of NVIDIA’s reduced-capability H800 chips has raised questions about whether high-end AI chips will remain a necessity for training advanced AI models. Some industry analysts believe that DeepSeek’s disruptive impact may accelerate a shift toward more cost-effective AI development strategies, ultimately reshaping the AI landscape.

The chart highlights the competitive pricing of DeepSeek compared to other major AI platforms, showcasing its disruptive low-cost model. The development has become a trending discussion on social platforms. (Shared by Statista on X)

Adding to the buzz, OpenAI CEO Sam Altman acknowledged DeepSeek’s R1 model as an impressive competitor, particularly for its cost-effectiveness. In a tweet, Altman stated, “DeepSeek’s R1 is an impressive model, particularly around what they’re able to deliver for the price… it’s legit invigorating to have a new competitor!”

Sam Altman, CEO of OpenAI, has also tweeted about the trending AI platform DeepSeek (X)

Additionally, the platform’s rise has sparked government scrutiny. US officials are now evaluating its national security implications, and some lawmakers have called for restrictions on AI models developed in China. Australian authorities are also expected to issue guidance regarding the risks of using DeepSeek.

What are the main security risks of using DeepSeek?

There are multiple cybersecurity risks currently associated with DeepSeek, including:

An overview of DeepSeek security risks

- Data Security Concerns: The platform collects user data, including email addresses, device information, IP addresses, and behavioral data. This data is stored in China, which has led to concerns over national security and potential state surveillance. While the company claims to use reasonable security measures, concerns remain about potential data access by cybercriminals or government authorities. China is a high-risk country for cybercrime, increasing the likelihood of data breaches and unauthorized surveillance. Governments, including the United States and Australia, are currently assessing the data privacy risks associated with DeepSeek.

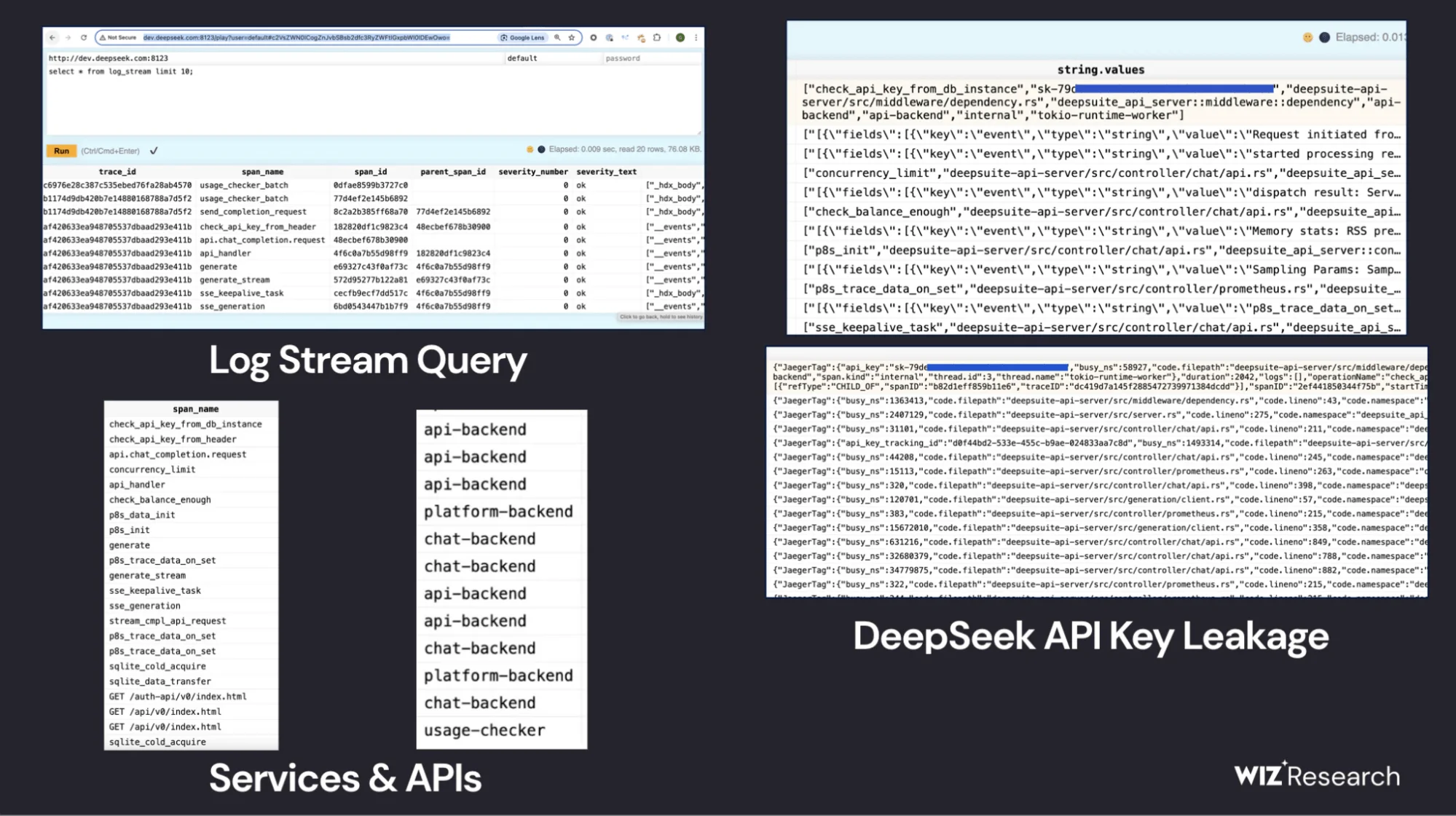

Exposed DeepSeek database showing log queries, services, APIs, and leaked API keys, posing security risks.

Recent findings have further intensified these concerns. Wiz Research uncovered a publicly exposed ClickHouse database belonging to DeepSeek, which was left open without authentication. This misconfiguration allowed unrestricted access to internal logs containing plain-text chat messages, secret keys, service data, and backend details. According to Wiz, the exposed database, dubbed “DeepLeak”, not only put sensitive information at risk but also created opportunities for data exfiltration and privilege escalation attacks.

- Fake Mobile Apps: Fraudulent versions of DeepSeek may appear on Android app stores, potentially containing malware. These fake apps can lead to unauthorized access to users’ devices, allowing cybercriminals to steal personal data or deploy ransomware.

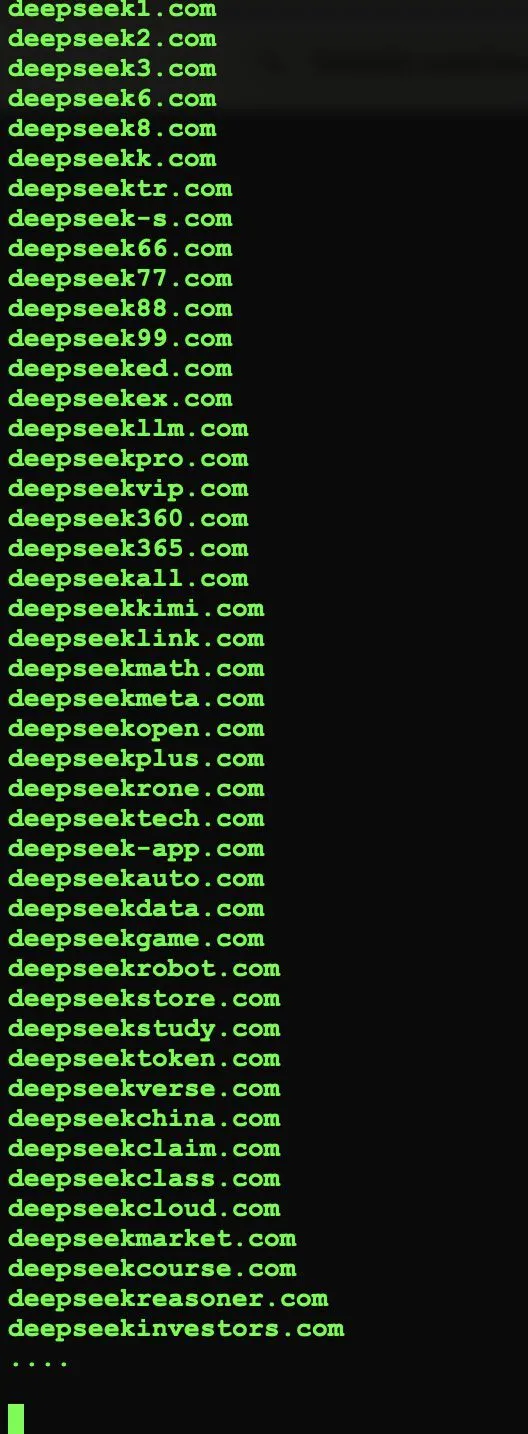

- Malicious Domains: Treating the platform’s disruptions as an opportunity, cybercriminals are creating fake websites that imitate DeepSeek, attempting to trick users into sharing sensitive data or downloading malicious software. An alarming number of domain variations, such as “deepseek1.com” and “deepseekpro.com”, have been registered, as shown in the malicious domain list below.

A snapshot of registered malicious domains associated with DeepSeek. Users are urged to verify they are on the official website before entering personal details.

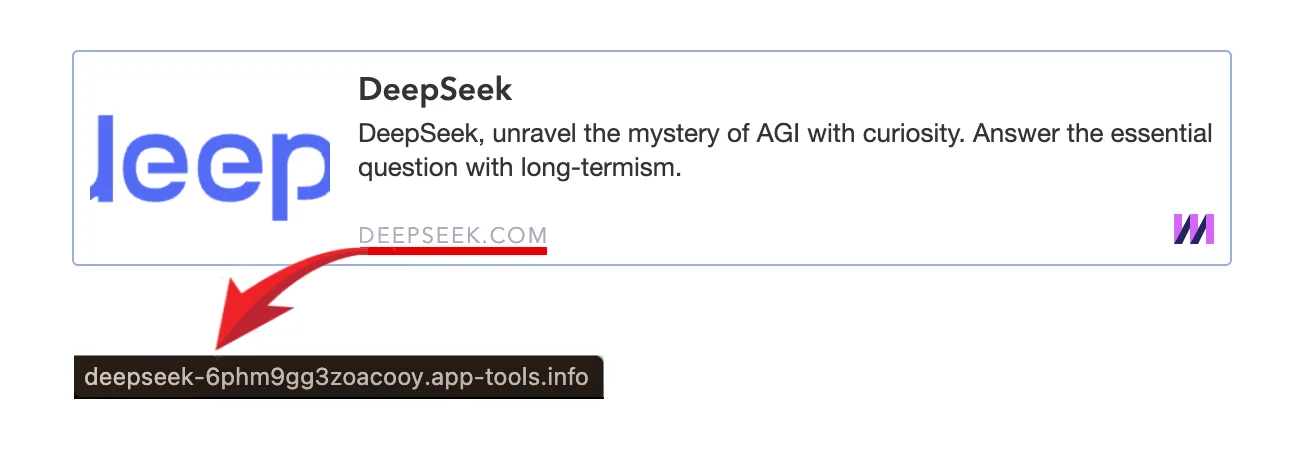

Redirecting users to a fake website impersonating DeepSeek

Another method targeting users involves a deceptive replica of DeepSeek’s homepage. Those attempting to access the platform may be redirected to an almost identical website, where the “Start Now” button is replaced with a “Download Now” button.

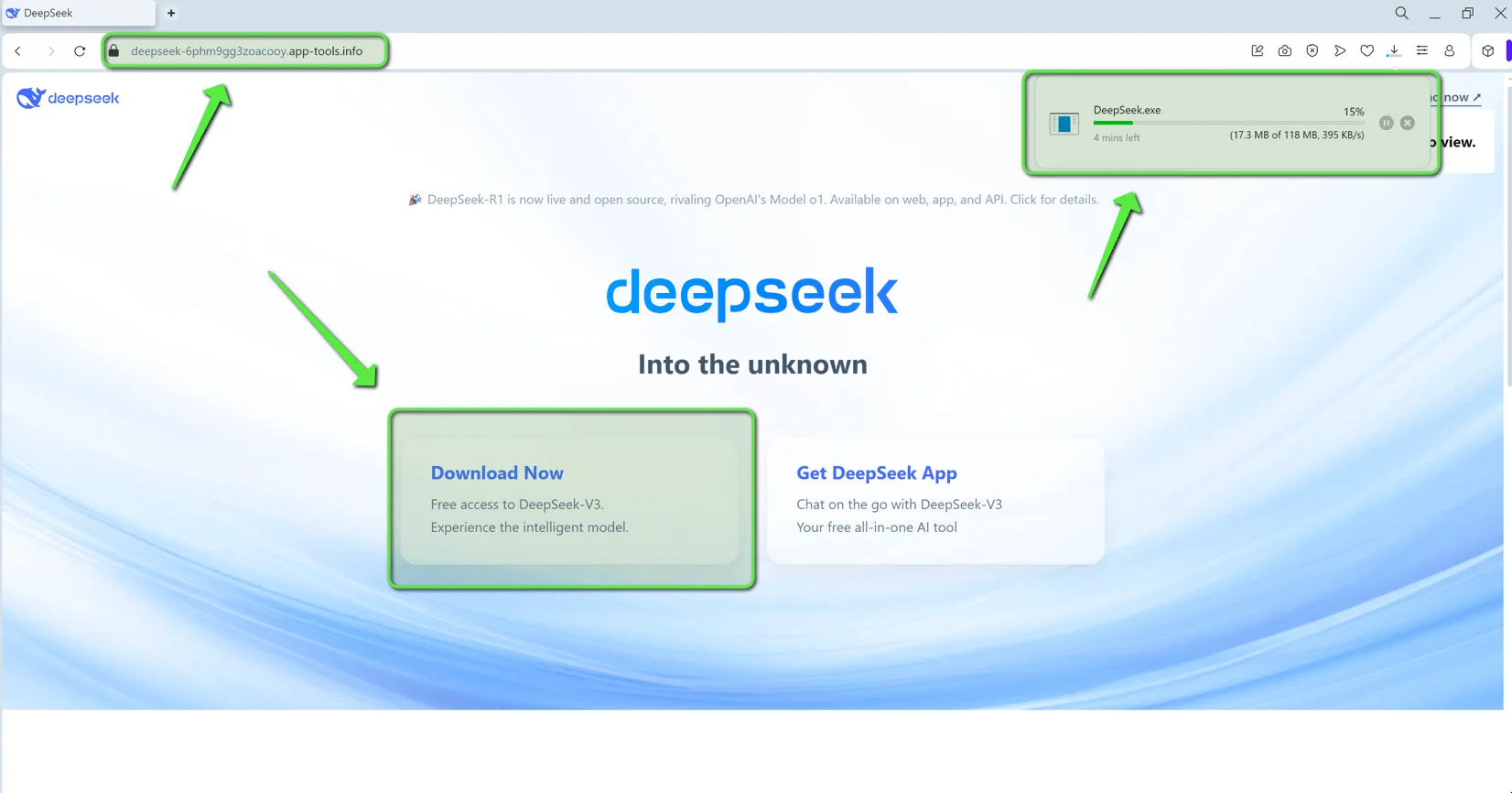

Fake DeepSeek website designed to trick users into downloading a malicious file

Clicking it triggers the download of a malicious .dmg or .exe file, which could be used for a variety of harmful purposes, such as deploying malware, gathering credentials, or enabling unauthorized access to the victim’s system. This fake website is primarily distributed through phishing emails and other deceptive tactics, broadening its potential impact.

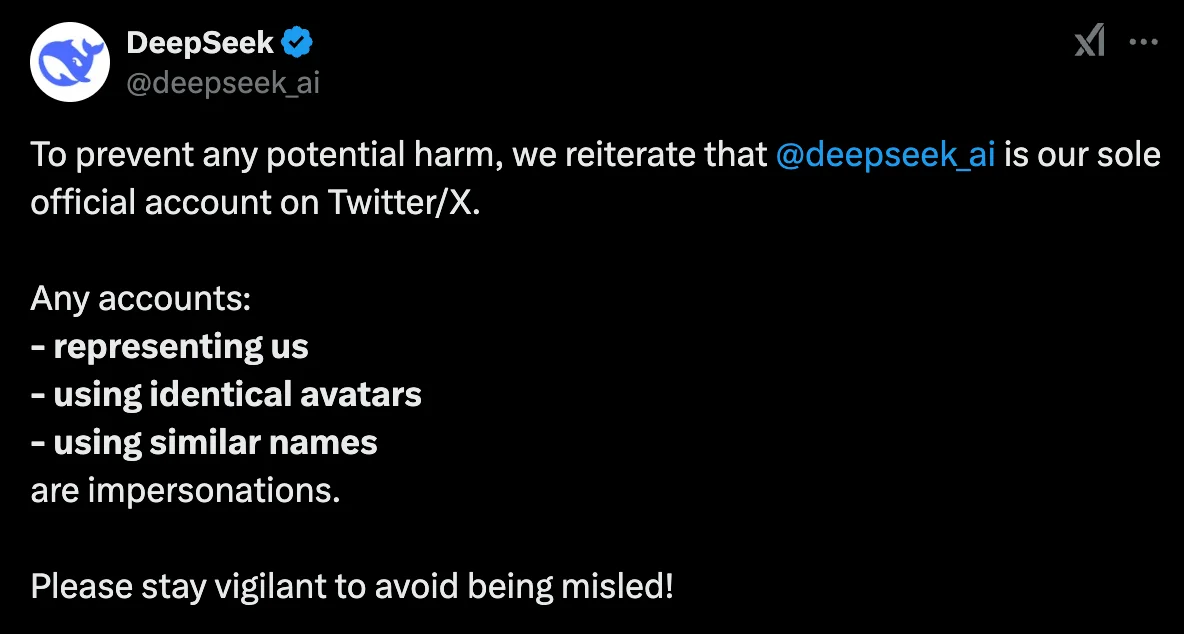

Threat actors are also creating impersonator accounts to mislead users. The company recently confirmed such incidents, emphasizing that its official account is @deepseek_ai on X.

The company warned its followers about the emergence of impersonating accounts (X)

Under the X post, a user shared a screenshot of a fraudulent account mimicking the company, highlighting the severity of this issue.

An example of an impersonator account as confirmed by the company, demonstrating how scammers use similar branding to deceive users

- AI Model Vulnerabilities & Jailbreaking Risks: Cybersecurity firm KELA has demonstrated that DeepSeek’s model can be jailbroken to generate harmful content, including ransomware development, toxic substance instructions, and fabrication of sensitive information. Such vulnerabilities expose users to significant legal and ethical risks. Unlike Western AI platforms, which implement stricter safety measures, DeepSeek has fewer guardrails preventing the generation of illegal content.

- Regulatory Challenges: Many countries have stringent AI safety regulations. Since DeepSeek lacks the strict compliance measures found in Western AI platforms, companies that integrate DeepSeek into their workflows may inadvertently expose themselves to legal and financial risks.

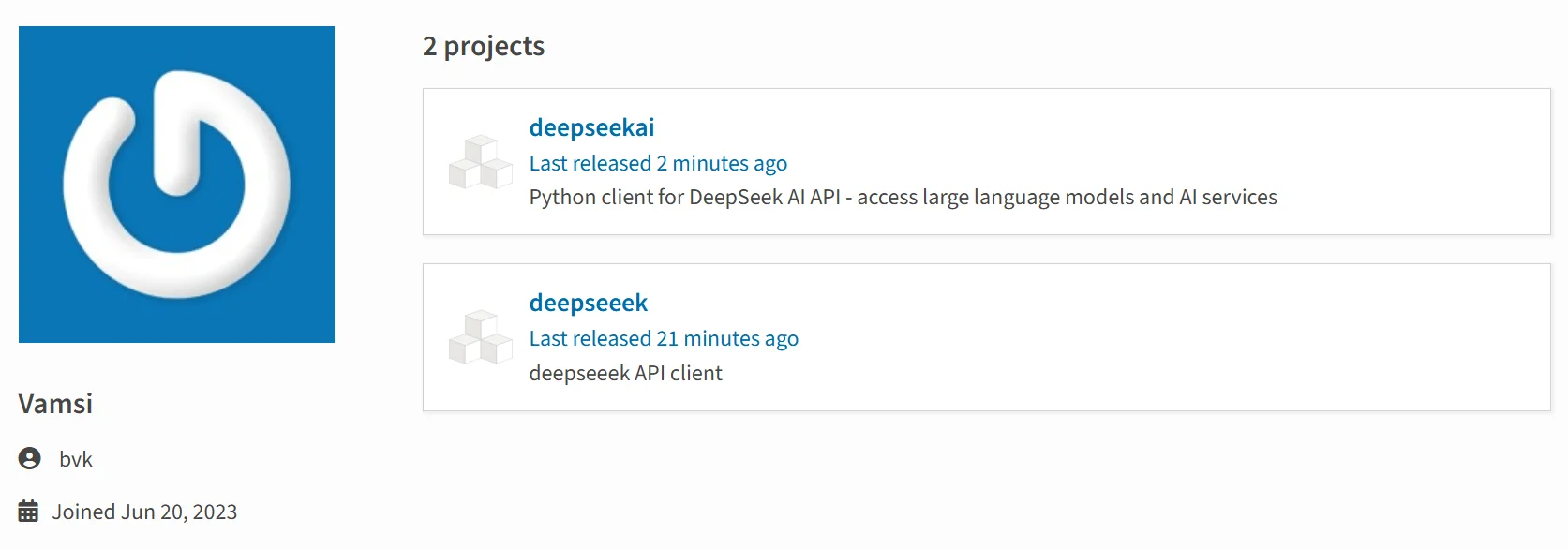

Malicious DeepSeek Packages on PyPI Exposed

Cybersecurity risks around DeepSeek keep surfacing as malicious actors exploit the platform’s growing popularity. Researchers from Positive Technologies recently uncovered a campaign on the Python Package Index (PyPI) involving two fake DeepSeek-related packages, deepseeek and deepseekai. These malicious packages, uploaded by an inactive user account, aimed to steal sensitive system information and environment variables, which often contain credentials and API keys.

The now-quarantined deepseekai project (PT ESC)

Once installed, the malware transmitted stolen data to a command-and-control server using AI-generated code with embedded comments to appear legitimate. Although PyPI administrators swiftly removed the packages within an hour of notification, they had already been downloaded 222 times across various countries, including the United States, China, and Russia.

Below is the IoC list provided by the researchers:

| IoC | Type |
| --- | --- |
| deepseeek | PyPI package |
| deepseekai | PyPI package |
| eoyyiyqubj7mquj.m.pipedream.net | C2 |

For further information about PyPI packages, you can check out our relevant blog post.
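As a rough illustration of how the package IoCs above could be put to use, the following sketch checks whether either flagged package is installed in the current Python environment. The helper names (`normalize`, `find_flagged`, `installed_package_names`) are hypothetical and not part of the researchers' tooling; the name normalization follows the convention PyPI uses for project names.

```python
import re
from importlib.metadata import distributions

# Package names taken from the IoC list published by the researchers.
FLAGGED_PACKAGES = {"deepseeek", "deepseekai"}


def normalize(name):
    """Normalize a project name the way PyPI does (lowercase, runs of -_. become -)."""
    return re.sub(r"[-_.]+", "-", name).lower()


def find_flagged(installed_names, flagged=FLAGGED_PACKAGES):
    """Return the sorted flagged package names that appear in installed_names."""
    flagged_normalized = {normalize(n) for n in flagged}
    installed_normalized = {normalize(n) for n in installed_names}
    return sorted(installed_normalized & flagged_normalized)


def installed_package_names():
    """List the names of distributions visible to the current interpreter."""
    names = []
    for dist in distributions():
        name = dist.metadata.get("Name")
        if name:
            names.append(name)
    return names


if __name__ == "__main__":
    hits = find_flagged(installed_package_names())
    if hits:
        print("Flagged packages installed:", ", ".join(hits))
    else:
        print("No flagged packages found in this environment.")
```

A clean result only covers the environment the script runs in. Because the packages targeted environment variables, any machine where they were ever installed should be treated as having exposed its credentials, even if the packages have since been removed.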

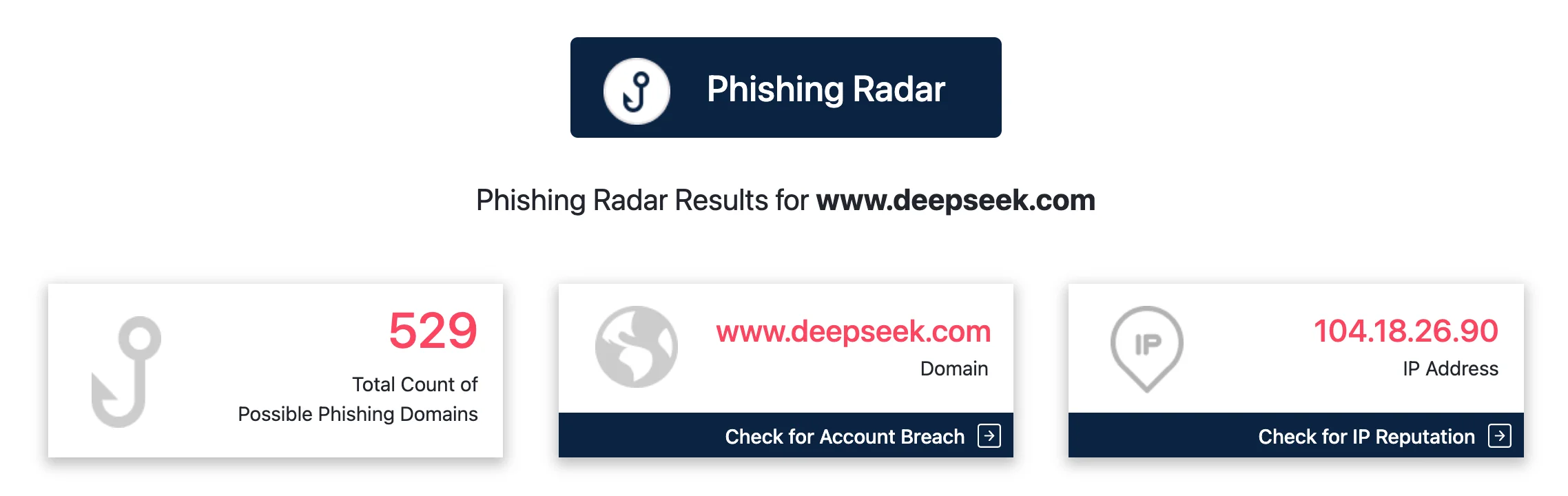

How can organizations and individuals address the phishing domain risks around DeepSeek?

SOCRadar LABS offers a free tool called Phishing Radar, which helps identify potential phishing domains. This tool, designed to protect users and businesses, has so far identified over 520 possible phishing domains associated with DeepSeek. The tool provides actionable insights, such as checking IP reputations and assessing domain security, to prevent falling victim to cybercriminal schemes.

An example from SOCRadar’s Phishing Radar, highlighting the total count of malicious domains linked to DeepSeek and actionable features for protection.

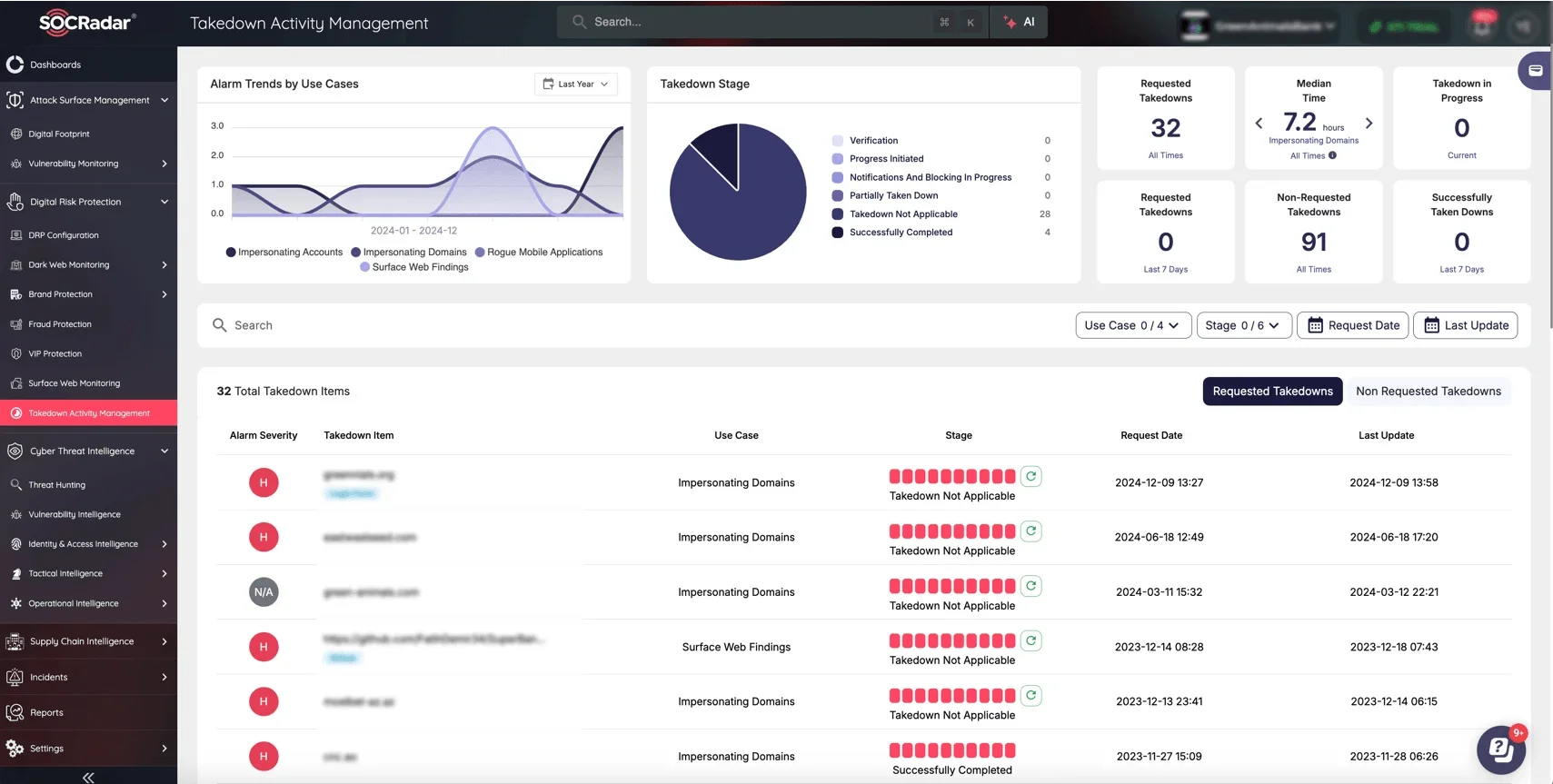

SOCRadar’s Brand Protection module is another valuable resource that helps companies protect their reputation by detecting impersonating domains early. Additionally, SOCRadar’s platform includes a Takedown Request feature, enabling businesses to act quickly against malicious domains and minimize risks effectively.

Takedown Activity Management page on the SOCRadar XTI platform
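To make the idea of lookalike-domain detection more concrete, here is a minimal sketch of one common heuristic: flag a domain if any of its labels contains the brand name or sits within a small edit distance of it. This is not how Phishing Radar works internally, which the article does not describe. The set `OFFICIAL_DOMAINS`, the threshold `max_distance`, and the function names are assumptions chosen for illustration.

```python
# Assumption: deepseek.com is treated as the only official registrable domain.
OFFICIAL_DOMAINS = {"deepseek.com"}
BRAND = "deepseek"


def edit_distance(a, b):
    """Levenshtein distance between two strings (row-by-row dynamic programming)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # deletion
                curr[j - 1] + 1,           # insertion
                prev[j - 1] + (ca != cb),  # substitution (free if chars match)
            ))
        prev = curr
    return prev[-1]


def is_lookalike(domain, brand=BRAND, max_distance=2):
    """Return True if a non-official domain resembles the brand name."""
    domain = domain.strip().lower().rstrip(".")
    for official in OFFICIAL_DOMAINS:
        if domain == official or domain.endswith("." + official):
            return False
    # Inspect every label except the top-level domain.
    for label in domain.split(".")[:-1]:
        compact = label.replace("-", "")
        if brand in compact or edit_distance(compact, brand) <= max_distance:
            return True
    return False


if __name__ == "__main__":
    for candidate in ["deepseek1.com", "deepseekpro.com", "chat.deepseek.com"]:
        print(candidate, is_lookalike(candidate))
```

A heuristic like this produces candidates rather than verdicts. A flagged domain still needs the kind of follow-up checks mentioned above, such as IP reputation and domain security assessment, before it is treated as malicious.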

DeepSeek’s Training Data Exposed Nearly 12,000 Live API Keys

Following recent buzz about security concerns involving DeepSeek, new research has uncovered a significant vulnerability in its training data. A scan of Common Crawl – a widely used dataset for training large language models (LLMs) like DeepSeek – revealed nearly 12,000 live API keys and passwords embedded within the data. This discovery raises serious security questions about how LLMs are trained and the risks associated with generating potentially unsafe outputs.

The study involved scanning 400 terabytes of web data from the December 2024 Common Crawl archive. Key findings included:

- 11,908 active credentials granting access to various services.

- Exposed secrets found on 2.76 million web pages.

- High reuse rate – 63% of secrets appeared multiple times, including one API key that surfaced over 57,000 times across nearly 2,000 subdomains.

The presence of hardcoded credentials in training data is problematic because LLMs, unaware of the validity of such keys, might inadvertently generate insecure code or expose sensitive information when prompted. While training data is just one factor influencing model behavior, this research highlights an urgent need for improved security measures in AI development.

Industry-wide solutions, such as better filtering of training data, enhanced alignment techniques, and security-aware AI tuning, may be necessary to prevent LLMs from reproducing or exposing sensitive information. In the meantime, developers using AI-powered tools should enforce strict security practices, such as avoiding hardcoded credentials and using automated secret-scanning tools to mitigate risks.
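The secret-scanning step can be sketched in a few lines. The example below is a deliberately small illustration, not a substitute for a dedicated scanner: the two patterns (an AWS-style access key ID and a generic quoted assignment to a variable named like a credential) and the function name `scan_text` are assumptions chosen for the example, and real tools use far larger rule sets and verify whether a found key is live.

```python
import re

# Illustrative patterns only; production scanners ship hundreds of rules.
SECRET_PATTERNS = {
    # AWS access key IDs start with "AKIA" followed by 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # A credential-like variable name assigned a quoted literal of 8+ characters.
    "hardcoded_assignment": re.compile(
        r"""(?i)(?:api[_-]?key|secret|token|password)\w*\s*[:=]\s*["']([^"']{8,})["']"""
    ),
}


def scan_text(text):
    """Return (line_number, pattern_name) pairs for lines that look like secrets."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


if __name__ == "__main__":
    sample = (
        'import os\n'
        'api_key = "abcd1234efgh5678"\n'        # hardcoded literal: flagged
        'key = os.environ["API_KEY"]\n'         # read from environment: not flagged
        'aws = "AKIAIOSFODNN7EXAMPLE"\n'        # AWS documentation example key ID
    )
    for lineno, name in scan_text(sample):
        print(f"line {lineno}: {name}")
```

The third sample line shows the safer alternative to hardcoding: the credential is read from the environment at runtime, so nothing sensitive lands in source code that might later be published or crawled.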

What precautions should users take when using DeepSeek?

If you choose to use DeepSeek, consider the following security measures:

- Verify the Domain: Always access DeepSeek through its official website to avoid phishing attempts.

- Be Cautious with Mobile Apps: Download AI applications only from official stores, and check for developer authenticity.

- Use Open-Source Options: Since DeepSeek is open-source, you can host it yourself or use it through trusted platforms like Hugging Face.

- Limit Data Sharing: Be mindful of the personal data you provide and avoid linking unnecessary accounts to DeepSeek.

- Monitor Security Reports: Stay informed about emerging threats and vulnerabilities related to DeepSeek.

- Consider Alternative AI Models: If security is a top priority, using AI models from more transparent and regulated companies may be preferable.

- Apply Security Best Practices: Organizations integrating DeepSeek should conduct rigorous security audits, enforce strict access controls, and monitor data flow to mitigate potential risks.
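For teams that want to automate the first precaution, for example in a link-checking script or a proxy rule, the sketch below verifies that a URL uses HTTPS and that its hostname is the official domain or one of its subdomains. It assumes `deepseek.com` is the official domain; the name `is_official_url` is hypothetical.

```python
from urllib.parse import urlsplit

# Assumption: deepseek.com is the official domain; adjust the set as needed.
OFFICIAL_HOSTS = {"deepseek.com"}


def is_official_url(url, official=OFFICIAL_HOSTS):
    """Return True only for HTTPS URLs hosted on an official domain or subdomain."""
    parts = urlsplit(url)
    if parts.scheme != "https":
        return False
    # .hostname strips any userinfo ("user@") and port from the network location.
    host = (parts.hostname or "").lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in official)


if __name__ == "__main__":
    for url in [
        "https://chat.deepseek.com/",
        "https://deepseekpro.com/",
        "https://deepseek.com.evil.example/login",
        "https://deepseek.com@evil.example/",
    ]:
        print(url, is_official_url(url))
```

Comparing the parsed hostname, rather than searching the URL string for "deepseek.com", is what defeats the last two examples, where the official name appears in the URL but the request would actually go to a different host.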

Conclusion

DeepSeek presents an exciting advancement in AI but comes with significant security risks that users must consider. The cyberattack, the ability to jailbreak the model, and concerns over data security all highlight the importance of vigilance when using AI-driven tools. Additionally, the geopolitical and economic impact of DeepSeek’s rise suggests that the AI industry is facing unprecedented disruption.

As DeepSeek continues to evolve, users must weigh the benefits of access to an advanced AI model against the risks posed by data security concerns and potential misuse. By staying informed and taking proper precautions, users can better protect themselves from potential threats associated with DeepSeek and similar AI platforms. Furthermore, regulatory scrutiny and security research will play a crucial role in shaping the platform’s future, determining whether it becomes a valuable tool or a major cybersecurity liability.