AI vs. AI: The Future of Cybersecurity Battles

As AI chatbots continue to improve, AI-assisted attacks and AI-powered security solutions have become a trending subject in the security industry. The power of AI has set off an arms race between attackers and defenders.

As artificial intelligence and machine learning technologies become more sophisticated, it will become easier for hackers to launch attacks or create malware that defeats traditional security measures. AI-generated malware can perform malicious actions such as data theft, system configuration changes, and unauthorized code execution. It can also be used in more complex operations, such as building botnets or deploying ransomware.

AI-generated malware also has the advantage of being able to evolve and adapt over time, which makes it difficult for security software to identify and remove.

The security community began to recognize AI-powered malware as a new threat after the development of BlackMamba, a proof-of-concept AI malware.

What is BlackMamba Malware?

BlackMamba uses generative AI to produce polymorphic malware. At runtime, a benign executable contacts a high-reputation AI service (OpenAI) and receives synthesized, polymorphic malicious code designed to capture the infected user's keystrokes.

The use of AI in BlackMamba aims to achieve two goals:

- Retrieving payloads from a "benign" remote source rather than from an anomalous command-and-control (C2) server.

- Using generative AI to deliver a unique malware payload each time.

The malware runs the dynamically generated code it receives from the AI inside the context of the benign program by using Python's exec() function. Because the malicious, polymorphic portion of BlackMamba stays in memory, its creators claim that existing endpoint detection and response (EDR) solutions may be unable to detect it.
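Because the loader hands AI-generated text to exec(), one simple defensive heuristic is to flag Python source that calls dynamic-execution built-ins at all. The sketch below uses the standard ast module to find direct calls to exec, eval, and compile. It is an illustration, not how any EDR product works: the function name is hypothetical, legitimate programs also use these calls, and static inspection of a loader does nothing about code that only ever exists in memory.

```python
import ast

# Built-ins that execute code supplied as data at runtime.
DYNAMIC_EXEC_NAMES = {"exec", "eval", "compile"}

def find_dynamic_exec(source: str) -> list[tuple[str, int]]:
    """Return (name, line number) for each direct call to exec/eval/compile."""
    tree = ast.parse(source)  # parse only; nothing in `source` is executed
    hits = []
    for node in ast.walk(tree):
        # Only plain-name calls such as exec(...) are matched; aliased or
        # attribute-based calls (e.g. builtins.exec) are outside this sketch.
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            if node.func.id in DYNAMIC_EXEC_NAMES:
                hits.append((node.func.id, node.lineno))
    # ast.walk is breadth-first, so sort results by line for readability.
    return sorted(hits, key=lambda hit: hit[1])

sample = 'code = fetch_remote()\nexec(code)\n'
print(find_dynamic_exec(sample))
```

Because the source is only parsed, the undefined `fetch_remote()` in the sample is never called.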

Capabilities of Polymorphic Malware

Polymorphic malware is malicious software that can change its code to avoid detection by traditional antivirus solutions. This allows the malware to bypass signature-based detection methods, which rely on fixed patterns to identify known threats.
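To see why fixed patterns break down, consider the simplest form of signature: a hash of the whole file. Real antivirus signatures are usually richer byte patterns, so treat this as a sketch of the principle only. The sample bytes below are harmless placeholder strings, and the "signature database" is hypothetical. Changing a single comment leaves the behavior identical but produces a completely different hash.

```python
import hashlib

def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

# Hypothetical signature database: hashes of samples already known to be bad.
# The "payload" is a harmless placeholder string, not real malware.
known_sample = b"print('placeholder payload')"
SIGNATURES = {sha256_hex(known_sample)}

def signature_match(sample: bytes) -> bool:
    """Classic signature check: flag only exact matches against known hashes."""
    return sha256_hex(sample) in SIGNATURES

# A polymorphic variant: same behavior, but a junk comment changes the bytes.
variant = b"print('placeholder payload')  # junk-7f3a"

print(signature_match(known_sample))  # True
print(signature_match(variant))       # False
```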

This technique can help many types of malware, including viruses, worms, bots, trojans, and keyloggers, evade detection.

Polymorphic malware uses various tactics to evade detection, including code obfuscation, changing identifiable characteristics like file names and types or encryption keys, and behavioral adaptation.

Polymorphic malware poses a significant challenge to traditional security solutions because it can change its appearance and code structure, masquerade as a legitimate process, and evolve rapidly, which can render signature-based detection and proactive defense strategies ineffective.

Preventing AI Malware Attacks

For many years, organizations have relied on traditional security measures such as antivirus software, firewalls, and intrusion prevention systems to protect against malware attacks. However, these solutions are ineffective against malware that can alter its code to evade detection.

This gap leaves systems vulnerable to sophisticated attacks. Defending against polymorphic malware and other AI-powered cyber threats requires new methods built on advanced threat detection and response capabilities, including machine learning and behavioral analytics.
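Behavioral analytics judges what a process does rather than what its bytes look like, so a polymorphic variant that behaves the same way can still stand out. A minimal sketch, assuming a per-process metric (outbound connections per hour) and a z-score threshold of 3, both chosen purely for illustration: an observation is flagged when it sits far above the process's own historical baseline. Production systems use far richer models and many signals at once.

```python
from statistics import mean, stdev

def is_anomalous(baseline: list[float], observed: float, threshold: float = 3.0) -> bool:
    """Flag an observation more than `threshold` standard deviations above
    the mean of its historical baseline (one-sided z-score test).
    `baseline` needs at least two values for stdev() to be defined."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: treat any increase as anomalous.
        return observed > mu
    return (observed - mu) / sigma > threshold

# Hypothetical telemetry: outbound connections per hour for one process.
history = [4, 5, 3, 6, 4, 5, 4, 5]
print(is_anomalous(history, 6))   # False
print(is_anomalous(history, 40))  # True
```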

Although the use of generative AI in malware presents a new challenge, it is one that can be overcome: modern security vendors are aware of it and have the visibility needed to protect systems. Keeping malicious code confined to memory, whether polymorphic or not, is not enough to evade a good endpoint security solution.

One potential countermeasure against deepfakes and other forms of digital manipulation is to add digital watermarks to the outputs of AI systems such as ChatGPT and DALL-E. A digital watermark is a unique identifier embedded in an image, video, or text. Watermarking AI-generated content makes it possible to trace its origin and detect unauthorized use or alteration, which helps protect the integrity of information online.
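To make the idea of an embedded identifier concrete, here is a sketch of least-significant-bit (LSB) watermarking, a classic textbook technique, applied to a flat list of hypothetical 8-bit grayscale pixel values. It is not the scheme used by ChatGPT, DALL-E, or any particular vendor, and LSB marks are fragile: cropping or lossy compression destroys them. Each pixel changes by at most one intensity level, so the mark is invisible to the eye.

```python
def embed_watermark(pixels: list[int], mark: str) -> list[int]:
    """Hide ASCII `mark` in the least significant bits of 8-bit pixel values."""
    bits = [int(b) for ch in mark.encode("ascii") for b in format(ch, "08b")]
    if len(bits) > len(pixels):
        raise ValueError("image too small to hold the watermark")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit  # clear the LSB, then set the mark bit
    return marked

def extract_watermark(pixels: list[int], length: int) -> str:
    """Read back `length` ASCII characters from the pixel LSBs."""
    bits = [p & 1 for p in pixels[: length * 8]]
    chars = [int("".join(map(str, bits[i : i + 8])), 2) for i in range(0, len(bits), 8)]
    return bytes(chars).decode("ascii")

image = list(range(100, 164))         # 64 hypothetical grayscale pixels
stamped = embed_watermark(image, "AI-GEN")
print(extract_watermark(stamped, 6))  # AI-GEN
```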

What is the Future of AI-Powered Cyber Attacks?

While no one can predict exactly what the future holds, threat actors will clearly try to use AI chatbots to create more complex and dangerous forms of malware. These tools also make other attack types possible. For instance, an attacker could use an AI-generated image in a phishing scheme to trick users, or to spread false information to the public.

ChatGPT's creators, like many cybersecurity researchers, are concerned that malicious actors could misuse the technology. With the release of GPT-4, the model behind ChatGPT has become capable of producing more complex code structures.

AI technology can already generate realistic images that persuade people. The photograph below is not real, but at first sight it looks genuine enough to deceive viewers.

Security Risks Associated with AI-Generated Images, Voice, and Text

Machine learning algorithms combined with massive amounts of human-generated content have opened up new possibilities. With this combination, systems can generate realistic images from captions, synthesize speech in a specific person's voice, replace someone's face in a recording as in deepfake videos, or even write an entire article from a simple title prompt.

The Dual Impact of AI in Cybersecurity: Threats and Opportunities

AI-generated malware is a recent development, and researchers are still investigating its full capabilities and the threats it poses. As AI technology advances, attackers will likely exploit these advances to design even more sophisticated and harmful malware.

However, cybercriminals are not the only ones who can benefit from AI bots. Security researchers and other blue team professionals can also use these tools to improve their operations and stay ahead of the latest threats.

Microsoft’s Security Copilot, powered by GPT-4, is a new example of AI’s capabilities in cybersecurity. This tool is specifically designed to assist incident response teams in rapidly detecting and reacting to potential security threats.

With the help of AI, Security Copilot can analyze enormous amounts of data and offer valuable insights into possible security risks. This tool highlights AI’s potential to help defenders stay ahead of cyber attackers in the ever-evolving cybersecurity landscape.

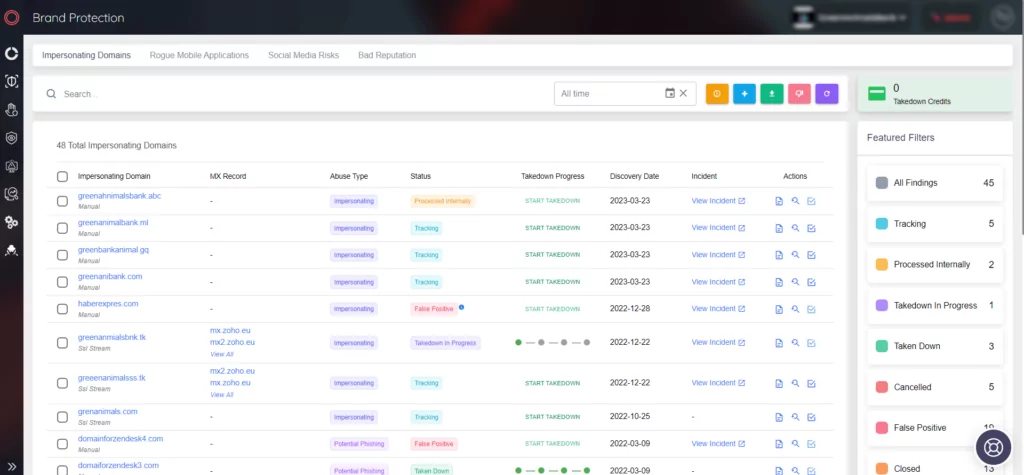

Mitigating ChatGPT’s Potential Security Risk with SOCRadar’s Phishing Domain Detection

ChatGPT's ability to create web pages poses a potential security risk, as attackers could use it to build phishing websites that impersonate legitimate ones to steal sensitive information from unsuspecting victims. However, with the help of SOCRadar's phishing domain detection solution, such websites can be identified and blocked before they cause any harm.
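SOCRadar's actual detection method is not described here, but one common heuristic behind lookalike-domain monitoring is edit distance: a newly observed domain that differs from a protected domain by only a character or two is a likely typosquat. The sketch below implements Levenshtein distance with dynamic programming. The brand domain, candidate list, and distance threshold of 2 are all hypothetical, and this check alone misses tricks such as homoglyphs or extra subdomains.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum single-character insertions, deletions, or substitutions turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                 # deletion
                            curr[j - 1] + 1,             # insertion
                            prev[j - 1] + (ca != cb)))   # substitution
        prev = curr
    return prev[-1]

def is_lookalike(candidate: str, protected: str, max_distance: int = 2) -> bool:
    """Flag domains close to, but not identical to, a protected domain."""
    if candidate == protected:
        return False
    return levenshtein(candidate, protected) <= max_distance

# Hypothetical protected brand domain and newly observed registrations.
brand = "example-bank.com"
for domain in ["examp1e-bank.com", "example-bank.co", "weather-news.org"]:
    print(domain, is_lookalike(domain, brand))
```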

By using SOCRadar, you can protect your online assets and keep your users from falling prey to phishing attacks. Staying vigilant and taking proactive measures is essential to securing your online presence.
