The Challenge of Protecting PII, or When Hunters Become the Hunted: An OpenAI Vulnerability and the Blackmailing of Bug Bounty Hunters

A paradox is emerging: those who protect us from cyber threats are themselves becoming the hunted, because our personal data may be exposed in places we don’t expect. In this article, we examine two cases and the threat landscape they reveal. First, we look at a recently discovered privacy vulnerability in OpenAI’s GPT-3.5 Turbo, through which researchers extracted sensitive information, raising concerns about AI security. We then turn to the world of ethical hacking, where bug bounty hunters have become the targets of blackmail.

The OpenAI Vulnerability

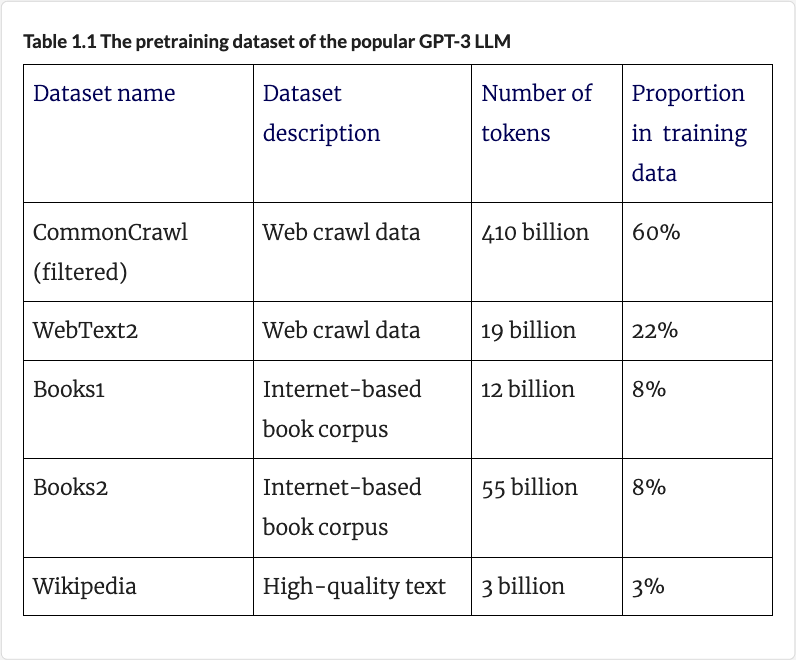

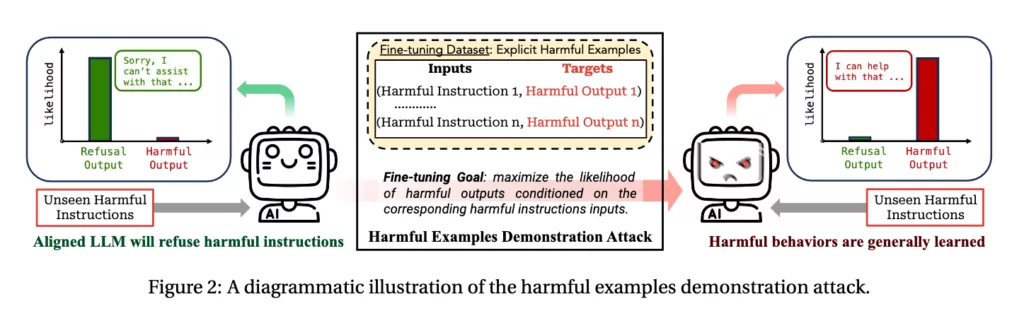

Recent research led by Rui Zhu at Indiana University Bloomington demonstrated a vulnerability in OpenAI’s GPT-3.5 Turbo model that could allow personal information to be extracted. Zhu’s findings point to a significant gap in how the training data of large language models (LLMs) is protected. In response, OpenAI emphasized its commitment to safety, stating that it trains its models to reject requests for private or sensitive information.

Using the model’s fine-tuning interface, which is intended to give the model more expertise in a specific area, the researchers induced it to reveal the work email addresses of a significant number of New York Times employees, with around 80% of the results correct.

In response to a request for comment, an OpenAI spokesperson said: “It is very important to us that the fine-tuning of our models are safe.” The spokesperson added: “We train our models to reject requests for private or sensitive information about people, even if that information is available on the open internet.”

Despite this statement, the researchers were still able to extract information that qualifies as sensitive PII.

The risk extends beyond this single issue. ChatGPT is trained on web data, and with GPT-4 it can also actively browse the web.

Because hackers often share harmful or sensitive information on the clear web, including on Telegram, X, and various publicly accessible hacker forums, there is a real chance that such data ends up in ChatGPT’s training set. That possibility significantly increases the potential security risk.

This demonstrated vulnerability highlights the delicate balance between using vast datasets for AI development and protecting personal information from unintended exposure.

The Case of Bug Bounty Hunters

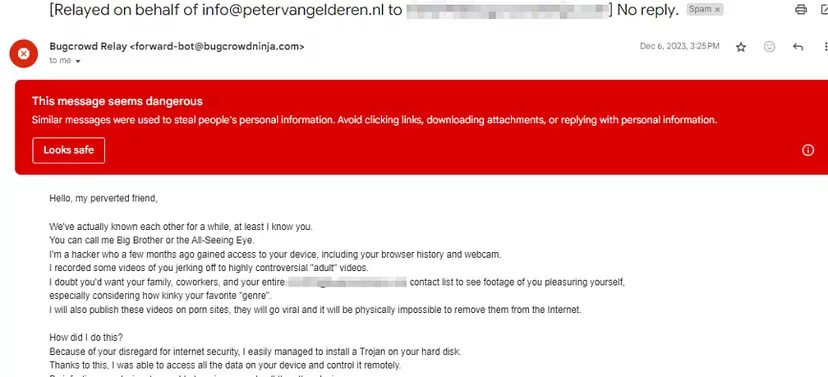

The bug bounty community, known for its skill in identifying and reporting security vulnerabilities, recently faced an unexpected threat. According to a blog post on Medium, attackers have been targeting these ethical hackers with blackmail emails, demanding ransoms by falsely claiming to have planted Trojans on their systems.

According to the post, Bugcrowd uses a relay service that forwards all email received at researchers’ platform accounts to their primary email address, without filtering the content or verifying the sender. The author reports that this relay is being exploited, citing multiple blackmail emails sent from various domains.
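To make the missing safeguard concrete, here is a minimal Python sketch of the kind of check a relay could run before forwarding. It is not Bugcrowd’s implementation: the function names, the `mx.example.org` server name, and the Bitcoin-address heuristic are all hypothetical. The sketch forwards a message only if the relay’s own mail server recorded a DMARC pass in the `Authentication-Results` header (RFC 8601) and no plain-text part contains something shaped like a Bitcoin address. It assumes that inbound server strips any pre-existing `Authentication-Results` headers carrying its own name, as RFC 8601 recommends; otherwise an attacker could simply forge one.

```python
import re
from email import message_from_string
from email.message import Message

# Hypothetical relay-side filter; not Bugcrowd's actual code.
# Hypothetical authserv-id that the relay's own inbound mail server writes into
# Authentication-Results headers. Headers naming any other server are ignored,
# because anyone can add such a header to a message they send.
TRUSTED_AUTHSERV_ID = "mx.example.org"

# Rough shape of legacy (base58) and bech32 Bitcoin addresses. This is a
# heuristic, not a validator: no checksum is verified.
BTC_ADDRESS = re.compile(
    r"\b(?:[13][a-km-zA-HJ-NP-Z1-9]{25,34}|bc1[ac-hj-np-z02-9]{11,71})\b"
)


def dmarc_passed(msg: Message, authserv_id: str = TRUSTED_AUTHSERV_ID) -> bool:
    """True only if our own server recorded dmarc=pass for this message."""
    for header in msg.get_all("Authentication-Results", []):
        # The authserv-id is the first token before the first ';'.
        server, _, results = str(header).partition(";")
        tokens = server.split()
        if not tokens or tokens[0].lower() != authserv_id.lower():
            continue
        if re.search(r"\bdmarc\s*=\s*pass\b", results, re.IGNORECASE):
            return True
    return False


def mentions_btc_address(msg: Message) -> bool:
    """True if any text/plain part contains something shaped like a BTC address."""
    for part in msg.walk():
        if part.get_content_type() != "text/plain":
            continue
        payload = part.get_payload(decode=True) or b""
        if BTC_ADDRESS.search(payload.decode("utf-8", errors="replace")):
            return True
    return False


def should_forward(raw: str) -> bool:
    """Forward only authenticated mail that does not look like a ransom demand."""
    msg = message_from_string(raw)
    return dmarc_passed(msg) and not mentions_btc_address(msg)
```

The Bitcoin pattern is a deliberately crude content filter: it checks shape only, so it can flag innocent strings and miss obfuscated addresses. A real relay would more likely quarantine such messages for review than silently drop them.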

The threat has escalated: investigations found that some of the Bitcoin (BTC) addresses listed in these emails have received ransom payments. New researchers on the platform in particular appear to have been deceived. Because of the nature of the emails, victims may be reluctant to discuss them openly, which leaves a larger group of ethical hackers at risk.

Recommendations and Conclusion

The vulnerability in AI systems such as OpenAI’s GPT-3.5 Turbo and the threats against bug bounty hunters reflect a broader problem in the digital world. Both incidents show how cyber threats are growing more complex and why more sophisticated security measures are needed. They also raise questions about the ethical use of data, the responsibility of tech companies to protect their users, and the potential consequences of AI-driven technologies. This evolving landscape demands a proactive approach to digital security, in which security protocols are continuously updated and strengthened to keep pace with advancing technologies and emerging threats.

Addressing these challenges requires more robust security measures. For AI systems such as OpenAI’s GPT models, this means stronger data protection, especially for data used in fine-tuning. For bug bounty hunters and similar communities, it means stronger email authentication and prompt reporting of suspicious activity. Beyond fixes for these specific incidents, however, the most durable protection comes from a proactive security mindset.
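As one illustration of protecting fine-tuning data, here is a minimal Python sketch that scrubs email addresses and phone numbers from training records before they are uploaded. The chat-style record layout (a `messages` list whose entries carry a `content` string) and the helper names `redact_pii` and `redact_record` are assumptions made for this example. Simple regexes like these miss names and postal addresses, and they over-redact some number sequences such as dates, so a production pipeline would typically add a dedicated PII-detection step.

```python
import re

# Hypothetical helpers for illustration only.
# Simple patterns for two common PII types; they catch only well-formed values.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# A digit, at least 7 digits/spaces/brackets/dots/dashes, then a final digit.
# Deliberately broad: dates like 2023-12-22 are also redacted.
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def redact_record(record: dict) -> dict:
    """Return a copy of a chat-style record with every message's content redacted."""
    return {
        **record,
        "messages": [
            {**message, "content": redact_pii(message["content"])}
            for message in record.get("messages", [])
        ],
    }


if __name__ == "__main__":
    sample = {"messages": [{"role": "user", "content": "Email me at a@b.io"}]}
    print(redact_record(sample))
```

Emails are redacted before phone numbers so that digits inside an address are never half-replaced by the broader phone pattern.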