Top 10 AI Deepfake Detection Tools to Combat Digital Deception in 2025

Deepfake technology has advanced to the point where distinguishing real content from AI-generated media is becoming increasingly difficult. AI can now create highly realistic images, videos, and voices, making it a powerful tool for both innovation and deception. While deepfakes have legitimate uses in entertainment and creative industries, they also pose significant threats, including misinformation, fraud, and identity theft.

In response to these growing concerns, AI deepfake detection tools have been developed to help identify and prevent the spread of manipulated content. These tools use technologies such as machine learning, computer vision, and biometric analysis to detect alterations in digital media.

AI image of AI deepfake detection tools (by DALL-E)

Threat actors, including state-sponsored groups and cybercriminal organizations, are increasingly using AI-generated deepfakes to enhance their attacks. By leveraging deepfake videos, synthetic voices, and AI-generated content, these adversaries can conduct highly convincing social engineering campaigns, spread misinformation, and manipulate public perception.

Nation-state actors linked to Iran, China, North Korea, and Russia are using AI to enhance their cyber operations. These groups use deepfakes for phishing, reconnaissance, and information warfare. Iranian actors, for instance, have been found using AI-generated videos and voices to impersonate officials, while North Korean hackers have reportedly used fake job interview videos to infiltrate Western companies.

As deepfake threats continue to evolve, organizations must adopt AI-powered detection tools to counteract AI-driven cyber deception. Identifying manipulated content before it spreads is crucial to prevent fraud, misinformation, and large-scale cyber threats.

Read more about How Threat Actors Are Leveraging AI for Cyber Operations.

What is an AI Deepfake Detector?

An AI deepfake detector is a software tool that identifies manipulated digital media, including altered images, videos, and synthetic audio. These tools use advanced machine learning algorithms, computer vision, and forensic analysis to detect signs of AI-generated content.

Deepfake detectors analyze various factors to determine whether content has been altered, including:

- Facial inconsistencies (unnatural eye movements, lip-sync mismatches, skin texture anomalies)

- Biometric patterns (blood flow analysis, voice tone variations, and speech cadence)

- Metadata and digital fingerprints (tracing the origin and manipulation history of files)

- Behavioral analysis (patterns in how AI-generated content differs from real human speech and expressions)

These tools are used in cybersecurity, journalism, law enforcement, and identity verification to prevent fraud, misinformation, and digital manipulation.

Why Deepfake Detection is More Important Than Ever

Deepfake technology is being used in increasingly sophisticated fraud schemes, making it harder for individuals and businesses to distinguish real from fake. Scammers have used AI-generated voices to impersonate executives, leading to financial losses, while cybercriminals exploit deepfakes for identity theft and phishing attacks. Businesses that rely on voice authentication and digital verification must now implement detection tools to protect sensitive data and prevent fraud.

Beyond financial risks, deepfakes pose a growing threat to media integrity and public trust. Manipulated videos and AI-generated speeches can be used to spread false information, particularly during elections or political events. With social media accelerating the spread of digital content, ensuring that news organizations and platforms can verify the authenticity of videos and images is more important than ever.

In industries such as banking, law enforcement, and cybersecurity, deepfake detection is crucial for preventing unauthorized access and maintaining secure authentication systems. Many organizations now use AI-powered tools to analyze biometric data, verify identities, and detect synthetic media before it can cause harm. As deepfake technology advances, having reliable detection solutions will be essential for maintaining trust and security in an increasingly AI-driven world.

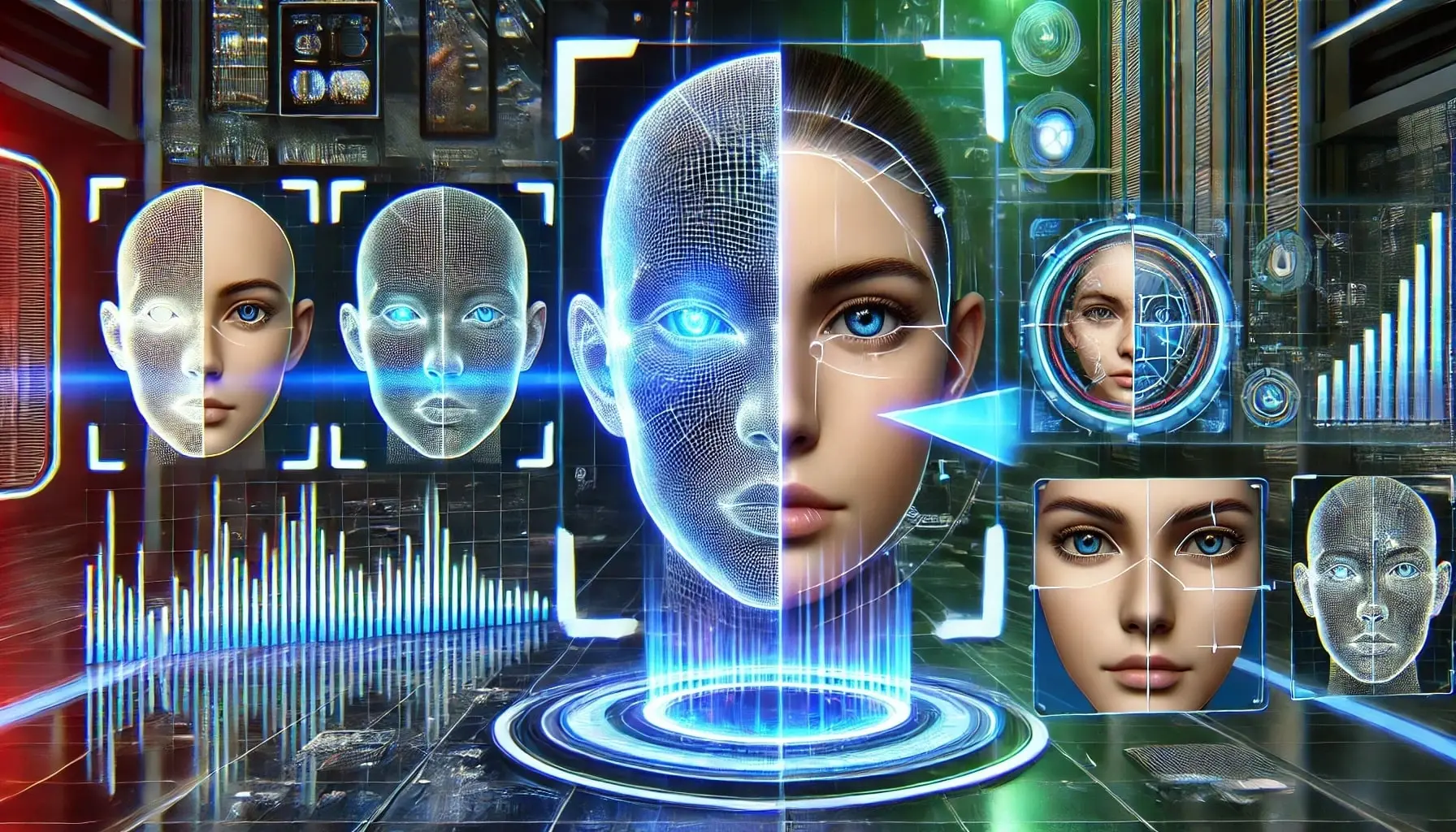

Protecting Financial Data with SOCRadar’s Fraud Protection

With AI-powered fraud on the rise, SOCRadar’s Fraud Protection provides real-time monitoring to detect compromised financial data before it’s exploited. By scanning dark web marketplaces and carding forums, it helps businesses prevent financial losses and respond swiftly to emerging threats.

SOCRadar Fraud Protection module

Unlike traditional fraud detection, SOCRadar offers instant alerts and proactive defense, ensuring customer trust and security against evolving cyber risks. In today’s digital landscape, staying ahead of AI-driven threats is essential.

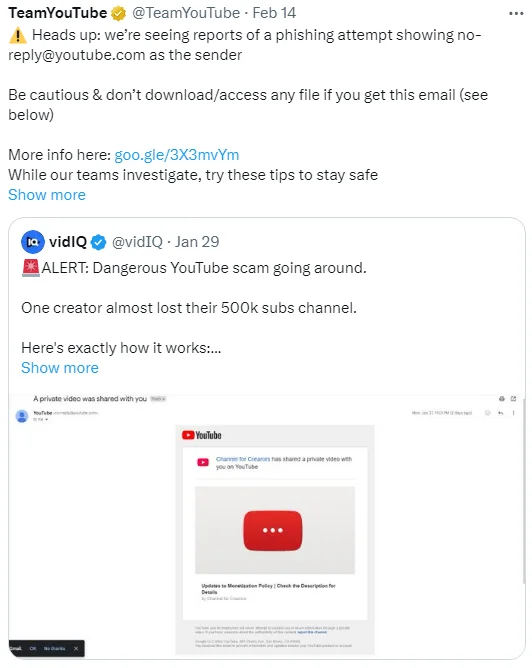

AI-Generated Phishing Attacks: The YouTube CEO Scam

One recent example of how deepfake technology is being weaponized is a phishing scam that targeted YouTube creators. Scammers used an AI-generated video of YouTube’s CEO, Neal Mohan, falsely announcing monetization policy changes. The attackers privately shared the video with targeted users, instructing them to follow a link to “confirm” their compliance with the new policies. In reality, the link led to a phishing site designed to steal login credentials.

YouTube alert regarding the phishing attempt (Source: X)

This case highlights how AI-generated content can create highly convincing scams, making it increasingly difficult for individuals to distinguish real from fake. YouTube responded by warning users not to trust private videos claiming to be from the company and urging them to avoid clicking on unverified links.

Read more about recent deepfake scams and their effects in CISO Guide to Deepfake Scams.

1. OpenAI’s Deepfake Detector

OpenAI has introduced a deepfake detection tool capable of identifying AI-generated images with remarkable accuracy. Specifically, it can detect images produced by OpenAI’s DALL-E 3 with a success rate of 98.8%. However, its effectiveness drops when analyzing images created by other AI tools, currently flagging only 5-10% of them.

The tool is being tested by select disinformation researchers and has not yet been publicly released. It operates as a binary classifier, simply determining whether an image is AI-generated or not. Its high accuracy on DALL-E 3 images is partly due to OpenAI embedding tamper-resistant metadata into those images, following the Coalition for Content Provenance and Authenticity (C2PA) standard. This metadata serves as a “nutrition label” for digital media, improving content traceability.

Beyond detection, OpenAI is also working on watermarking AI-generated sounds and joining industry-wide efforts, such as C2PA, to promote digital content authenticity. While its tool is a promising step forward, experts agree that deepfake detection remains an ongoing challenge, with no single solution guaranteeing foolproof results.

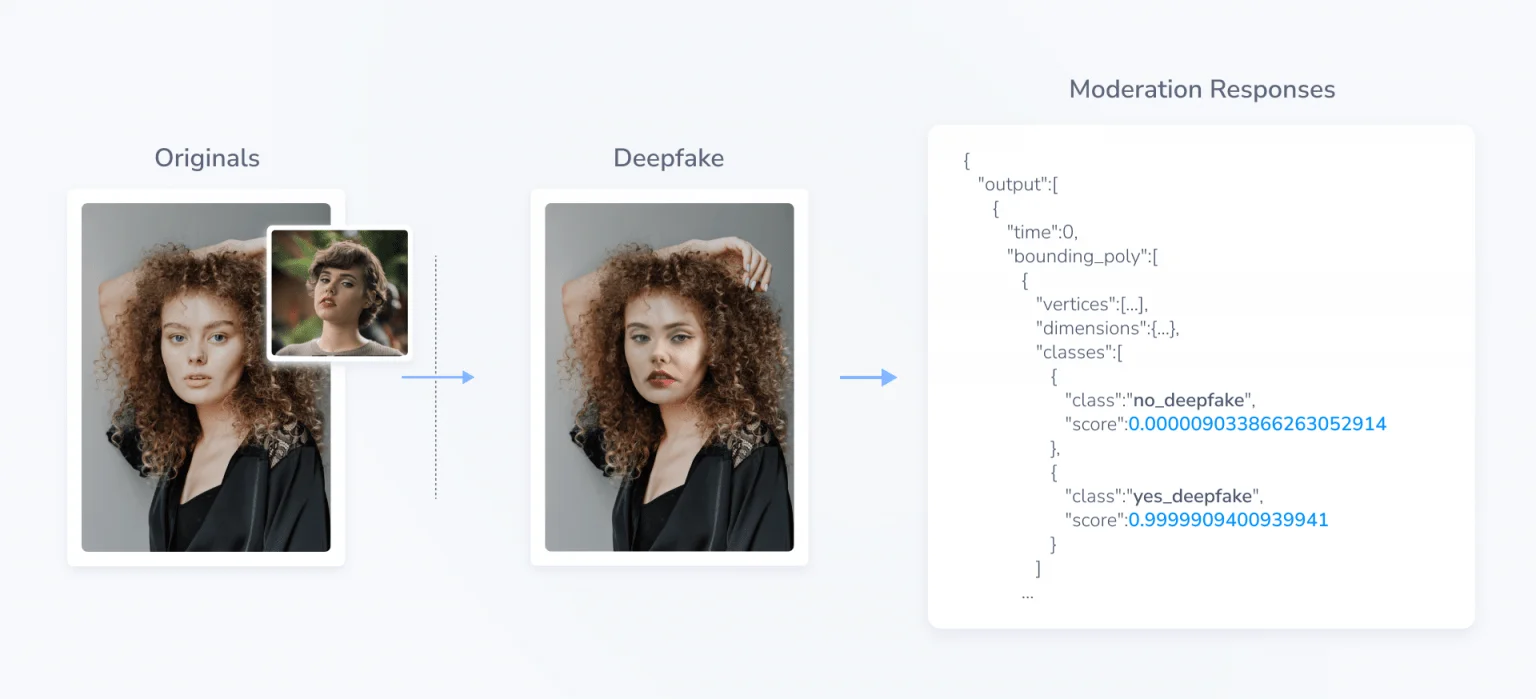

2. Hive AI’s Deepfake Detection

Hive AI has developed a powerful Deepfake Detection API designed to identify AI-generated content across images and videos. This tool is particularly useful for content moderation, helping digital platforms detect and remove deepfake media, including non-consensual deepfake pornography and AI-generated misinformation.

Example of Hive AI’s Deepfake Detection (Source: Hive)

Hive’s model works by first detecting faces in an image or video frame. It then applies a classification system, labeling each face as either “yes_deepfake” or “no_deepfake” with a confidence score. Trained on a diverse dataset of synthetic and real videos—including genres commonly associated with deepfakes—Hive’s technology can spot AI-manipulated content with high accuracy, even when it appears highly realistic to the human eye.
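As a rough illustration of how a platform might act on per-face labels like these, the sketch below assumes a simplified result shape: one record per detected face, with a class label and a confidence score. The `FaceResult` fields, the `flag_deepfake_faces` helper, and the 0.9 threshold are hypothetical and are not taken from Hive's API documentation.

```python
from dataclasses import dataclass


@dataclass
class FaceResult:
    """One detected face with its classifier output (hypothetical shape)."""
    face_id: int
    label: str          # "yes_deepfake" or "no_deepfake"
    confidence: float   # 0.0 to 1.0


def flag_deepfake_faces(faces, threshold=0.9):
    """Return the ids of faces labeled as deepfakes at or above the threshold."""
    return [
        face.face_id
        for face in faces
        if face.label == "yes_deepfake" and face.confidence >= threshold
    ]


# Illustrative values only; a real response would come from the detection service.
faces = [
    FaceResult(0, "no_deepfake", 0.97),
    FaceResult(1, "yes_deepfake", 0.95),
    FaceResult(2, "yes_deepfake", 0.55),
]
flagged = flag_deepfake_faces(faces)
print(flagged)
```

Keeping the threshold as a parameter lets a moderation team trade false positives against missed detections; low-confidence hits such as face 2 could be routed to human review instead of being removed automatically.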

Recognizing the national security implications of deepfakes, the U.S. Department of Defense has invested $2.4 million in Hive AI’s detection tools. The company was selected from a pool of 36 firms to help the Defense Innovation Unit counter AI-powered disinformation and synthetic media threats. While Hive’s technology represents a significant step in deepfake detection, experts note that no tool is foolproof, and adversaries can still find ways to bypass detection.

Beyond defense applications, Hive AI’s Deepfake Detection API is also being integrated into video communication platforms, identity verification services, and NSFW content moderation systems, ensuring that AI-generated deception is caught before it spreads. As deepfake technology evolves, Hive AI continues to update its models, making it one of the leading solutions in the fight against digital forgery.

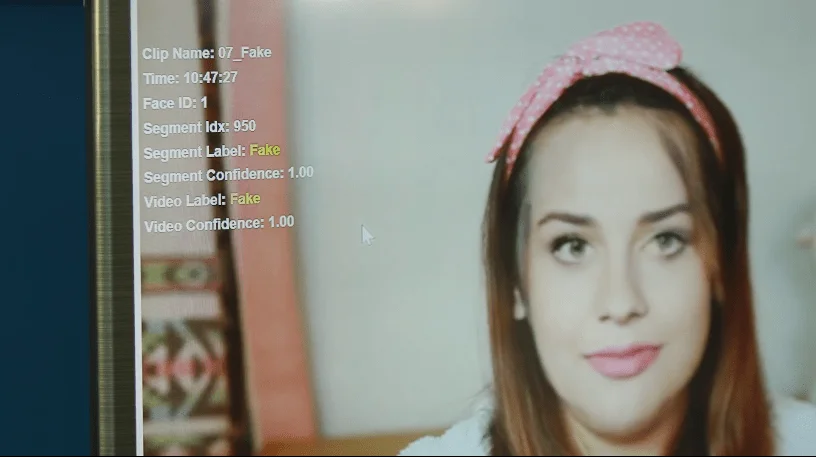

3. Intel’s FakeCatcher

Intel bills FakeCatcher as the world’s first real-time deepfake detector that analyzes biological signals to determine the authenticity of a video. Unlike detectors that rely on inconsistencies in facial movements or pixel anomalies, FakeCatcher uses photoplethysmography (PPG), a technique that detects subtle changes in blood flow from video pixels. This approach allows it to differentiate between real and AI-generated videos within milliseconds.

Fake video detected by FakeCatcher (Source: Intel)

FakeCatcher runs on 3rd Gen Intel® Xeon® Scalable processors, supporting up to 72 real-time deepfake detection streams simultaneously. Intel claims that its model achieves a 96% accuracy rate under controlled conditions and 91% accuracy when tested on “wild” deepfake videos. The system also analyzes eye movement patterns, as deepfakes often display unnatural eye alignment.
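To make the PPG idea more concrete, here is a minimal sketch. It is not Intel's method. It assumes the average green-channel intensity of a face region has already been extracted for each frame, and it estimates the strongest frequency in a typical heart-rate band with a plain discrete Fourier transform. The function name, the 0.7 to 4.0 Hz band, and the synthetic signal are illustrative assumptions.

```python
import cmath
import math


def dominant_frequency(signal, fps, low_hz=0.7, high_hz=4.0):
    """Return the strongest frequency (Hz) within [low_hz, high_hz] via a naive DFT."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [value - mean for value in signal]  # remove the constant (DC) offset
    best_freq, best_power = 0.0, 0.0
    for k in range(1, n // 2 + 1):
        freq = k * fps / n
        if not (low_hz <= freq <= high_hz):
            continue
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * t / n)
            for t, x in enumerate(centered)
        )
        power = abs(coeff) ** 2
        if power > best_power:
            best_freq, best_power = freq, power
    return best_freq


# Synthetic stand-in for per-frame skin intensity: 10 s at 30 fps with a 1.2 Hz oscillation.
fps = 30
frames = [100 + 0.5 * math.sin(2 * math.pi * 1.2 * t / fps) for t in range(fps * 10)]
pulse_hz = dominant_frequency(frames, fps)
print(round(pulse_hz * 60), "bpm")
```

On this synthetic signal the function recovers the 1.2 Hz component, which corresponds to 72 beats per minute. A production detector would presumably go much further, for example by checking whether such signals are consistent across face regions and over time. That part is not shown here.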

Potential applications of FakeCatcher include:

- Media & Broadcasting: Verifying the authenticity of third-party footage in news production.

- Social Media: Screening user-generated videos for AI manipulation.

- Content Creation Tools: Integrating real-time detection into video editing software.

- AI for Social Good: Providing a widely accessible platform for verifying video authenticity.

While FakeCatcher shows promise, independent researchers caution that its effectiveness in real-world scenarios needs further validation. Factors such as video resolution and lighting can impact detection accuracy, and the system does not analyze audio. Nevertheless, Intel’s FakeCatcher represents a major leap forward in deepfake detection by leveraging human biological traits to separate reality from AI-generated deception.

4. Sensity

Sensity AI is a deepfake detection platform that analyzes videos, images, audio, and even AI-generated text. With a reported accuracy rate of 95-98%, Sensity has positioned itself as a leading solution for businesses, government agencies, and cybersecurity firms looking to combat AI-driven fraud and misinformation.

Sensity deepfake detection main page

Key Features:

- Multimodal Detection – Sensity can detect face swaps, manipulated audio, deepfake videos, and AI-generated images at scale.

- Real-Time Monitoring – The platform continuously monitors over 9,000 sources to track malicious deepfake activity.

- KYC & Identity Verification – Integrated with an SDK and Face Manipulation Detection API, Sensity strengthens Know Your Customer (KYC) processes and prevents identity theft through liveness checks and face-matching technology.

- Cross-Industry Applications – Used in law enforcement, media verification, cybersecurity, and digital forensics, offering real-time assessments on digital media.

- User-Friendly & Scalable – Available as a web app, API, and SDK, making it accessible for both developers and non-technical users.

Sensity reports up to 98% accuracy and says it has detected over 35,000 malicious deepfakes in the last year alone. It also offers educational resources to help employees and investigators recognize deepfake threats, making it a one-stop solution for businesses prioritizing digital security.

Sensity AI is reshaping digital media trust in the generative AI age, helping organizations stay ahead of AI-powered threats with cutting-edge detection and real-time monitoring.

5. Reality Defender

Reality Defender is a multi-model deepfake detection platform designed to analyze AI-generated content across video, images, audio, and text. Unlike traditional tools that rely on watermarks or prior authentication, Reality Defender uses probabilistic detection, allowing it to spot deepfake manipulation in real-world scenarios.

This platform is widely used in government, media, and financial sectors to combat voice impersonation, document forgery, and AI-generated disinformation. It has also been adopted by public broadcasting companies in Asia and multinational banks, helping to prevent identity fraud and synthetic media threats.

Reality Defender has gained major industry recognition, securing $15 million in Series A funding and being named a top finalist at the RSAC 2024 Innovation Sandbox, a leading cybersecurity competition. It offers real-time screening tools capable of instantly detecting AI-altered content, making it a valuable defense against AI-powered deception.

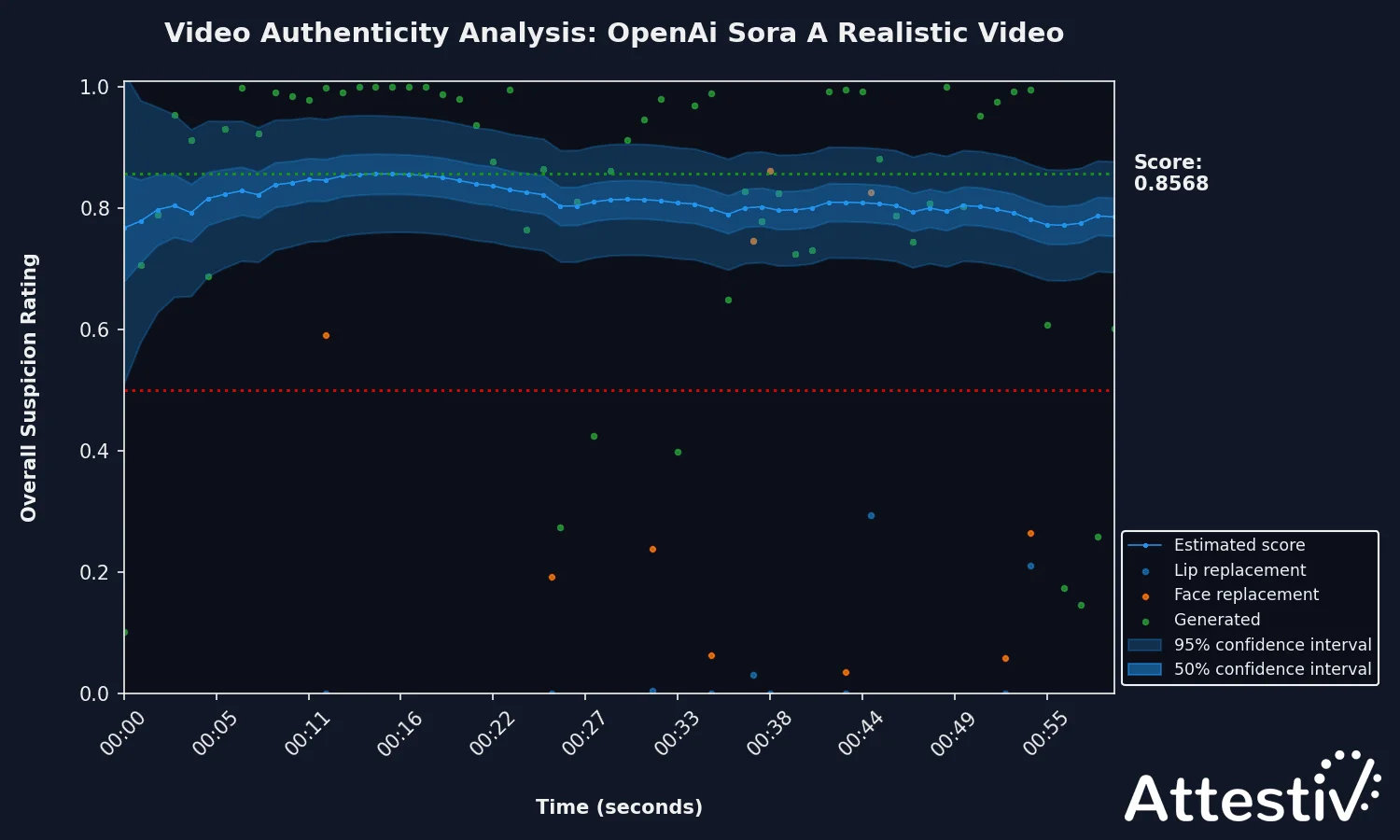

6. Attestiv Deepfake Video Detection Software

Attestiv is an AI-powered deepfake detection platform that specializes in video authentication and forensic analysis. Designed for both businesses and individuals, Attestiv helps detect deepfakes, AI-generated alterations, and suspicious edits in videos.

A video analysis showing that the video is likely a deepfake (Source: Attestiv)

Attestiv’s detection process includes forensic video scanning, which examines various aspects such as face replacements, lip-sync alterations, generative AI content, and suspicious edits. The platform assigns an Overall Suspicion Rating (1-100) to gauge the likelihood of manipulation, allowing users to quickly assess video authenticity.

A key feature of Attestiv is its proprietary fingerprinting technology, which assigns a unique digital signature to each video. These fingerprints are stored on an immutable ledger, ensuring any future modifications can be detected instantly.
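Attestiv's fingerprinting is proprietary, so the following is only a sketch of the general idea under simplifying assumptions: a SHA-256 hash stands in for the fingerprint, and an append-only in-memory dictionary stands in for the immutable ledger. The `FingerprintLedger` class and its method names are hypothetical.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Compute a SHA-256 digest as a stand-in for a media fingerprint."""
    return hashlib.sha256(data).hexdigest()


class FingerprintLedger:
    """Append-only in-memory store; a stand-in for an immutable ledger."""

    def __init__(self):
        self._records = {}

    def register(self, media_id: str, data: bytes) -> str:
        """Record a fingerprint once; existing entries cannot be overwritten."""
        if media_id in self._records:
            raise ValueError(f"{media_id} is already registered")
        self._records[media_id] = fingerprint(data)
        return self._records[media_id]

    def is_unmodified(self, media_id: str, data: bytes) -> bool:
        """Check whether the data still matches the registered fingerprint."""
        return self._records.get(media_id) == fingerprint(data)


ledger = FingerprintLedger()
original = b"example video bytes"
ledger.register("clip-001", original)
print(ledger.is_unmodified("clip-001", original))
print(ledger.is_unmodified("clip-001", original + b"tampered"))
```

A cryptographic hash changes on any byte-level difference, including harmless re-encoding, so a real system may rely on more tolerant fingerprints. The sketch only shows the register-then-verify flow.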

Recently, Attestiv introduced Context Analysis, an advanced feature that examines video metadata, descriptions, and transcripts to uncover deepfake scams and AI-generated manipulations. This enhancement strengthens Attestiv’s ability to validate digital content and prevent misinformation, phishing attacks, and fraudulent media circulation.

The platform is accessible via web app and API integration, with a free version allowing up to five video scans per month. Businesses can upgrade to premium plans for enhanced scan fidelity, faster analysis, and enterprise-level security.

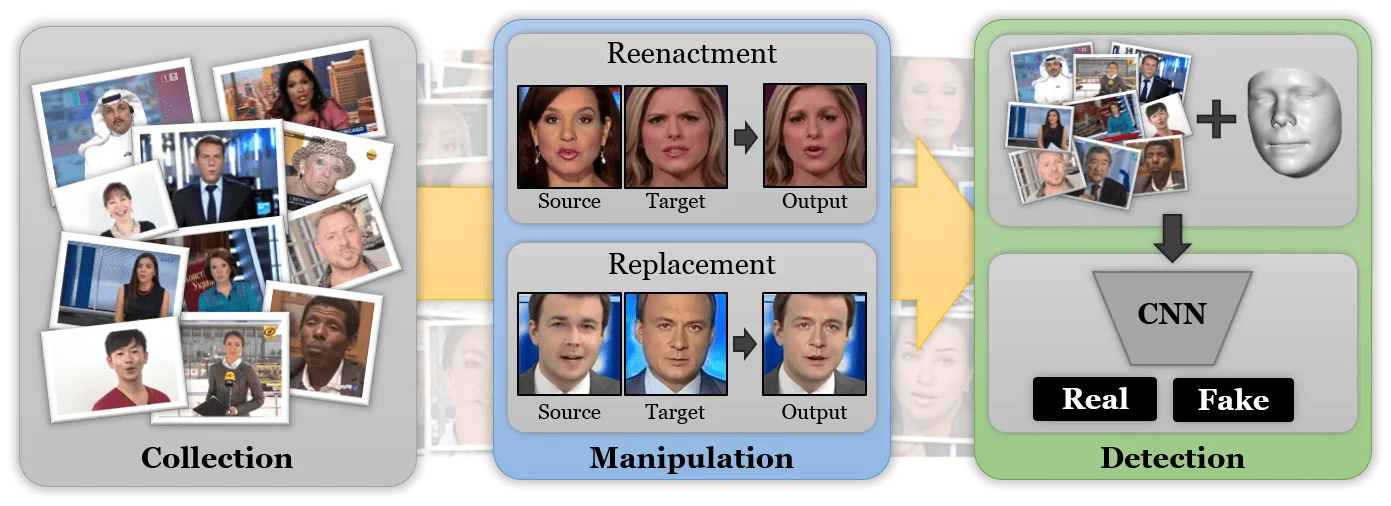

7. FaceForensics++

FaceForensics++ is an open-source benchmark dataset and deepfake detection framework designed to analyze and detect manipulated facial images and videos. It is widely used by researchers, cybersecurity firms, and AI developers to train and evaluate deepfake detection models.

FaceForensics++ work process

Developed by a team of researchers and first presented at ICCV 2019, FaceForensics++ contains over 1.8 million manipulated images and 1,000 original video sequences sourced from YouTube. These videos have been altered using four primary manipulation techniques: DeepFakes, Face2Face, FaceSwap, and NeuralTextures. Additionally, it hosts the Deep Fake Detection Dataset, contributed by Google and Jigsaw, containing over 3,000 manipulated videos from 28 actors.

As an open-source project, FaceForensics++ provides free access to its dataset and tools, allowing developers to improve deepfake detection algorithms. It also offers an automated benchmark to test facial manipulation detection methods under various compression levels, ensuring real-world applicability.
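Evaluating a detector under different compression levels can be sketched as a per-level accuracy calculation. The example below is an assumption-laden illustration and not the project's benchmark code: the `accuracy_by_compression` helper is hypothetical, the predictions are made up, and the level names (`raw`, `c23`, `c40`) are used here only as labels.

```python
from collections import defaultdict


def accuracy_by_compression(records):
    """Compute accuracy per compression level.

    records: iterable of (compression_level, true_label, predicted_label).
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for compression, truth, predicted in records:
        total[compression] += 1
        correct[compression] += int(truth == predicted)
    return {level: correct[level] / total[level] for level in total}


# Made-up predictions for illustration only; these are not benchmark results.
records = [
    ("raw", "fake", "fake"), ("raw", "real", "real"),
    ("c23", "fake", "fake"), ("c23", "real", "fake"),
    ("c40", "fake", "real"), ("c40", "real", "fake"),
]
scores = accuracy_by_compression(records)
for level, accuracy in scores.items():
    print(f"{level}: {accuracy:.2f}")
```

Reporting accuracy separately for each level makes it visible when a model that performs well on pristine footage degrades on heavily compressed video, which is how most media circulates on social platforms.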

With the growing sophistication of deepfake generation, FaceForensics++ continues to expand its dataset and evaluation methods, making it a crucial resource in the fight against AI-generated deception.

8. Pindrop Security

Pindrop Security specializes in audio deepfake detection, offering real-time AI-generated speech analysis for call centers, media organizations, and government agencies. Its flagship deepfake detection tool, Pindrop Pulse, is reported to identify synthetic voices in just two seconds with 99% accuracy, which would make it one of the fastest and most precise solutions available.

Pindrop audio deepfake detection main page

Pindrop’s deepfake detection system is backed by over a decade of voice security research and trained on a proprietary dataset of 20 million audio files, including samples from 350+ deepfake generation tools across 40+ languages. The technology works by analyzing voice patterns, tone shifts, and digital artifacts that are imperceptible to the human ear.

Recently, Pindrop launched Pulse Inspect, a tool that enables users to upload digital audio files to determine whether they contain AI-generated speech. The system provides deepfake scores, helping organizations assess the authenticity of voice recordings and combat misinformation, election-related fraud, and impersonation scams.

Pindrop’s technology has already been used to analyze high-profile deepfakes, such as fake robocalls impersonating President Joe Biden and a manipulated recording of Kamala Harris. By integrating with Pindrop Protect for fraud detection and Pindrop Passport for secure authentication, Pulse offers a comprehensive security suite to safeguard businesses against the growing threat of AI-generated voice fraud.

9. Cloudflare Bot Management

Cloudflare Bot Management is a cloud-based security solution that detects and mitigates malicious bot activity in real time. Leveraging data from 25 million internet properties, it uses machine learning, behavioral analysis, and device fingerprinting to differentiate between legitimate traffic and harmful bots.

Cloudflare’s system scores every request, identifying threats such as credential stuffing, content scraping, inventory hoarding, and DDoS attacks. Unlike traditional bot detection tools, it automatically maintains a list of “good” bots, such as search engine crawlers, to prevent false positives.
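The per-request scoring described above can be pictured as a small decision function. This is a sketch under stated assumptions, not Cloudflare's rule engine: it assumes a score from 1 to 99 where lower values suggest automation, plus a flag for bots on the “good” list. The `decide` function and both thresholds are hypothetical.

```python
def decide(score: int, verified_bot: bool,
           block_below: int = 10, challenge_below: int = 30) -> str:
    """Map a request's bot score to an action (hypothetical policy).

    Assumes lower scores mean the request is more likely automated.
    """
    if verified_bot:
        return "allow"       # known good bots, such as search engine crawlers
    if score < block_below:
        return "block"       # very likely automated
    if score < challenge_below:
        return "challenge"   # uncertain: ask for additional verification
    return "allow"           # likely human traffic


# Illustrative requests: (score, verified_bot)
for score, verified in [(2, False), (2, True), (25, False), (80, False)]:
    print(score, verified, decide(score, verified))
```

Checking the allowlist before the score is what keeps legitimate crawlers from being blocked even though their traffic is clearly automated.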

With simple deployment and no complex configuration, Cloudflare Bot Management offers automated rule recommendations and integrates with existing security infrastructures. It provides detailed analytics and logs, allowing businesses to review traffic patterns and refine security policies.

By blocking automated threats while ensuring a smooth experience for real users, Cloudflare Bot Management helps protect businesses from fraud, data breaches, and competitive abuse with minimal impact on performance.

10. AI Voice Detector

AI Voice Detector is an AI-powered tool designed to detect cloned voices and deepfake audio across various platforms, including YouTube, WhatsApp, TikTok, Zoom, and Google Meet. It also supports multiple languages and accents, making it highly adaptable for global users.

AI Voice Detector file upload

The tool works by analyzing voice patterns, background noise, and audio artifacts that indicate AI manipulation. It also includes an integrated noise and music remover, improving detection accuracy even in short audio clips of under seven seconds. Users can upload audio files or use a browser extension for real-time verification.

AI Voice Detector has identified over 90,000 AI-generated voices, helping individuals and businesses protect themselves from scams, fraud, and misinformation. The tool has been used to detect real cases of AI voice fraud, including a $25.6 million deepfake scam and impersonation attempts in financial fraud cases.

While no AI detection tool is foolproof, AI Voice Detector continues to update its models to stay ahead of evolving voice cloning technologies. Its API integration allows businesses to incorporate deepfake detection into their security workflows, providing an extra layer of protection against AI-powered deception.

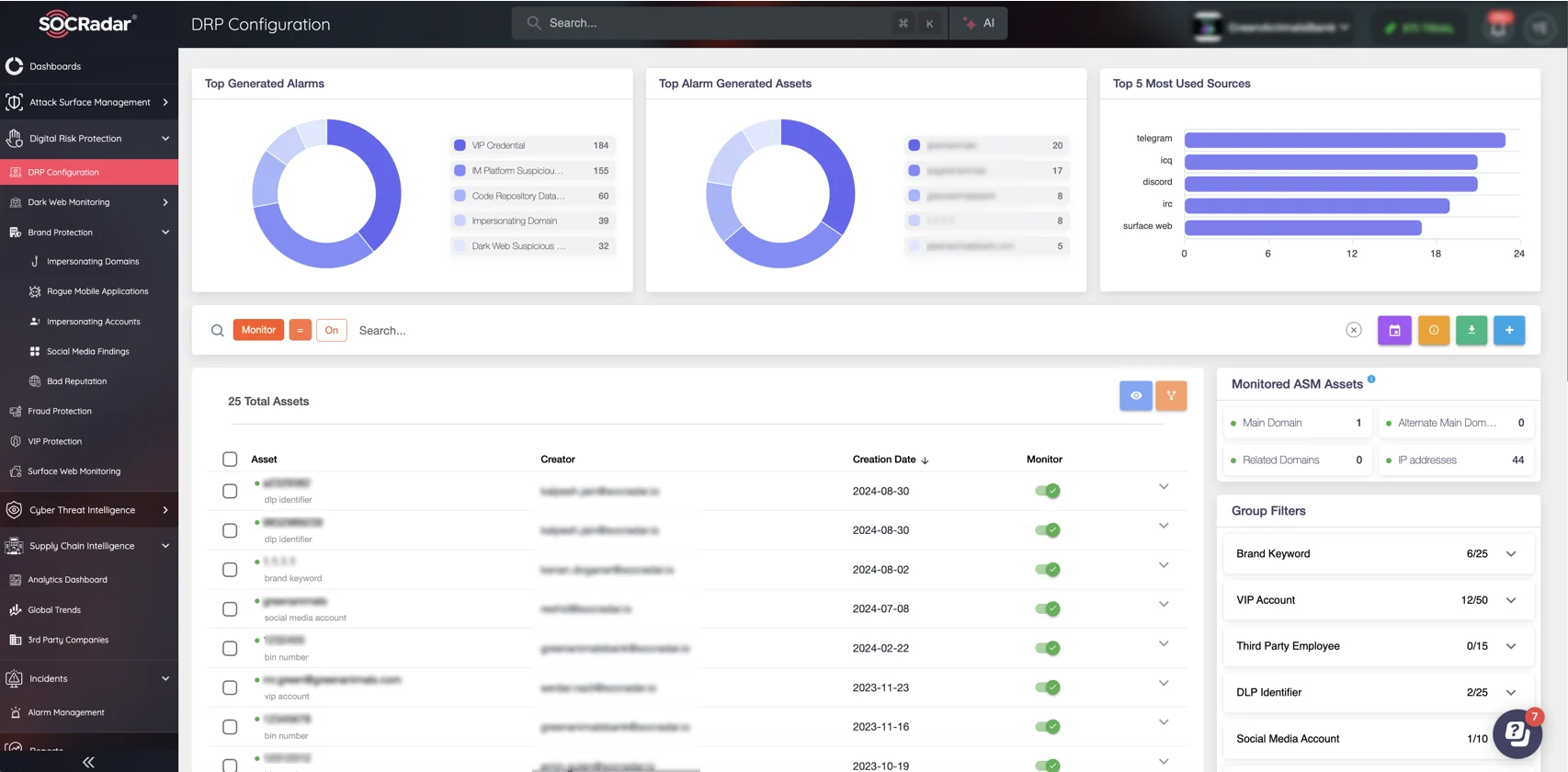

Stay Ahead of Digital Threats with SOCRadar’s Digital Risk Protection (DRP) Module

SOCRadar’s Digital Risk Protection (DRP) module is essential for those combating AI-driven threats like deepfakes, impersonation scams, and data leaks. As cybercriminals increasingly use AI for fraud and misinformation, SOCRadar’s DRP provides real-time monitoring of dark web forums, social media, and underground marketplaces to detect stolen credentials, fake accounts, and AI-generated scams before they spread.

SOCRadar’s DRP dashboard detects scams and digital threats in real time

With automated alerts and advanced threat intelligence, security teams can quickly respond to emerging risks, preventing financial and reputational damage. For those invested in AI detection tools, SOCRadar’s DRP adds an extra layer of defense by identifying AI-powered cyber threats at their source, ensuring businesses stay ahead of evolving digital risks.