Cybersecurity Implications of Deepfakes

2024 is a pivotal year for cybersecurity. As technology advances rapidly, threat actors have adopted newer tactics such as deepfakes, which fuel misinformation, identity theft, and other cyberattacks.

Deepfakes are synthetic media generated with Artificial Intelligence (AI): persuasively realistic but entirely fake content. They are created using a type of machine learning called deep learning, hence the name ‘deepfake’. This technology enables the manipulation of audio, video, and images, resulting in content that is difficult to distinguish from genuine material.

Deepfake technology poses a significant new threat to cybersecurity. The fight against deepfakes will be critical for preserving the integrity of our digital identities and the trust that underpins all cybersecurity. The question is not whether the threat can be completely eliminated, but rather how we can adapt our strategies, systems, and policies to deal with it.

Prevalence and Impact of Deepfakes in Cyberattacks

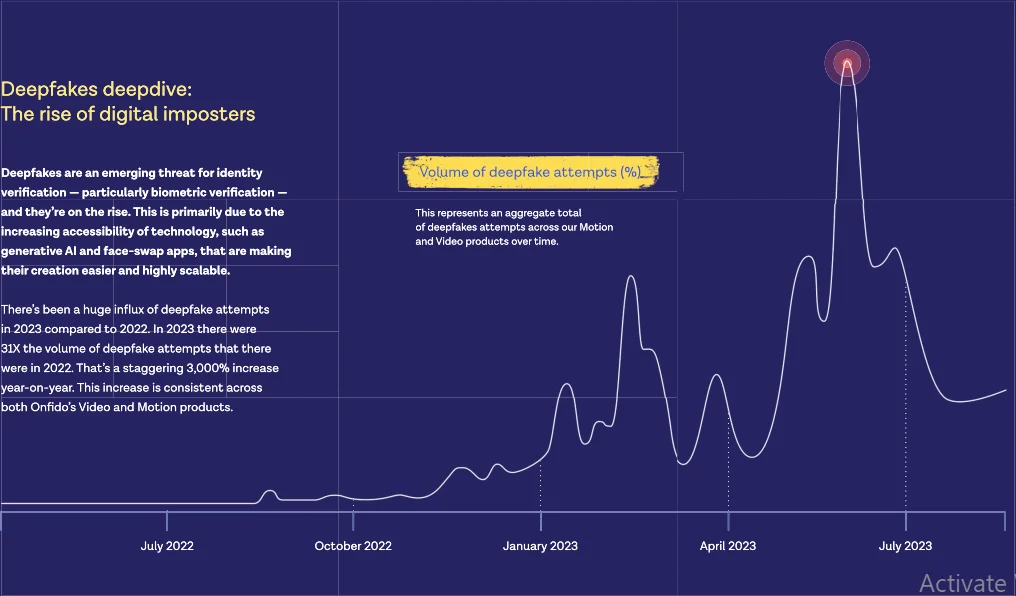

Cybersecurity professionals are reporting a significant increase in the use of deepfakes in cyberattacks. Onfido’s Identity Fraud Report 2024 reveals a striking 3,000% increase in deepfake attempts in 2023 compared to the previous year. Given the rapid evolution of AI technology, it is reasonable to expect that deepfakes will become both more common and more effective in the near future.

Deepfake attempts in recent years (source: Identity Fraud Report 2024)

Raising awareness is the critical first step. Individuals and organizations must prioritize understanding the potential threats that deepfakes pose, as well as the countermeasures required to mitigate these risks. So far, deepfakes have primarily been used to spread misinformation and commit identity theft.

Deepfakes, Misinformation, and Identity Theft

Deepfakes have been used in misinformation campaigns, with AI-generated content used to spread false information. This has been observed in cases where deepfake videos were distributed as part of state-sponsored information campaigns. These deepfakes’ realistic nature makes them a powerful tool for spreading misinformation, with potentially disastrous consequences.

In addition to political misinformation, deepfakes have been used to spread fake news and other forms of disinformation. This includes creating fictitious people and events in order to manipulate public opinion or cause confusion and chaos.

Deepfakes have also been used extensively for identity theft. As people share more personal data online, their growing digital footprint supplies the material needed to create deepfake content that mimics them. Prominent figures are especially exposed: deepfakes can convincingly impersonate a country’s president, a company’s CEO, social media celebrities, and influencers. Here are some examples of how deepfakes can be used for misinformation and identity theft:

- Deepfakes can be used to create fake advertising, in which a video appears to show a celebrity or public figure praising a product or service without their permission. This can trick customers and harm the reputation of the person being impersonated. A recent example is the use of deepfakes of Oprah Winfrey and Piers Morgan to advertise an influencer’s self-help course.

Piers Morgan deepfake video (source: BBC news)

- Deepfakes can be used to commit a variety of frauds, including new account creation and account takeover. Fraudsters can build a fake identity and open a new account to run multiple scams, or take over existing accounts by tricking systems that rely on voice or facial recognition.

- Deepfake vishing (voice phishing) replicates a person’s voice for fraudulent phone calls. Combining it with deepfake video makes the fraud even more convincing, and a successful operation can lead to significant losses. A notable example occurred in Hong Kong, where a finance employee at a multinational company was tricked into transferring $25 million. The fraudsters used deepfake technology to simulate a video call with the company’s chief financial officer.

- Deepfakes can be used for cyber espionage, such as gathering sensitive information. This could include creating a deepfake video of a high-ranking official in order to gain access to secure facilities or systems, or tricking employees into disclosing sensitive information.

Deepfakes in the Election Landscape

Deepfakes are a significant threat in politics, especially as we approach the 2024 elections. A prime example is the general election in Slovakia in September 2023, which served as a stark reminder of how deepfake technology can be abused. During the election, deepfakes were created to simulate voice conversations between members of specific political parties discussing how to rig the votes. This incident tarnished the parties’ reputations, demonstrating the potential for deepfakes to cause significant political damage.

AI illustration for deepfakes in elections

The prevalence of deepfakes has complicated elections, making it more difficult for people to tell what is true and what is not. Even when deepfake content is detected and flagged, there is no guarantee that the damage it has caused can be fully reversed, which makes it harder to preserve election integrity and disseminate accurate information.

For example, consider Indonesia’s 2024 elections. Deepfakes spread across multiple platforms, including manipulated voice recordings of candidates speaking Arabic. These deepfakes were designed to give the impression that the candidates held strong Islamic beliefs, with the aim of swaying public opinion.

Looking ahead, the upcoming Spanish elections are expected to pose similar cybersecurity challenges. For more information on this topic, visit our blog post 2024 Elections: Cybersecurity Challenges in Spain and Beyond.

The Fast Growth of Deepfakes

As the technology behind deepfakes advances, the threat to cybersecurity is expected to grow. Deepfakes have become more advanced and difficult to detect as generative Artificial Intelligence (genAI) and Large Language Models (LLMs) progress. Concerns have been increasing about the potential negative impact of unethical use of deepfakes.

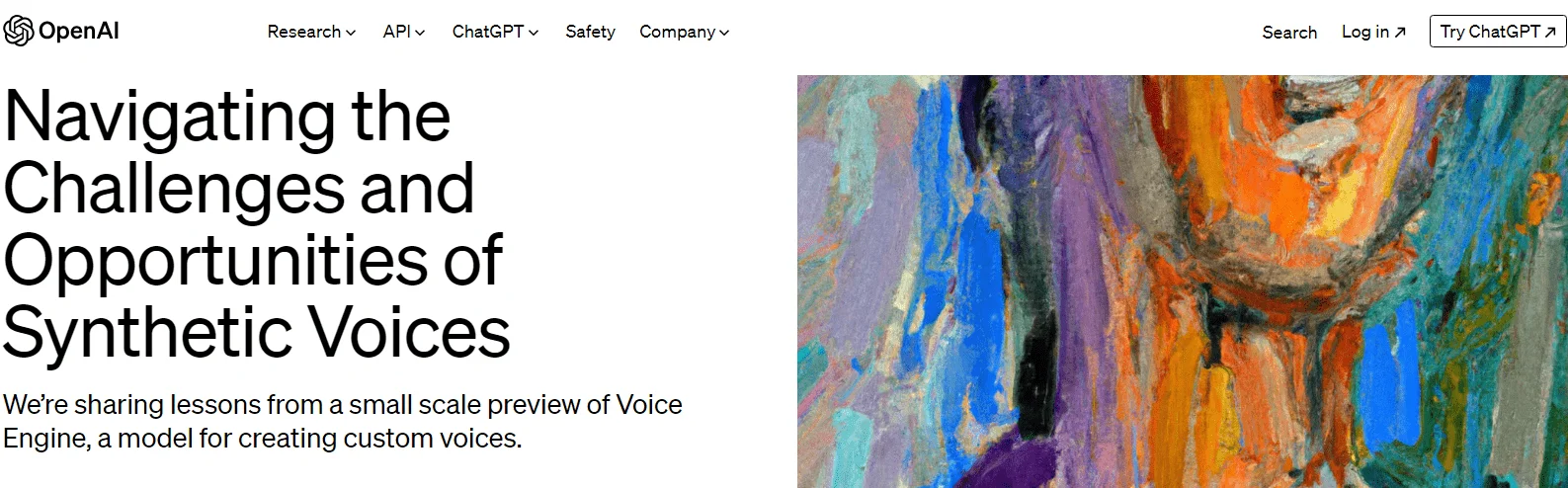

OpenAI’s new model, known as Voice Engine, represents a recent advancement in the field. This innovative technology can replicate someone’s voice after analyzing only a 15-second audio clip, and it does so with remarkable accuracy. This development has the potential to make phishing attempts more effective and easier to carry out, increasing the risk of identity theft.

OpenAI’s Voice Engine model

As the technology behind deepfakes improves, the threat to identity security is expected to increase. Businesses and individuals will need to be proactive in protecting themselves from deepfakes.

Strategies to Defend Against and Combat Deepfakes

Detecting deepfakes can be difficult, especially when the impersonated person appears to behave plausibly. It is even harder when the deception arrives through a medium we trust, such as a phone call from a manager, client, or CEO.

Deepfakes are a serious threat to cybersecurity, and it is critical to develop strategies to defend against and combat them. Here are some of the suggested strategies:

Public Engagement and Open Communication:

Public engagement is a key strategy for combating deepfakes. This involves making it easier for people to understand where a specific piece of information came from, how it was created, and whether it is reliable.

Encourage open communication within an organization to help prevent fraud schemes. If an employee receives a suspicious request, they should feel free to discuss it with their coworkers or supervisors. This can help detect potential deepfake attacks earlier.

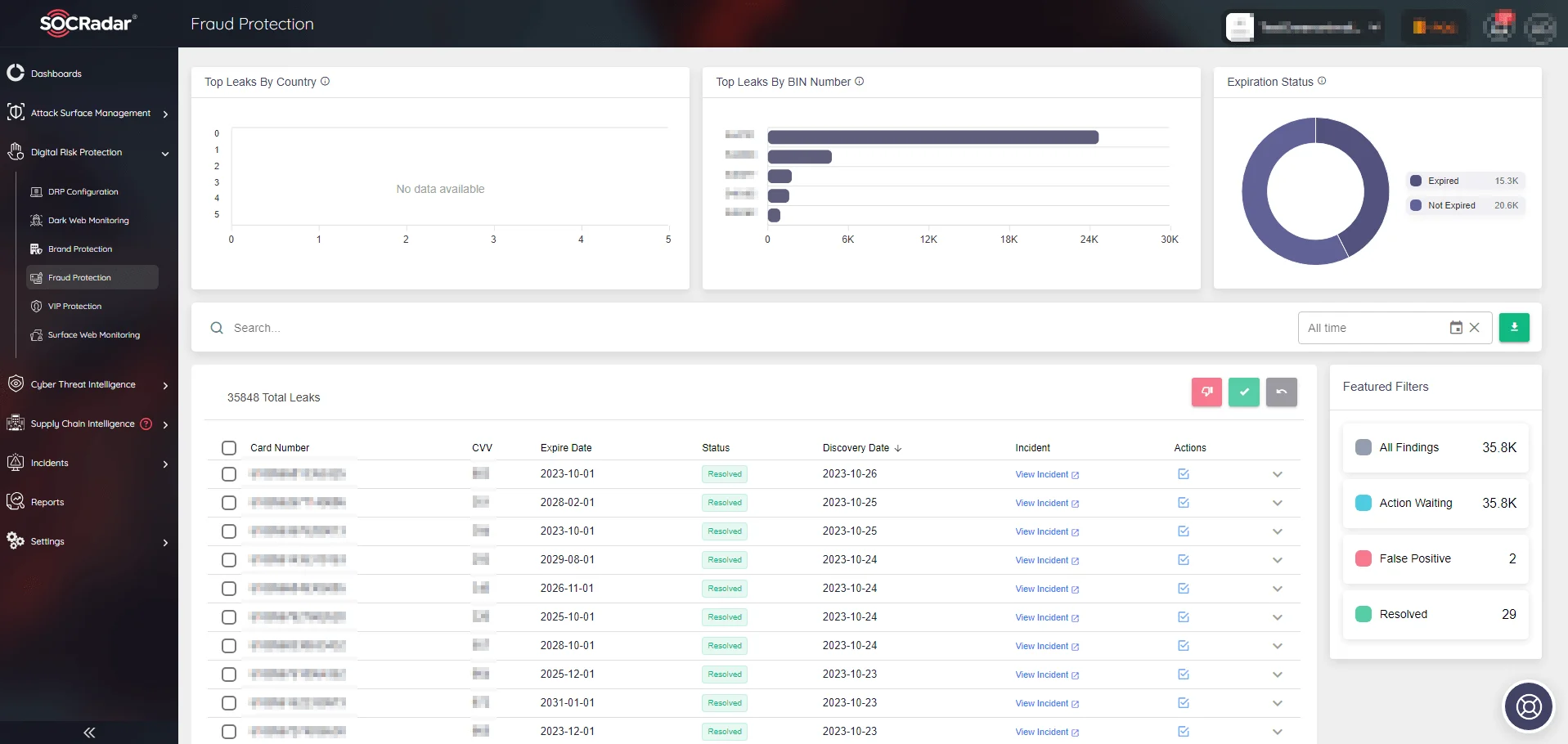

As deepfakes become a more common tool for threat actors to deceive people and commit fraud, it is critical to strengthen your defenses. SOCRadar’s Fraud Protection module constantly monitors the internet, scanning for malicious activity that targets your brand. It uses advanced technology to detect and alert you to fraud attempts on social media, phishing sites, black markets, and communication channels, thereby securing your digital identity.

SOCRadar’s Fraud Protection module

Defined Deepfake Protocol:

Having a defined deepfake protocol can reduce response time and protect the image of a company or public figure. This includes having a clear plan of action for when a deepfake is discovered, such as steps to verify the content, notify relevant parties, and respond publicly if necessary.

Defend with AI:

If attackers use AI on offense, defenders must do the same. AI-based detection tools can identify subtle audio and visual inconsistencies that may indicate a deepfake, which can help flag manipulated content before it causes harm.

Blockchain Technology:

Blockchain technology can provide greater transparency into the lifecycle of content. One approach is to compute a cryptographic hash of a video when it is published and record that hash on the ledger, so that any copy circulating later can be hashed and compared against the recorded value to check its authenticity. This can help to rebuild trust in the digital ecosystem while limiting the reach and scale of deepfake technology.

AI illustration of blockchain in deepfakes
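The hash-and-compare idea can be illustrated with a minimal sketch. It assumes SHA-256 as the hash function and uses a small in-memory hash chain as a stand-in for a real blockchain; the names `Record` and `HashChainLedger` are hypothetical and do not refer to any particular product or platform.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """One ledger entry: a content hash linked to the previous entry."""
    content_id: str
    content_hash: str
    prev_hash: str

    def record_hash(self) -> str:
        payload = json.dumps([self.content_id, self.content_hash, self.prev_hash])
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class HashChainLedger:
    """Hypothetical in-memory stand-in for a blockchain (illustration only)."""
    GENESIS = "0" * 64

    def __init__(self):
        self.records = []

    def publish(self, content_id: str, data: bytes) -> Record:
        # Link the new entry to the hash of the previous one.
        prev = self.records[-1].record_hash() if self.records else self.GENESIS
        record = Record(content_id, hashlib.sha256(data).hexdigest(), prev)
        self.records.append(record)
        return record

    def is_intact(self) -> bool:
        # Every entry must point at the hash of the entry before it.
        prev = self.GENESIS
        for record in self.records:
            if record.prev_hash != prev:
                return False
            prev = record.record_hash()
        return True

    def matches_published(self, content_id: str, data: bytes) -> bool:
        # True only if these exact bytes were published under this ID.
        digest = hashlib.sha256(data).hexdigest()
        return any(
            r.content_id == content_id and r.content_hash == digest
            for r in self.records
        )


ledger = HashChainLedger()
ledger.publish("clip-1", b"original video bytes")
print(ledger.matches_published("clip-1", b"original video bytes"))
print(ledger.matches_published("clip-1", b"manipulated video bytes"))
```

Note that an exact hash only matches byte-for-byte identical files: a legitimately re-encoded or resized copy would also fail the comparison, so this check confirms that a file is the published original rather than proving that a mismatching file is a deepfake.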

Multifactor Authentication (MFA):

Establishing a Multifactor Authentication (MFA) process can help to prevent attacks. This could include verbal confirmation and internal approval steps, which add an extra layer of security and make it more difficult for deepfakes to succeed.
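As a rough sketch of what an internal approval step might look like, the example below releases a transfer only after enough people other than the requester have confirmed it over a channel different from the one the request arrived on. The threshold, approval counts, channel names, and the `TransferRequest` and `Approval` types are all hypothetical values chosen for illustration, not a recommended policy.

```python
from dataclasses import dataclass, field

# Hypothetical policy values, chosen only for illustration.
HIGH_VALUE_THRESHOLD = 10_000
APPROVALS_FOR_HIGH_VALUE = 2
APPROVALS_FOR_LOW_VALUE = 1


@dataclass(frozen=True)
class Approval:
    approver: str
    channel: str  # e.g. "callback_phone", "in_person", "ticket_system"


@dataclass
class TransferRequest:
    requester: str
    amount: float
    request_channel: str  # channel the request arrived on, e.g. "video_call"
    approvals: list = field(default_factory=list)


def independent_approvers(request):
    """Approvers other than the requester who confirmed on a different channel."""
    return {
        a.approver
        for a in request.approvals
        if a.approver != request.requester and a.channel != request.request_channel
    }


def is_release_allowed(request):
    """Allow the transfer only once enough independent approvals exist."""
    required = (
        APPROVALS_FOR_HIGH_VALUE
        if request.amount >= HIGH_VALUE_THRESHOLD
        else APPROVALS_FOR_LOW_VALUE
    )
    return len(independent_approvers(request)) >= required


request = TransferRequest("ceo@example.com", 25_000_000, "video_call")
request.approvals.append(Approval("controller@example.com", "callback_phone"))
print(is_release_allowed(request))  # one independent approval is not enough here
```

The point of the design is that a convincing voice or face on a single call cannot complete the transaction on its own: confirmation has to come through a second channel the attacker does not control.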

Zero Trust Maturity Model:

The Cybersecurity and Infrastructure Security Agency (CISA) publishes the Zero Trust Maturity Model, which can help mitigate deepfake attacks. Zero trust presumes that no user, device, or request, whether internal or external, is trustworthy until it has been verified. Because a familiar voice or face alone does not grant access, this approach can limit the harm a successful impersonation can cause.

Zero Trust Maturity Model page on the CISA website
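The "deny until verified" principle can be sketched in a few lines. This is only an illustration of the idea, not part of CISA’s model: the `AccessRequest` fields and the `evaluate` function are hypothetical, and a real deployment would draw these signals from identity, device, and policy systems.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    user_authenticated: bool
    mfa_passed: bool
    device_compliant: bool
    resource: str
    permitted_resources: frozenset


def evaluate(request):
    """Return (allowed, reason). Access is denied unless every check passes."""
    checks = [
        (request.user_authenticated, "identity not verified"),
        (request.mfa_passed, "second factor missing"),
        (request.device_compliant, "device not compliant"),
        (request.resource in request.permitted_resources, "resource not permitted"),
    ]
    for passed, reason in checks:
        if not passed:
            return False, reason
    return True, "all checks passed"


request = AccessRequest(
    user_authenticated=True,
    mfa_passed=False,
    device_compliant=True,
    resource="payments",
    permitted_resources=frozenset({"payments"}),
)
print(evaluate(request))
```

Network location is deliberately not one of the inputs: a request coming from inside the organization gets no shortcut, which is the property that matters when an attacker is impersonating an insider.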

Conclusion

In the fast-paced digital world of 2024, the rise of deepfakes has added another layer to cybersecurity threats. From misinformation campaigns to identity theft, the consequences of this innovative technology are far-reaching. Not only are fraudsters evolving, but the technology behind deepfakes is also rapidly advancing, with tools like OpenAI’s Voice Engine making deception easier and more convincing than ever.

However, as daunting as the threat of deepfakes may appear, it is not insurmountable. We can significantly reduce the risks posed by deepfakes by raising awareness, implementing defensive strategies, leveraging the power of artificial intelligence, and encouraging open communication.

The rise of deepfakes highlights the importance of constant vigilance and proactive cybersecurity measures. As we move further into the digital age, our cybersecurity strategy must be as dynamic and adaptable as the threats we face.