CISO Guide to Deepfake Scams

Initially perceived as a novelty, deepfakes have evolved into sophisticated tools for fraud, misinformation, and manipulation. Created with artificial intelligence and machine learning techniques that produce highly realistic fake videos, images, and audio, deepfakes pose a serious threat to organizations and individuals worldwide.

The realism of AI-generated video, images, and audio was once limited by processing time and available hardware capacity. As models and hardware have improved, content that once took hours or days to produce can now be created in a matter of minutes on high-end hardware.

From Ukraine’s AI-generated foreign ministry spokesperson to deepfake cryptocurrency scams featuring Singapore’s prime minister, AI-generated content is clearly being used more and more for both legitimate and illicit purposes.

As the technology advances, so do the threats it poses, and we may be entering a dystopia where we can no longer trust what we see with our own eyes or hear with our own ears. This has profound implications for trust in media, public figures, and personal relationships.

“What is real?”, a reference to the film The Matrix – Source: Ars Technica

The Rising Threat of Deepfakes

Recent incidents show how severe and costly this threat has already become.

ARUP Defrauded of $25 Million in Deepfake Scam

ARUP Headquarters – Source: ESG News

In a case disclosed by Hong Kong police in February 2024, ARUP, a British multinational design and engineering firm, was defrauded of $25 million (HK$200 million) in a highly elaborate deepfake scam. An employee in the firm’s Hong Kong office joined a video conference in which fraudsters used AI-generated video and audio to impersonate ARUP’s UK-based CFO and several other colleagues. Convinced by the apparent authenticity of the people on the call, the employee made a series of transfers to fraudulent bank accounts. The funds were swiftly moved offshore, leaving little chance of recovery.

The incident exposed significant weaknesses in ARUP’s verification processes. The employee had initially been suspicious of the request, which first arrived as a message, but set those doubts aside because the people on the video call looked and sounded like real colleagues. In response, ARUP has committed to strengthening its security measures and is reviewing its verification protocols. The company aims to implement multifaceted authentication processes, including biometrics and AI-based anomaly detection, to guard against future attacks. The case shows why cybersecurity strategies must keep pace with evolving digital threats.

Deepfake Video of Singapore’s Prime Minister

A screenshot from the deepfake video – Source: CNA

In December 2023, a deepfake video surfaced depicting Singapore’s Prime Minister Lee Hsien Loong promoting a cryptocurrency scam, and it spread rapidly online. In the manipulated video, Prime Minister Lee appears to be interviewed by a presenter from the Chinese news network CGTN. They discuss an investment opportunity purportedly endorsed by the Singapore government, which they describe as a “revolutionary investment platform designed by Elon Musk.” The video ends with the presenter urging viewers to click a link, register for the platform, and earn “passive income.”

The video appears to have been altered from CGTN’s actual interview with Prime Minister Lee in Singapore in March 2023. This incident raised significant concerns regarding the potential misuse of deepfake technology for spreading false information and deceiving the public. The sophisticated manipulation techniques used in the video underscored the need for enhanced measures to effectively detect and counter such malicious content. Authorities swiftly responded to the incident, emphasizing the importance of media literacy and cybersecurity awareness in combating the proliferation of deepfake media.

Following the incident, Prime Minister Lee Hsien Loong shared a warning video on his Facebook account, stating:

“On top of mimicking my voice and layering the fake audio over actual footage of me making last year’s National Day Message, scammers even synced my mouth movements with the audio.

This is extremely worrying – people watching the video may be fooled into thinking that I really said those words.”

Around the same time as the deepfake video involving Prime Minister Lee Hsien Loong, another fake video emerged, this time featuring Deputy Prime Minister Lawrence Wong endorsing an investment scam.

A screenshot from the deepfake video – Source: ANN

The video’s spread highlighted the vulnerability of public figures to exploitation through manipulated media and underscored the urgency of implementing robust authentication mechanisms for digital content. Singaporean authorities swiftly launched investigations into the origin of the video and intensified efforts to educate the public about the risks associated with deepfake technology. Additionally, this incident prompted discussions on regulatory frameworks aimed at addressing the growing threat of deepfake manipulation in the digital landscape.

Deepfake Robocall Targets New Hampshire Primary Voters

In January 2024, days before the New Hampshire presidential primary, an AI-generated robocall featuring a synthetic imitation of President Joe Biden’s voice was used in an attempt to suppress turnout. The audio deepfake urged voters to skip the primary, telling them to “save your vote” for the November general election. The campaign was orchestrated by a political consultant who used AI voice-cloning technology to produce highly realistic audio.

US President Joe Biden speaking in the East Room of White House – Source: The Seattle Times

The robocall exploited the perceived authenticity of Biden’s voice, causing confusion and mistrust among voters. Because the audio was so convincing, recipients found it difficult to recognize the message as fraudulent. The incident revealed significant vulnerabilities in the communication systems used during elections. In response, the New Hampshire Attorney General’s office and the Federal Communications Commission (FCC) investigated and traced the robocall’s origins, and officials emphasized the importance of media literacy and cybersecurity awareness in combating the misuse of deepfake technology in political campaigns.

In February 2024, the FCC ruled that AI-generated voices count as “artificial” voices under the Telephone Consumer Protection Act, which makes robocalls using voice-cloning technology generally illegal without the called party’s prior consent. Authorities also highlighted the need for robust countermeasures, such as AI-based anomaly detection systems, to identify and mitigate the risks posed by deepfakes. The case shows that protecting the integrity of elections requires continued advances in both cybersecurity and public awareness.

How Deepfakes Work

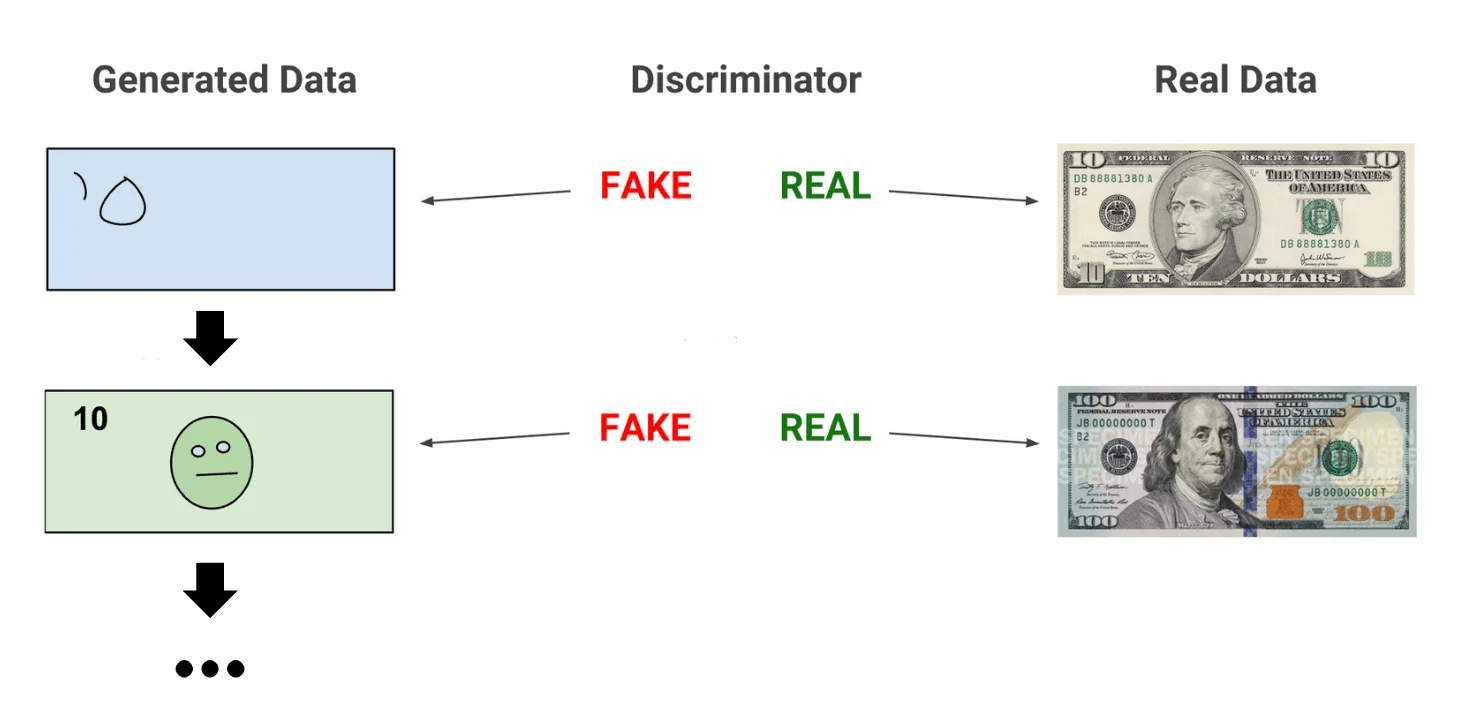

The technology behind deepfakes relies on deep learning, a specialized branch of artificial intelligence. Two architectures are central to the process: Convolutional Neural Networks (CNNs) and Generative Adversarial Networks (GANs), which work together to analyze and generate lifelike images and videos.

CNNs excel at recognizing and reproducing patterns in images, such as facial features, and provide the recognition capability that deepfake creation depends on. GANs, however, are the real powerhouse behind realistic synthetic media. A GAN consists of two neural networks: a generator and an adversary, usually called the discriminator. The generator turns random input into new samples intended to mimic the characteristics of the training data, while the discriminator is trained on real examples to tell authentic content apart from generated content.

How GANs work – Source: developers.google

The discriminator scores each generated sample on how likely it is to be real. That judgment is fed back to the generator as a training signal, and the generator adjusts itself to produce more convincing output, while the discriminator keeps learning to spot the improved fakes. This adversarial loop continues until the discriminator can no longer reliably distinguish generated content from real content.
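To make the generator and discriminator loop concrete, here is a deliberately tiny Python sketch. It is not a deepfake model: a two-parameter generator learns to imitate samples from a one-dimensional Gaussian while a logistic-regression discriminator tries to tell real samples from generated ones. The target distribution N(4, 0.5), the learning rate, the batch size, and all class and function names are illustrative assumptions; real deepfake GANs use deep convolutional networks built with frameworks such as PyTorch or TensorFlow.

```python
import math
import random


def sigmoid(t: float) -> float:
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


class TinyGAN:
    """Toy 1-D GAN: generator G(z) = a*z + b, discriminator D(x) = sigmoid(w*x + c)."""

    def __init__(self) -> None:
        self.a, self.b = 1.0, 0.0  # generator parameters
        self.w, self.c = 0.0, 0.0  # discriminator parameters

    def generate(self, z: float) -> float:
        return self.a * z + self.b

    def discriminate(self, x: float) -> float:
        # Probability the discriminator assigns to "x is real".
        return sigmoid(self.w * x + self.c)

    def d_loss(self, real: list[float], noise: list[float]) -> float:
        # Binary cross-entropy: real samples labelled 1, generated samples labelled 0.
        fake = [self.generate(z) for z in noise]
        total = -sum(math.log(self.discriminate(x)) for x in real)
        total -= sum(math.log(1.0 - self.discriminate(x)) for x in fake)
        return total / (len(real) + len(fake))

    def g_loss(self, noise: list[float]) -> float:
        # Non-saturating generator loss: the generator wants D(G(z)) close to 1.
        return -sum(math.log(self.discriminate(self.generate(z))) for z in noise) / len(noise)

    def d_step(self, real: list[float], noise: list[float], lr: float) -> None:
        # One gradient-descent step on d_loss with respect to w and c.
        fake = [self.generate(z) for z in noise]
        gw = gc = 0.0
        for x in real:  # d/ds of -log(sigmoid(s)) is sigmoid(s) - 1
            g = self.discriminate(x) - 1.0
            gw += g * x
            gc += g
        for x in fake:  # d/ds of -log(1 - sigmoid(s)) is sigmoid(s)
            g = self.discriminate(x)
            gw += g * x
            gc += g
        n = len(real) + len(fake)
        self.w -= lr * gw / n
        self.c -= lr * gc / n

    def g_step(self, noise: list[float], lr: float) -> None:
        # One gradient-descent step on g_loss with respect to a and b.
        # The gradient flows through the discriminator's weight w, which is how
        # the adversary's judgment reaches the generator.
        ga = gb = 0.0
        for z in noise:
            g = self.discriminate(self.generate(z)) - 1.0
            ga += g * self.w * z
            gb += g * self.w
        self.a -= lr * ga / len(noise)
        self.b -= lr * gb / len(noise)


def train(steps: int = 2000, lr: float = 0.05, seed: int = 0) -> TinyGAN:
    rng = random.Random(seed)
    gan = TinyGAN()
    for _ in range(steps):
        real = [rng.gauss(4.0, 0.5) for _ in range(32)]   # stand-in for authentic data
        noise = [rng.gauss(0.0, 1.0) for _ in range(32)]  # random generator input
        gan.d_step(real, noise, lr)  # adversary learns to separate real from fake
        gan.g_step(noise, lr)        # generator learns to fool the updated adversary
    return gan
```

Calling `train()` and inspecting the generator offset `b` shows it being pulled toward the real mean of 4.0. With a model this small and plain alternating gradient steps, the two players may keep oscillating around that point instead of settling, which mirrors the instability that makes GAN training difficult in practice.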

Deepfake Detection and Prevention Strategies

Chief Information Security Officers (CISOs) must implement a multi-layered strategy to effectively counter deepfake threats, focusing on message evaluation, audio-visual analysis, and authentication tools.

Message Evaluation

- Source Verification: Ensure the source’s legitimacy by cross-referencing with official channels. Trusted sources should be easily verifiable.

- Context Analysis: Validate whether the content aligns with expected behaviors. Unusual messages from public figures, such as investment solicitations, should raise suspicion.

- Purpose Scrutiny: Be wary of messages demanding immediate, unsafe, or unusual actions, like downloading unknown apps, clicking suspicious links, or providing personal details. High-pressure tactics are often a sign of fraud.
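The three message-evaluation checks can be turned into a simple triage rule for inbound requests. The sketch below is an illustration only: the `Request` fields, the keyword lists, and the escalation threshold are hypothetical assumptions rather than a vetted detection rule, and plain keyword matching will miss well-crafted lures.

```python
from dataclasses import dataclass

# Hypothetical indicator lists; tune these to your organization's own incidents.
URGENCY_TERMS = ("urgent", "immediately", "right now", "confidential", "do not tell")
RISKY_ACTIONS = ("wire transfer", "bank account", "gift card", "install",
                 "click the link", "password", "one-time code")


@dataclass
class Request:
    sender: str            # claimed identity, e.g. "CFO"
    channel: str           # channel the request arrived on, e.g. "video_call"
    text: str              # transcript or body of the message
    verified_sender: bool  # True only if confirmed through an official, independent channel


def red_flags(req: Request, usual_channels: set[str]) -> list[str]:
    """Return the message-evaluation red flags raised by a request."""
    flags = []
    lowered = req.text.lower()
    if not req.verified_sender:  # source verification
        flags.append("source not verified via an official channel")
    if req.channel not in usual_channels:  # context analysis
        flags.append(f"unusual channel for this sender: {req.channel}")
    if any(term in lowered for term in URGENCY_TERMS):  # purpose scrutiny
        flags.append("high-pressure or secrecy language")
    if any(term in lowered for term in RISKY_ACTIONS):
        flags.append("requests an unsafe or unusual action")
    return flags


def needs_escalation(req: Request, usual_channels: set[str], threshold: int = 2) -> bool:
    # Escalate to the security team when enough independent red flags co-occur.
    return len(red_flags(req, usual_channels)) >= threshold
```

For example, an unverified video-call instruction from the "CFO" reading "Please make this wire transfer immediately and keep it confidential." raises all four flags when the sender normally uses only email and phone.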

Audio-Visual Analysis

- Facial Features: Identify unnatural blurring, shadows, or inconsistencies around facial features. Lighting and resolution mismatches are key indicators.

- Expression and Eye Movement: Look for unnatural or infrequent blinking, inconsistent light reflections in the eyes, and odd facial expressions. Authentic movements are challenging for deepfakes to replicate perfectly.

- Audio-Video Sync: Check that lip movements match the speech. Listen for flat, monotone delivery or background noise that does not fit the scene, either of which can indicate manipulation.

- Background Consistency: Detect any blurred, out-of-focus, or distorted areas in the background, as inconsistencies here can also signal deepfake manipulation.
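Blinking is one of the few visual cues that is easy to quantify. A common approach computes the eye aspect ratio (EAR) from six landmarks around each eye, p1 to p6 with p1 and p4 at the corners, and counts a blink when the EAR stays below a threshold for a few consecutive frames. The sketch below assumes the landmarks or per-frame EAR values have already been produced by a face-landmark detector (not shown). The 0.21 threshold, the two-frame minimum, and the floor of 8 blinks per minute are illustrative assumptions, and newer deepfakes may blink convincingly, so treat a low blink rate as one weak signal among several.

```python
import math

Point = tuple[float, float]


def eye_aspect_ratio(eye: list[Point]) -> float:
    """EAR for six landmarks p1..p6 ordered around the eye (p1 and p4 are the corners)."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series: list[float], threshold: float = 0.21, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # clip ended mid-blink
        blinks += 1
    return blinks


def blink_rate_suspicious(ear_series: list[float], fps: float, min_per_minute: float = 8.0) -> bool:
    """Flag clips whose blink rate falls below an assumed lower bound for natural blinking."""
    minutes = len(ear_series) / fps / 60.0
    if minutes == 0:
        return False
    return count_blinks(ear_series) / minutes < min_per_minute
```

Single-frame dips below the threshold are ignored, which keeps landmark jitter from being counted as blinks.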

Authentication Tools

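- Content Provenance: Use tools that verify the origin and authenticity of media, such as cryptographically signed metadata or watermarks indicating AI-generated content. Industry efforts such as the C2PA (Coalition for Content Provenance and Authenticity) standard, along with features from major tech companies, aim to make manipulated media easier to identify.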

- Deepfake Detection Solutions: Deploy AI-based tools designed to detect deepfakes by analyzing digital content for signs of manipulation. Techniques such as pixel analysis and anomaly detection algorithms are increasingly effective at identifying deepfakes, although no detector is fully reliable, so their results should be combined with the other checks above.
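The core idea behind content provenance is that a publisher binds a cryptographic hash of the media to signed metadata, so any later edit to the file or the metadata breaks the match. The sketch below illustrates only that idea and is not the C2PA format or any other standard: it uses an HMAC with a shared key as a stand-in for the public-key signatures and certificate chains that real provenance systems rely on, and the manifest fields (`creator`, `sha256`, `signature`) are hypothetical.

```python
import hashlib
import hmac
import json


def sign_manifest(media: bytes, creator: str, key: bytes) -> dict:
    """Publisher side: record the media hash and creator, then sign the record.

    HMAC with a shared key is a simplified stand-in for a real digital signature.
    """
    record = {"creator": creator, "sha256": hashlib.sha256(media).hexdigest()}
    payload = json.dumps(record, sort_keys=True).encode()
    record["signature"] = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return record


def verify_manifest(media: bytes, manifest: dict, key: bytes) -> bool:
    """Consumer side: check the signature over the record, then the media hash."""
    record = {k: v for k, v in manifest.items() if k != "signature"}
    payload = json.dumps(record, sort_keys=True).encode()
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest.get("signature", "")):
        return False  # manifest was forged or altered
    # Manifest is authentic; now confirm the media itself has not been changed.
    return hmac.compare_digest(record.get("sha256", ""), hashlib.sha256(media).hexdigest())
```

A failed check shows that the media or its metadata changed after signing. A missing manifest, by contrast, does not prove content is fake; it only means its origin cannot be confirmed this way.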

Technological Solutions and Best Practices

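- Biometric Authentication: Use advanced biometric methods such as facial recognition, fingerprint scanning, and voice recognition to verify identities and reduce the risk of deepfake-related fraud. Because faces and voices can themselves be synthesized, pair these methods with liveness detection and other authentication factors rather than relying on them alone.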

- AI-Driven Detection Tools: Incorporate sophisticated AI tools that analyze facial movements, audio consistency, and metadata. Integrating these tools into your security infrastructure can help proactively identify and mitigate deepfake threats.

- Employee Training and Awareness: Conduct regular training sessions to build a culture of vigilance. Educate staff about the latest deepfake technologies and provide guidelines for identifying suspicious content. Promote skepticism towards unsolicited communications and emphasize the importance of verifying sources before taking any action.
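Training works best when it is backed by a process that does not depend on anyone spotting the fake. In the ARUP case the instruction came over a video call, and a control that requires high-value transfers to be confirmed by calling back a number taken from an internal directory, plus approval from an independent second person, does not rely on judging whether the call was genuine. The sketch below encodes such a rule; the threshold, the directory contents, and all field and function names are hypothetical assumptions.

```python
from dataclasses import dataclass, field

# Hypothetical internal directory: numbers come from HR records, never from the request itself.
DIRECTORY = {"cfo": "+1-555-0100"}
APPROVAL_THRESHOLD = 10_000  # hypothetical amount above which extra checks apply


@dataclass
class TransferRequest:
    requester: str                   # claimed identity, e.g. "cfo"
    amount: float
    callback_number_used: str = ""   # number actually dialled to confirm the request
    callback_confirmed: bool = False
    approvers: set[str] = field(default_factory=set)


def may_execute(req: TransferRequest, initiator: str) -> tuple[bool, str]:
    """Decide whether a transfer may proceed under an out-of-band verification policy."""
    if req.amount < APPROVAL_THRESHOLD:
        return True, "below threshold"
    known = DIRECTORY.get(req.requester)
    if known is None:
        return False, "requester not in directory"
    # The callback must go to the directory number, not one supplied in the request.
    if req.callback_number_used != known or not req.callback_confirmed:
        return False, "not confirmed by callback to the directory number"
    # At least one approver must be someone other than the initiator and the requester.
    if not (req.approvers - {initiator, req.requester}):
        return False, "needs an independent second approver"
    return True, "verified out of band and dual-approved"
```

The design choice is deliberate: every check is about the process that was followed, not about how convincing the caller looked or sounded.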

Legal and Regulatory Measures

Governments and regulatory bodies are increasingly addressing the deepfake threat. New rules aim to curb AI-enabled scams and protect individuals and organizations from impersonation fraud; for example, the EU AI Act requires deployers of AI systems that generate deepfakes to disclose that the content has been artificially generated or manipulated. Cybersecurity authorities are also publishing guidance on detecting and mitigating the risks associated with deepfake technology.

By implementing these strategies and staying abreast of technological advancements, CISOs can better safeguard their organizations from the escalating threat posed by deepfake scams.

Conclusion

Deepfake technology, while a marvel of modern artificial intelligence, presents significant challenges and threats that must be addressed with urgency and sophistication. For CISOs, the fight against deepfake scams requires a multifaceted approach that integrates advanced technological solutions, comprehensive employee training, and proactive regulatory measures. Leveraging biometric authentication and AI-driven detection tools can strengthen defenses against these sophisticated threats. Continuous education and awareness programs are also vital for fostering a culture of vigilance and skepticism within organizations.

As deepfake technology continues to evolve, so must our strategies to counter it. By staying informed about the latest advancements and adopting innovative solutions, CISOs can better protect their organizations from the pernicious effects of deepfake scams. The journey ahead may be challenging, but with a concerted effort, we can mitigate the risks and safeguard the integrity of our digital landscape.